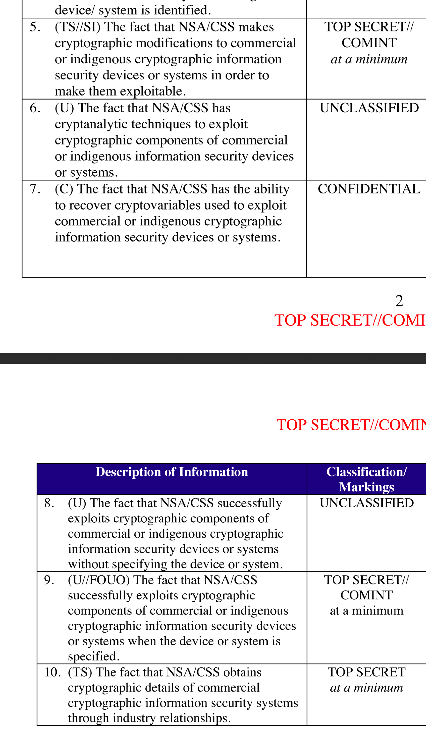

October 19, 2018

AES was worth $250 billion

So says NIST...

10 years ago I annoyed the entire crypto-supply industry:

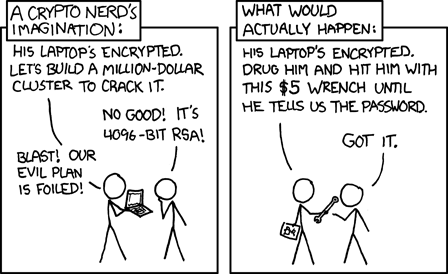

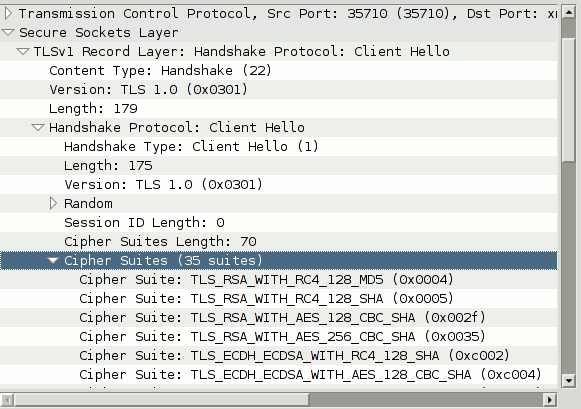

Hypothesis #1 -- The One True Cipher Suite

In cryptoplumbing, the gravest choices are apparently on the nature of the cipher suite. To include the latest fad algo or not? Instead, I offer you a simple solution. Don't.

There is one cipher suite, and it is numbered Number 1.

Cipher suite #1 is always negotiated as Number 1 in the very first message. It is your choice, your ultimate choice, and your destiny. Pick well.

The One True Cipher Suite was born of watching projects and groups wallow in the mire of complexity, as doubt caused teams to add multiple algorithms, a complexity that easily doubled the cost of the protocol with consequent knock-on effects & costs & divorces & breaches & wars.

It (The One True Cipher Suite as an aphorism) was widely ridiculed in crypto and standards circles. Developers and standards groups like the IETF just could not let go of crypto agility, the term that was born to champion the alternative. This sacred cow led the TLS group to field something like 200 standard suites in SSL, and then radically reduce them to 30 or 40 over time.

Now, NIST has announced that AES as a single standard algorithm is worth $250 billion in economic benefit over the 20 years of its project lifetime, from 1998 to now.

h/t to Bruce Schneier, who also said:

"I have no idea how to even begin to assess the quality of the study and its conclusions -- it's all in the 150-page report, though -- but I do like the pretty block diagram of AES on the report's cover."

One good suite based on AES allows agility within the protocol to be dropped. Entirely. Instead, upgrade the entire protocol to an entirely new suite every 7 years. That's what I said, if anyone was asking. No good algorithm lasts less than 7 years.

Crypto-agility was a sacred cow that should have been slaughtered years ago, but maybe it took this report from NIST to lay it down: $250 billion of benefit.
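What dropping in-protocol agility might look like on the wire, as a minimal sketch: it assumes a hypothetical format where the first byte of the very first message is the suite number. The receiver accepts exactly one value and there is no fallback list to negotiate down; the names `make_hello`, `read_hello` and `UnsupportedSuite` are invented for illustration.

```python
# Hypothetical wire format: byte 0 of the first message is the suite number.
# This protocol version knows exactly one suite, so there is nothing to negotiate.
ONE_TRUE_SUITE = 1


class UnsupportedSuite(Exception):
    """Raised when a peer offers anything other than the one true suite."""


def make_hello(payload: bytes) -> bytes:
    """Build the very first message, announcing suite Number 1."""
    return bytes([ONE_TRUE_SUITE]) + payload


def read_hello(message: bytes) -> bytes:
    """Check the suite number and return the rest of the message.

    A mismatch is an error, not an invitation to agree on something else.
    """
    if not message or message[0] != ONE_TRUE_SUITE:
        raise UnsupportedSuite("only suite Number 1 is spoken here")
    return message[1:]
```

Under this design, the 7-year upgrade ships as a new protocol whose suite Number 1 is a different set of algorithms, rather than as a second entry in a negotiable list.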

In another footnote, we of the Cryptix team supported the AES project because we knew it was the way forward. Raif built the Java test suite and others in our team wrote and deployed contender algorithms.

February 27, 2017

Today I’m trying to solve my messaging problem...

Financial cryptography is that space between crypto and finance which by nature of its inclusiveness of all economic activities, is pretty close to most of life as we know it. We bring human needs together with the net marketplace in a secure fashion. It’s all interconnected, and I’m not talking about IP.

Today I’m trying to solve my messaging problem. In short, I want to tweak my messaging design to better support the use case or community I have in mind, moving it from the old client-server days into a p2p world. But to solve this I need to solve the institutional concept of persons, i.e. those who send messages. To solve that I need an identity framework. To solve the identity question, I need to understand how to hold assets, as an asset not held by an identity is not an asset, and an identity without an asset is not an identity. To resolve that, I need an authorising mechanism by which one identity accepts another for asset holding, what banks would call "onboarding", but it needs to work for people not numbers, and to solve that I need a voting solution. To create a voting solution I need a resolution to the smart contracts problem, which needs consensus over data into facts, and to handle that I need to solve the messaging problem.

Bugger.

A solution cannot therefore be described in objective terms - it is circular, like life, recursive, dependent on itself. Which then leads me to thinking of an evolutionary argument, which, assuming an argument based on a higher power is not really on the table, makes the whole thing rather probabilistic. Hopefully, the solution is more probabilistically likely than human evolution, because I need a solution faster than 100,000 years.

This could take a while. Bugger.
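The chain of "to solve X, I need Y" in this post can be written down as a dependency graph and handed to a topological sort, the usual way to find a build order. A sketch using Python's standard `graphlib` module (3.9+); the node names are paraphrases of the paragraph, not a formal design.

```python
from graphlib import CycleError, TopologicalSorter

# Each key depends on (needs to be preceded by) the values listed.
needs = {
    "messaging": {"persons"},
    "persons": {"identity"},
    "identity": {"assets"},
    "assets": {"onboarding"},
    "onboarding": {"voting"},
    "voting": {"smart contracts"},
    "smart contracts": {"consensus over facts"},
    "consensus over facts": {"messaging"},
}


def build_order(graph):
    """Return (order, None), or (None, cycle) if no order exists."""
    try:
        return list(TopologicalSorter(graph).static_order()), None
    except CycleError as err:
        # err.args[1] lists the nodes on the cycle; first and last are the same.
        return None, err.args[1]


order, cycle = build_order(needs)
```

Here `order` comes back as `None`, and `cycle` walks all eight problems round to where it started: there is no place to begin.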

November 15, 2015

the Satoshi effect - Bitcoin paper success against the academic review system

One of the things that has clearly outlined the dilemma for the academic community is that self-published or "informally published" papers (to borrow a slur from the inclusion market) are making some headway, at least if the Bitcoin paper is any guide.

Here's a quick straw poll checking a year's worth of papers. In the narrow field of financial cryptography, I trawled through the FC 2009 and WEIS 2009 conference proceedings. For cryptology in general I added Crypto 2009. I used Google Scholar to report direct citations, and checked what I'd found against Citeseer (I also added the number of citations for the top citer in the rightmost column, as an additional check; you can mostly ignore that number). I came across Wang et al.'s 2005 paper on SHA-1, and a few others from the early 2000s, and added them for comparison; I'm unsure what other crypto papers are as big in the 2000s.

| Conf | Paper | Google Scholar | Citeseer | Citations of top citer |
|---|---|---|---|---|
| JMLR 2003 | Latent Dirichlet allocation | 12788 | 2634 | 26202 |
| OSDI 2004 | MapReduce: simplified data processing on large clusters | 15444 | 2023 | 14179 |
| CACM 1981 | Untraceable electronic mail, return addresses, and digital pseudonyms | 4521 | 1397 | 3734 |
| CACM 1985 | Security without identification: transaction systems to make Big Brother obsolete | 1780 | 470 | 2217 |
| Crypto 2005 | Finding collisions in the full SHA-1 | 1504 | 196 | 886 |
| SIGKDD 2009 | The WEKA data mining software: an update | 9726 | 704 | 3099 |
| STOC 2009 | Fully homomorphic encryption using ideal lattices | 1923 | 324 | 770 |
| self | Bitcoin: A peer-to-peer electronic cash system | 804 | 57 | 202 |
| Crypto 2009 | Dual System Encryption: Realizing Fully Secure IBE and HIBE under Simple Assumptions | 445 | 59 | 549 |
| Crypto 2009 | Fast Cryptographic Primitives and Circular-Secure Encryption Based on Hard Learning Problems | 223 | 42 | 485 |
| Crypto 2009 | Distinguisher and Related-Key Attack on the Full AES-256 | 232 | 29 | 278 |
| FC 2009 | Secure multiparty computation goes live | 191 | 25 | 172 |
| WEIS 2009 | The privacy jungle: On the market for data protection in social networks | 186 | 18 | 221 |
| FC 2009 | Private intersection of certified sets | 84 | 24 | 180 |
| FC 2009 | Passwords: If We’re So Smart, Why Are We Still Using Them? | 89 | 16 | 322 |
| WEIS 2009 | Nobody Sells Gold for the Price of Silver: Dishonesty, Uncertainty and the Underground Economy | 82 | 24 | 275 |
| FC 2009 | Optimised to Fail: Card Readers for Online Banking | 80 | 24 | 226 |

What can we conclude? Within the general infosec/security/crypto field in 2009, the Bitcoin paper ranks second, after Fully homomorphic encryption (which is probably not even in use?). If one includes all CS papers in 2009, then it's likely pushed down 100 or so slots according to Citeseer, although I didn't run that test.
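The "second after FHE" claim can be checked mechanically against the table. A small sketch that ranks the 2009 security/crypto rows by either citation column; it leaves out the non-security WEKA paper and the pre-2009 rows, counts the Bitcoin paper with the 2009 cohort as the text does, and uses shortened titles.

```python
# (Google Scholar, Citeseer) counts for the 2009 security/crypto rows of the table.
papers_2009 = {
    "Fully homomorphic encryption using ideal lattices": (1923, 324),
    "Bitcoin: A peer-to-peer electronic cash system": (804, 57),
    "Dual System Encryption": (445, 59),
    "Distinguisher and Related-Key Attack on the Full AES-256": (232, 29),
    "Fast Cryptographic Primitives and Circular-Secure Encryption": (223, 42),
    "Secure multiparty computation goes live": (191, 25),
    "The privacy jungle": (186, 18),
    "Passwords: If We're So Smart, Why Are We Still Using Them?": (89, 16),
    "Private intersection of certified sets": (84, 24),
    "Nobody Sells Gold for the Price of Silver": (82, 24),
    "Optimised to Fail": (80, 24),
}

SCHOLAR, CITESEER = 0, 1
BITCOIN = "Bitcoin: A peer-to-peer electronic cash system"


def rank(papers, column):
    """Titles sorted by the chosen citation count, highest first."""
    return sorted(papers, key=lambda title: papers[title][column], reverse=True)


def position(papers, title, column):
    """1-based rank of one paper under the chosen count."""
    return rank(papers, column).index(title) + 1
```

By the Google Scholar column the Bitcoin paper comes second, as stated; by the Citeseer column it slips to third, just behind Dual System Encryption (59 versus 57).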

If we go back in time there are many more influential papers by citations, but citations clearly need time to accumulate. There may well be others I've missed, but so far we're looking at one of a very small handful of very significant papers, at least in the cryptocurrency world.

It would be curious if we could measure the impact of self-publication on citations - but I don't see a way to do that as yet.

June 28, 2015

The Nakamoto Signature

The Nakamoto Signature might be a thing. In 2014, the Sidechains whitepaper by Back et al. introduced the term Dynamic Membership Multiple-party Signature, or DMMS -- because we love complicated terms and long impassable acronyms.

Or maybe we don't. I can never recall DMMS nor even get it right without thinking through the words; in response to my cognitive poverty, Adam Back suggested we call it a Nakamoto signature.

That's actually about right in cryptology terms. When a new form of cryptography turns up and it lacks an easy name, it's very often named after its inventors. Famous companions to this tradition include RSA for Rivest, Shamir and Adleman, and Schnorr for the signature scheme that Bitcoin wants to move to. Rijndael, our most popular secret key algorithm, takes its name from its inventors, Rijmen and Daemen, although you might know it these days as AES. In the old days of blinded formulas for untraceable cash, the frontrunners were signatures named after Chaum, Brands and Wagner.

On to the Nakamoto signature. Why is it useful to label it so?

Because, with this literary device, it is now much easier to talk about the blockchain. Watch this:

The blockchain is a shared ledger where each new block of transactions - the 10 minutes thing - is signed with a Nakamoto signature.

Fewer than 25 words! Outstanding! We can now separate this discussion into two things to understand: first, what's a shared ledger, and second, what's the Nakamoto signature?

Each can be covered as a separate topic. For example:

The shared ledger can be seen as a series of blocks, each of which is a single document presented for signature. Each block consists of a set of transactions built on the previous set. Each succeeding block changes the state of the accounts by moving money around; so given any particular state we can create the next block by filling it with transactions that do those money moves, and signing it with a Nakamoto signature.
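A toy sketch of that description: each block names its predecessor by hash and carries transactions that move money between accounts, and applying the blocks in order yields the current state. The JSON encoding, SHA-256 and the account names are assumptions for illustration, and the Nakamoto signature itself is deliberately left out.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    prev_hash: str                            # hash of the block this one builds on
    txs: list = field(default_factory=list)   # (payer, payee, amount) money moves

    def digest(self) -> str:
        """Hash a canonical encoding of the block: the document to be signed."""
        encoded = json.dumps([self.prev_hash, self.txs], sort_keys=True)
        return hashlib.sha256(encoded.encode("utf-8")).hexdigest()


def apply_block(state: dict, block: Block) -> dict:
    """Return the next account state after the block's money moves."""
    new_state = dict(state)
    for payer, payee, amount in block.txs:
        if new_state.get(payer, 0) < amount:
            raise ValueError(f"{payer} cannot pay {amount}")
        new_state[payer] = new_state.get(payer, 0) - amount
        new_state[payee] = new_state.get(payee, 0) + amount
    return new_state


start = {"alice": 10}
b1 = Block(prev_hash="0" * 64, txs=[("alice", "bob", 3)])
b2 = Block(prev_hash=b1.digest(), txs=[("bob", "carol", 1)])
state = apply_block(apply_block(start, b1), b2)
# state == {"alice": 7, "bob": 2, "carol": 1}
```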

Having described the shared ledger, we can now attack the Nakamoto signature:

A Nakamoto signature is a device to allow a group to agree on a shared document. To eliminate the potential for inconsistencies (aka disagreement), the group engages in a lottery to pick one person's version as the one true document. That lottery is effected by all members of the group racing to create the longest hash over their copy of the document. The longest hash wins the prize and also becomes a verifiable 'token' of the one true document for members of the group: the Nakamoto signature.

That's it, in a nutshell. That's good enough for most people. Others however will want to open that nutshell up and go deeper into the hows, whys and whethers of it all. You'll note I left plenty of room for argument above; economists will look at the incentive structure in the lottery, and ask if a prize in exchange for proof-of-work is enough to encourage an efficient agreement, even in the presence of attackers. Computer scientists will ask 'what happens if...' and search for ways to make it not so. Entrepreneurs might be more interested in what other documents can be signed this way. Cryptographers will pounce on that longest hash thing.
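For those who want to open the nutshell, here is a minimal proof-of-work sketch. It reads "longest hash" loosely as the common convention of counting leading zero bits in a SHA-256 digest; Bitcoin itself compares a double SHA-256 of the block header against a numeric target, so treat this as an illustration of the lottery, not Bitcoin's rule. The difficulty of 12 bits is an arbitrary assumption chosen so the example runs quickly.

```python
import hashlib


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the front of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def mine(document: bytes, difficulty: int) -> int:
    """Try nonces until the hash over (document, nonce) clears the difficulty."""
    nonce = 0
    while True:
        digest = hashlib.sha256(document + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(document: bytes, nonce: int, difficulty: int) -> bool:
    """Any member of the group can check the winning token with one hash."""
    digest = hashlib.sha256(document + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


nonce = mine(b"block 42", 12)
```

The asymmetry is the point: winning costs on the order of 2^difficulty hashes, while checking the winner costs one.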

But for most of us we can now move on to the real work. We haven't got time for minutiae. The real joy of the Nakamoto signature is that it breaks what was one monolithic incomprehensible problem into two more understandable ones. Divide and conquer!

The Nakamoto signature needs to be a thing. Let it be so!

NB: This article was kindly commented on by Ada Lovelace and Adam Back.

April 29, 2015

The Sum of All Chains - Let's Converge!

Here's a rough transcript of a recent talk I did for CoinScrum & Proof of Work's Tools for the Future (video).

I was asked to pick my own topic, and what I was really interested in were some thoughts as to where we are going with the architecture of the blockchain and so forth. Something that has come out of my thoughts is whether we can converge all the different ideas out there in identification terms. It's a thought process, it's coming bit by bit, it's really quite young in its evolution. A lot of people might call it complete crap, but here it is anyway.

Let's converge... Imagine there is a person. We can look at a picture, what else is there to say? Not a lot. But imagine now we've got another person. This one is Bob, who is male and blue.

What does that mean? Well, what it really means is that if we've got two people, we've got some differences, and we might have to give these people names. Naming only becomes important when we've got a few things, or a lot of things, which is somewhat critical in some areas. What then is Identity about? In this context it is about the difference between these entities, Alice versus Bob. Things we can look at with any particular person include age and gender, male versus female. We could just do the old ontology thing and collect all this information together into a database, and that should be sufficient.

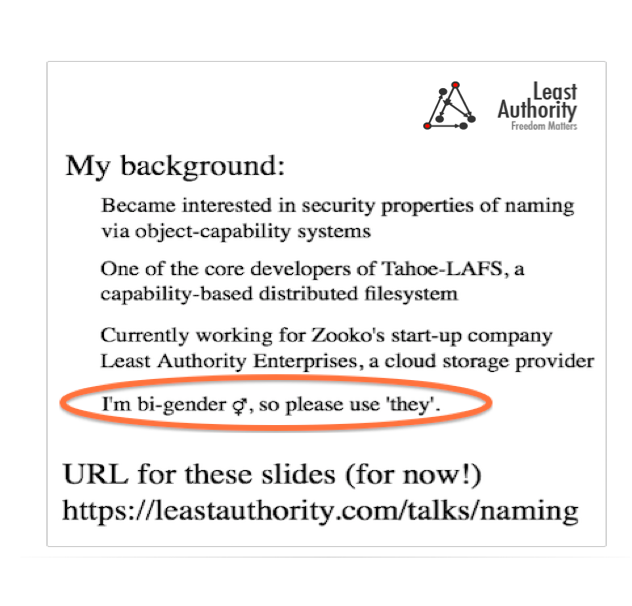

But things can be a bit tricky. Gender used to be quite simple. Now it can be unknown, unstated, it can change, as a relatively new phenomenon. On this random slide off the net, down there on the right, it says "I'm bi-gender, so please use they." This brave person, they've gone out on the net, they're interested in secure properties of naming via object capability systems, which is really interesting as that's what we're talking about here, and they want us to refer to them as they.

This really mucks up gender completely as we can no longer use binary assumptions. How do we deal with this? There's a trap here, a trap of over-measurement, a trap of ontology, which leads to paralysis if we're not careful.

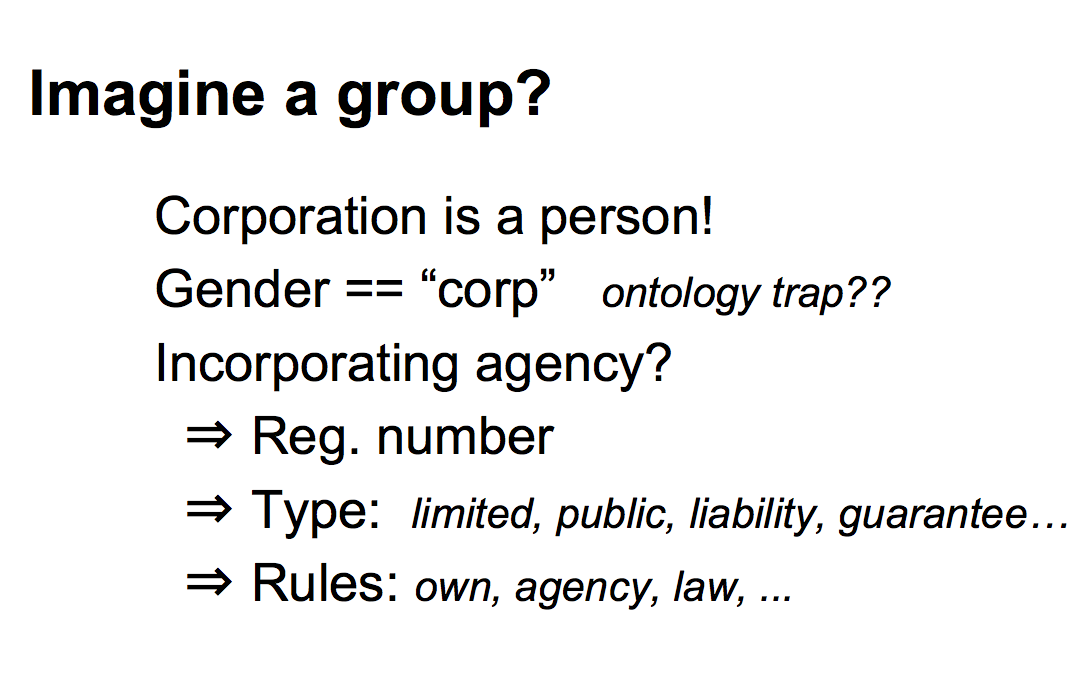

Imagine a group of people. What happens when we get a group of people together? It can be called an incorporation, we might need a number, we might need a type. We're going to need rules and regulations, or at least someone will impose regulations over the top of us. We've got quite a lot of information to collect.

We can go further afield. In times historical, we might recall the old sea stories, where they would talk about the ships having names and the engines having names. In modern times, computers have names as do programs. Eliza is a famous old psychology program, and Hal and Skynet come from movies. When the Internet started up it seemed as if every second machine was named Gandalf. There are lots of attributes we can collect about these things as well -- can we identify machines in the same way that we can identify people? Why not?

Let's talk about a smart contract. It's out there, it exists, it's permanent, it's got some sense of reality, so whatever we think it is -- I personally think it is a state machine with money, and there are lots of people hunting around for definitions to try and understand this -- whatever we think it is, we can measure it. We have to be able to find it, we have to be able to talk to it. We can find its code, and certainly in the blockchain instantiation of the concept, its code is everywhere, and it's running. We can find its state, if it's a multistate machine, and those are the interesting ones I think. And it probably has to have a name, because it probably needs to market itself out to the world, and you can only really do that with a name. We can collect this information.
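Taking "a state machine with money" literally, here is a hypothetical escrow contract: it holds a balance and only moves between named states along allowed transitions. The states, parties and rules are invented for illustration and do not correspond to any particular platform.

```python
from enum import Enum


class State(Enum):
    AWAITING_DEPOSIT = "awaiting deposit"
    FUNDED = "funded"
    RELEASED = "released"
    REFUNDED = "refunded"


class Escrow:
    """A toy state machine with money: buyer deposits, then funds go one way."""

    def __init__(self, buyer: str, seller: str, price: int):
        self.buyer, self.seller, self.price = buyer, seller, price
        self.state = State.AWAITING_DEPOSIT
        self.balance = 0
        self.paid = {}  # who received how much when the contract finished

    def _require(self, expected: State) -> None:
        """Refuse any transition not allowed from the current state."""
        if self.state is not expected:
            raise RuntimeError(f"not allowed in state {self.state.value}")

    def deposit(self, amount: int) -> None:
        self._require(State.AWAITING_DEPOSIT)
        if amount != self.price:
            raise ValueError("deposit must equal the agreed price")
        self.balance = amount
        self.state = State.FUNDED

    def confirm_delivery(self) -> None:
        self._require(State.FUNDED)
        self.paid[self.seller] = self.balance
        self.balance = 0
        self.state = State.RELEASED

    def refund(self) -> None:
        self._require(State.FUNDED)
        self.paid[self.buyer] = self.balance
        self.balance = 0
        self.state = State.REFUNDED
```

Everything the paragraph says we can measure is there: code, a current state, and money held by the contract itself.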

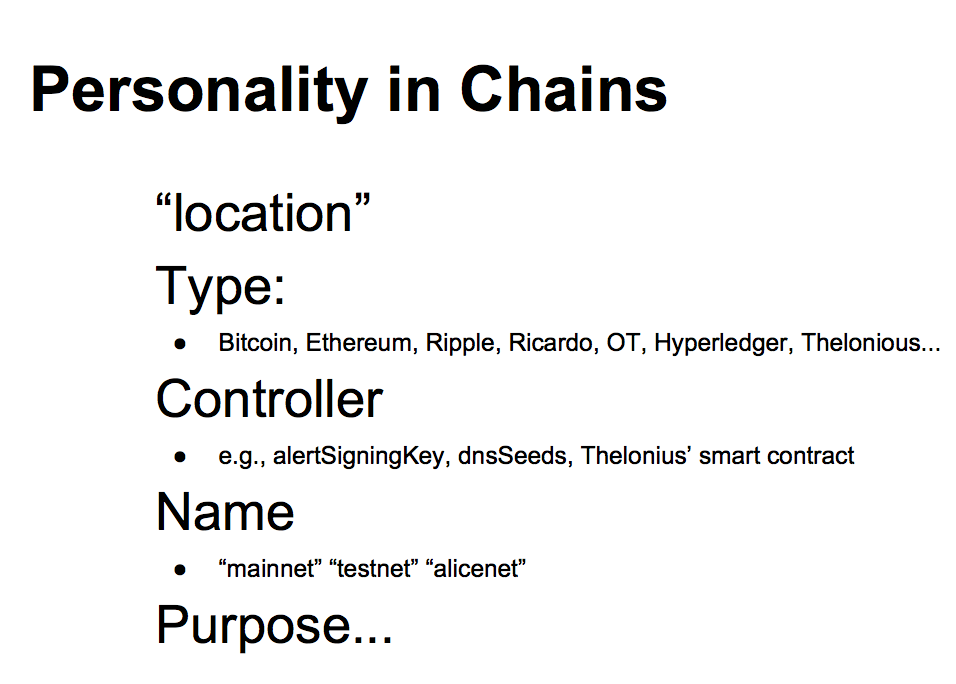

If we can do a smart contract, why not a blockchain? With a blockchain, there's certainly a location: there's a bunch of IP numbers out there, a bunch of places we can talk to. There are a lot of technology types out there, and these are important in interpreting the results that come back from it, or how to talk to them, protocols and so forth. There are lots of different types. There's a controller, or there's probably a controller. Bitcoin for example has alertSigningKeys and dnsSeeds. Thelonious for some reason has a smart contract at the beginning, which is interesting in and of itself.

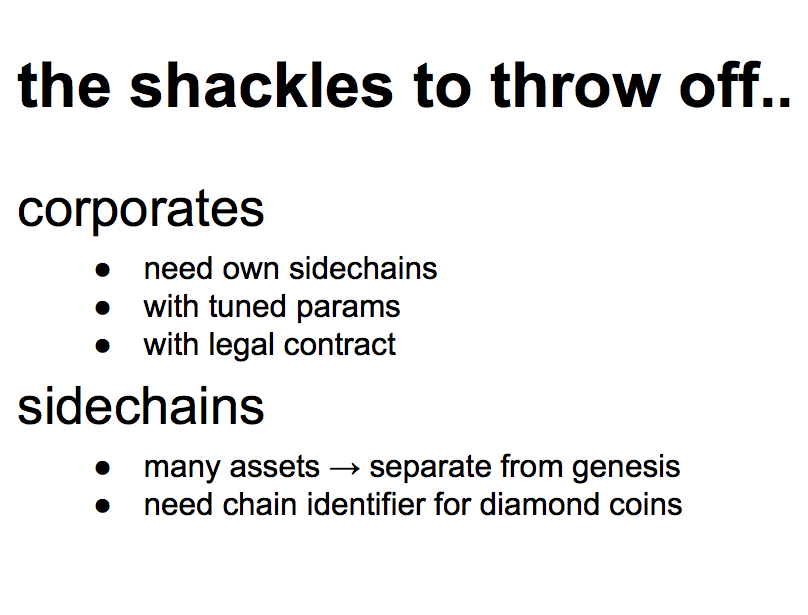

And, blockchains have a name. Probably a bit of a surprise, there are 4 bitcoin chains. One's called "mainnet", that's the one you're used to using, then there's "testnet" and then a something net and a something-else net. So if we can have a "mainnet", can we also have "AliceNet" or "Mynet?" Yes, in theory we can. If you're in the business of doing interesting things with bitcoin, you probably want to talk to corporates, and when you go in to talk to corporates, they probably turn around and say "love the concept, but we want our own one! We want to run our own blockchain. We probably want a sidechain, or one of those variants. We want to change the parameters, we want to change it so the performance is different. We want it to go faster, or maybe slower. More security perhaps, or more space. Whatever it is, we want smart contracts rather than money, or money rather than smart contracts, or whatever."


If we're talking about contracts, they (corporates) probably want a legal environment because they do legal environments with everything else. When you walk into the building, there's a contract. If you walk onto a train platform, there's a contract, it's posted up somewhere. If you buy sweets in a shop, there's a contract. Corporates then likely want some form of contract in their blockchain.

Then there are sidechains. These become interesting because they break a lot of assumptions. The interest behind sidechains has a lot to do with multiple assets. Some projects, like Counterparty or coloured coins, are trying to issue multiple assets, and sidechains are trying to do this in a proper fashion. Which means we need to do things like separate out the genesis block so that it starts the chain, not the currency. We need a separate genesis for the assets. We also need chain identifiers. This is a consequence of moving value from one chain to another; it's a sidechains thing. If you're moving coins between different chains, you then have to move them back again if you want to redeem them on the original chain. They must follow the exact same path, otherwise you get a problem if two coins coming from the same base chain go by different paths and end up on the same sidechain. Something we called diamond coins.
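One way to picture the "exact same path" rule is to let each coin carry the stack of chain identifiers it has travelled through: moving to another chain pushes, returning pops, and a coin may only return to the chain it arrived from. This is a hypothetical bookkeeping sketch, not the mechanism from the sidechains paper; the chain names are made up. It flags the diamond case: two coins from the same base chain, sitting on the same sidechain, but with different histories.

```python
class Coin:
    """Tracks the path of chain identifiers a coin has taken from its base chain."""

    def __init__(self, base_chain: str):
        self.path = [base_chain]

    @property
    def chain(self) -> str:
        """The chain the coin currently lives on."""
        return self.path[-1]

    def move_to(self, chain: str) -> None:
        """Move to another chain, remembering where we came from."""
        self.path.append(chain)

    def return_to(self, chain: str) -> None:
        """Move back; only the chain we arrived from will redeem the coin."""
        if len(self.path) < 2 or self.path[-2] != chain:
            raise ValueError(f"cannot return to {chain} from {self.chain}")
        self.path.pop()


def fungible(a: Coin, b: Coin) -> bool:
    """Two coins are interchangeable only if they took the same path."""
    return a.path == b.path


x = Coin("main")
x.move_to("side-A")
x.move_to("side-C")
y = Coin("main")
y.move_to("side-B")
y.move_to("side-C")
diamond = x.chain == y.chain and not fungible(x, y)  # True: a diamond coin pair
```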

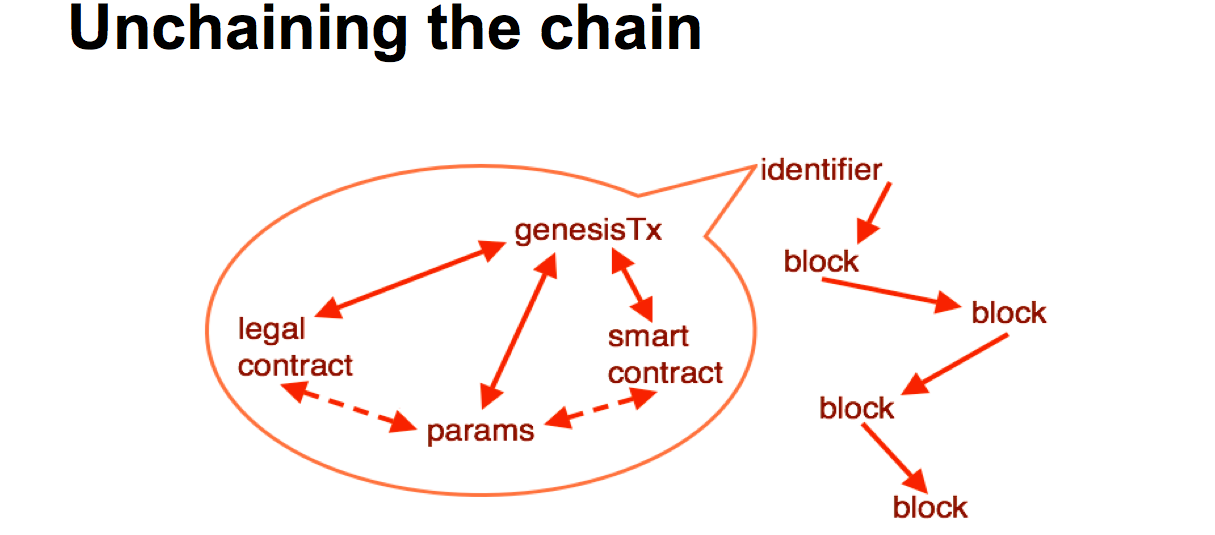

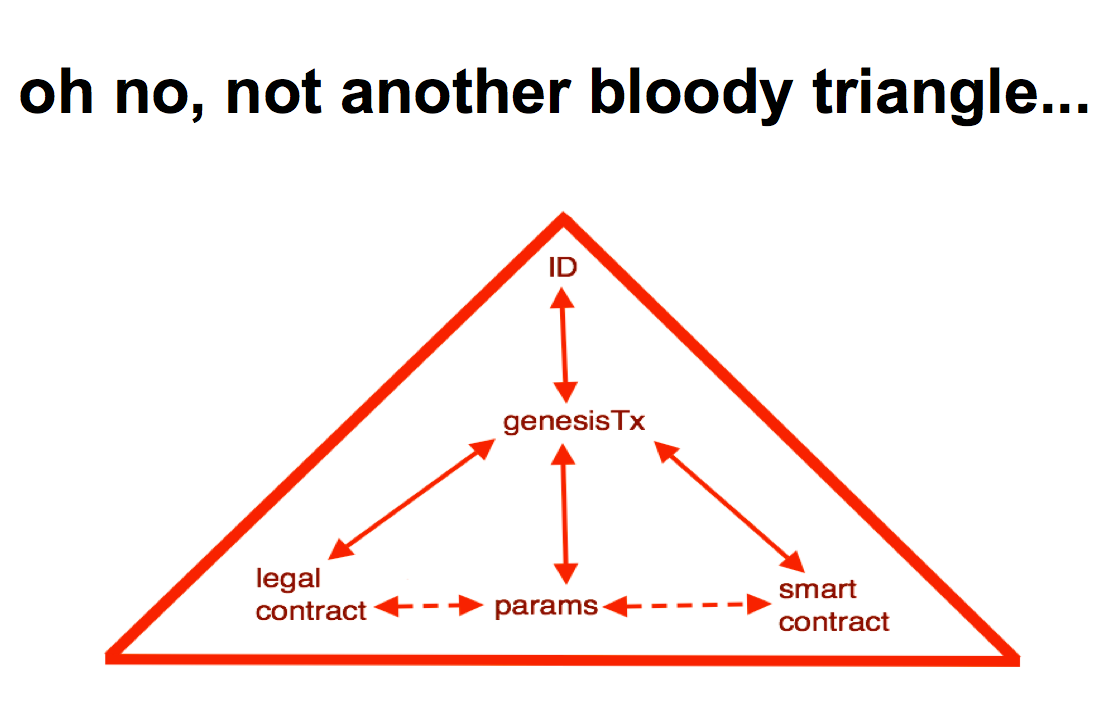

Where is this all going? We need to make some changes. We can look at the blockchain and make a few changes. It sort of works out that if we take the bottom layer, we've got a bunch of parameters from the blockchain; these are hard coded, but they exist. They are in the blockchain software, hard coded into the source code. So we need to get those parameters out into some form of description if we're going to have hundreds, thousands or millions of blockchains. It's probably a good idea to stick a smart contract in there, whose purpose is to start off the blockchain, just for that purpose. And having talked about the legal context, when going into the corporate scenario, we probably need the legal contract -- we're talking text, legal terms and blah blah -- in there and locked in. We also need an instantiation, we need an event to make it happen, and that is the genesis transaction. Somehow all of these need to be brought together, locked into the genesis transaction, and out the top pops an identifier. That identifier can then be used by the software in various and many ways, such as getting the particular properties out to deal with that technology, and moving value from one chain to another. This particular picture is a slight variation on what is going on with current blockchain technology, but it can lead in to the rest of the devices we've talked about.
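A sketch of the "parameters, smart contract and legal contract locked into the genesis, out pops an identifier" step, assuming SHA-256 over a canonical JSON encoding. Every field name and value below is hypothetical; the point is only that changing any one ingredient yields a different chain identifier, while the order in which parameters are written down does not matter.

```python
import hashlib
import json


def genesis_identifier(params: dict, contract_code: str, legal_text: str) -> str:
    """Bind parameters, code and legal prose into one hash-based identifier."""
    package = {
        "params": params,
        "smart_contract": contract_code,
        "legal_contract": legal_text,
    }
    # sort_keys and fixed separators give every party the same bytes to hash.
    canonical = json.dumps(package, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical parameters that would otherwise be hard coded in the source.
params = {"name": "AliceNet", "block_interval_s": 600, "max_block_bytes": 1_000_000}
code = "def start_chain(): pass"
terms = "Participants agree to the AliceNet rules."
chain_id = genesis_identifier(params, code, terms)
```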

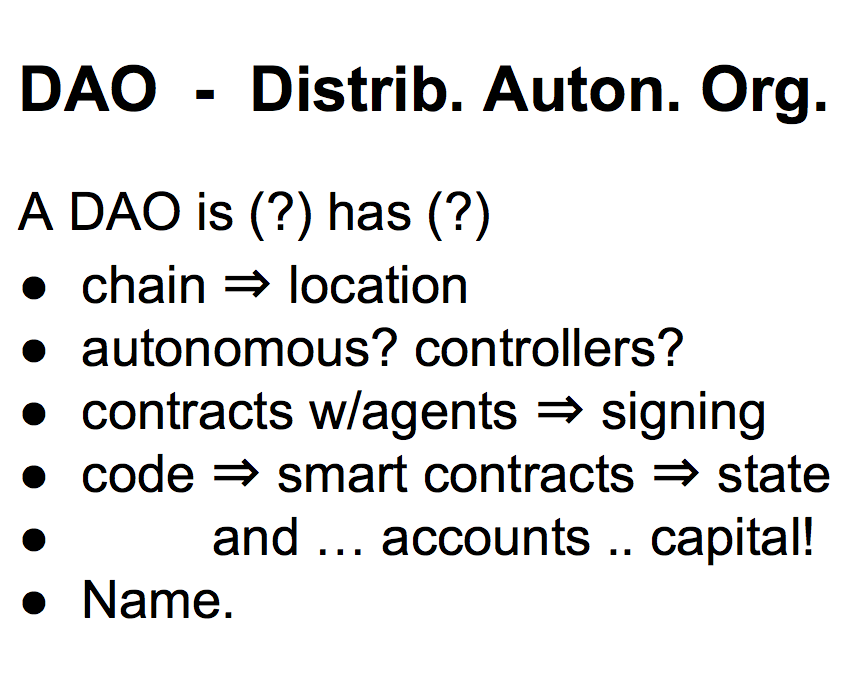

Let's talk about a DAO or distributed autonomous organisation. What is that - I'm not entirely sure what it is, and I don't think anyone else is either. It's a sort of corporation that doesn't have the traditional instantiation we are familiar with, it's a thing out there that does stuff, like a magic corporation. It probably lives on a chain somewhere, so it has a location. It's probably controlled by somebody. It might be autonomous, but who would set it up for autonomous reasons, what's the purpose of that? It doesn't make a lot of sense because these things generally want to make profit so the profit needs to go somewhere. Like corporations today, we expect a controller. It probably needs to make contracts with other DAOs, or other entities out there. Which means it needs to do some signing, which means it needs keys. Code, accounts, capital, it needs a whole bunch of stuff. And it has a name, because it has to market itself.

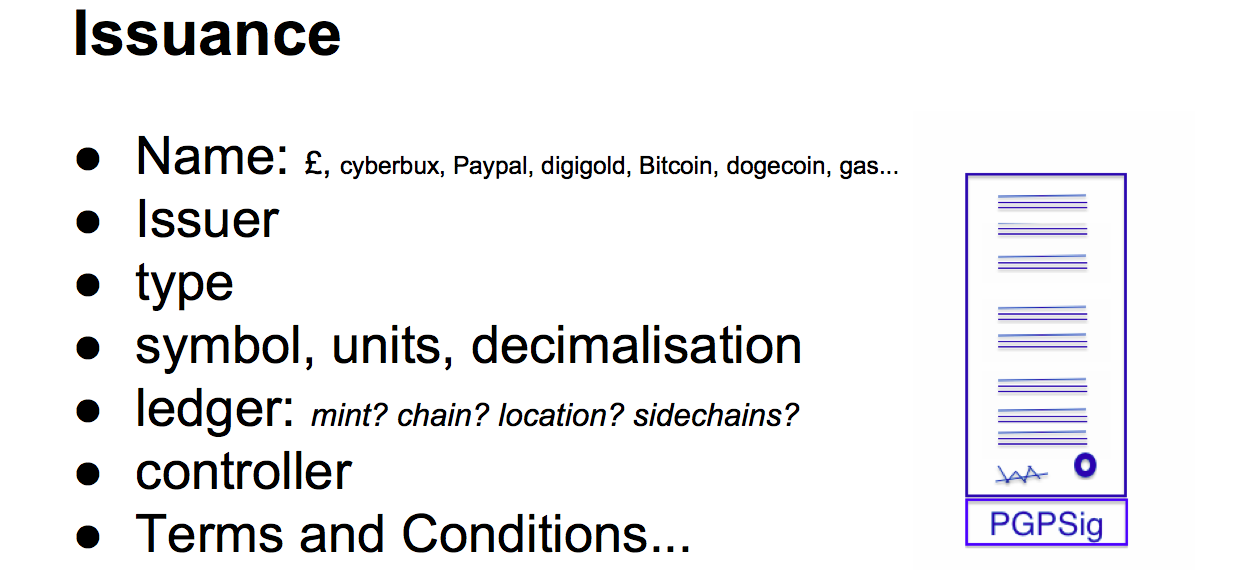

What am I saying here? It kind of looks a lot like the other things above. Issuances have existed in the past; Bitcoin is not the beginning of this space. Before Bitcoin we've had the pound sterling for several hundred years as an issuance. Cyberbux was issued around 1992 as a digital currency. It was given away by David Chaum's company DigiCash, which issued about 750,000 of them before he was told to stop doing that. PayPal came along as an issuance. Digigold was a gold-based currency that I was involved with back in the early 2000s, then it was quiet for a long time until along came Bitcoin. These issuances all have things in common. They all had an issuer in some sense or other. It is possible to dispute it with Bitcoin, but Satoshi was there starting up the software, so he's essentially the issuer, albeit unstated. The issuances have types of technology and types of value such as bonds and shares, etc. There is stuff about symbols to print out, as the user wants to know what it looks like on the screen. Is it GBP or BTC? What are the units, what are the subunits, how many decimal places? We need to know how minting is done. That's hard coded into bitcoin, but it doesn't have to be; it could be in the genesis identity package, so we can tune the parameters. There needs to be a controller. As I pointed out, there are some vestiges of control with bitcoin, and future chains will have more control, whereas issuances will have even more control because issuances are typically done by issuers. You want them to be in control because they're making legal claims, which brings us to terms and conditions, so we also need a legal contract.
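The units, subunits and decimal places questions usually come down to storing integer counts of the smallest subunit and letting the issuance's description say how to display them. A small sketch; the `Issuance` descriptor and its fields are hypothetical, while the decimal counts are the familiar ones (100 pence to the pound, 100,000,000 satoshis to the bitcoin).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issuance:
    symbol: str     # what the user sees on screen, e.g. "GBP" or "BTC"
    decimals: int   # how many subunit digits sit after the point


def display(issuance: Issuance, subunits: int) -> str:
    """Format an integer amount of subunits for the screen."""
    sign = "-" if subunits < 0 else ""
    whole, frac = divmod(abs(subunits), 10 ** issuance.decimals)
    if issuance.decimals == 0:
        return f"{sign}{whole} {issuance.symbol}"
    return f"{sign}{whole}.{frac:0{issuance.decimals}d} {issuance.symbol}"


GBP = Issuance("GBP", 2)
BTC = Issuance("BTC", 8)
```

Keeping amounts as integers avoids floating-point rounding; only the display step needs to know the decimal places, so that parameter can live in the issuance's description rather than in the code.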

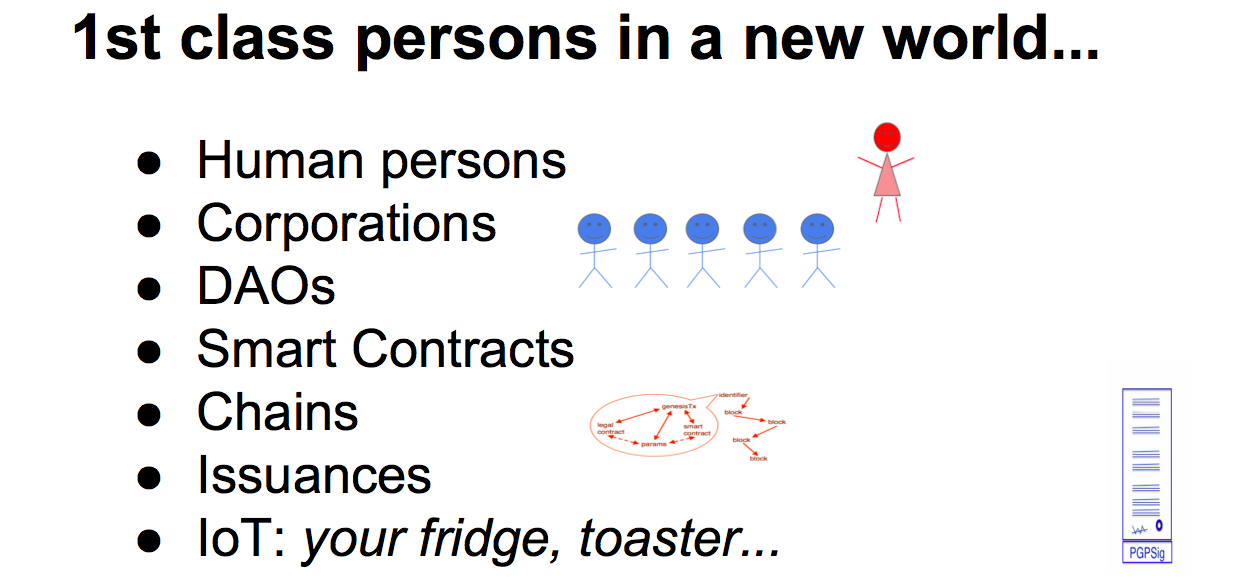

It looks like the other things, as it has the same elements. Where I'm coming to is that there is a list of First Class Persons in this new space that have the same set of characteristics. Humans, who do stuff; corporations, making decisions; DAOs, making profits; smart contracts, making decisions based on inputs; chains, which are running and persistent and supposed to be there. Issuances are supposed to serve your value-storing needs for quite some time. The Internet of Things might seem a little funny, but your fridge is sitting there with a chip in it, running, and it can do things. It could run a smart contract, it could run a blockchain, it could do anything you like; it has these attributes, so it could act as a first class person.

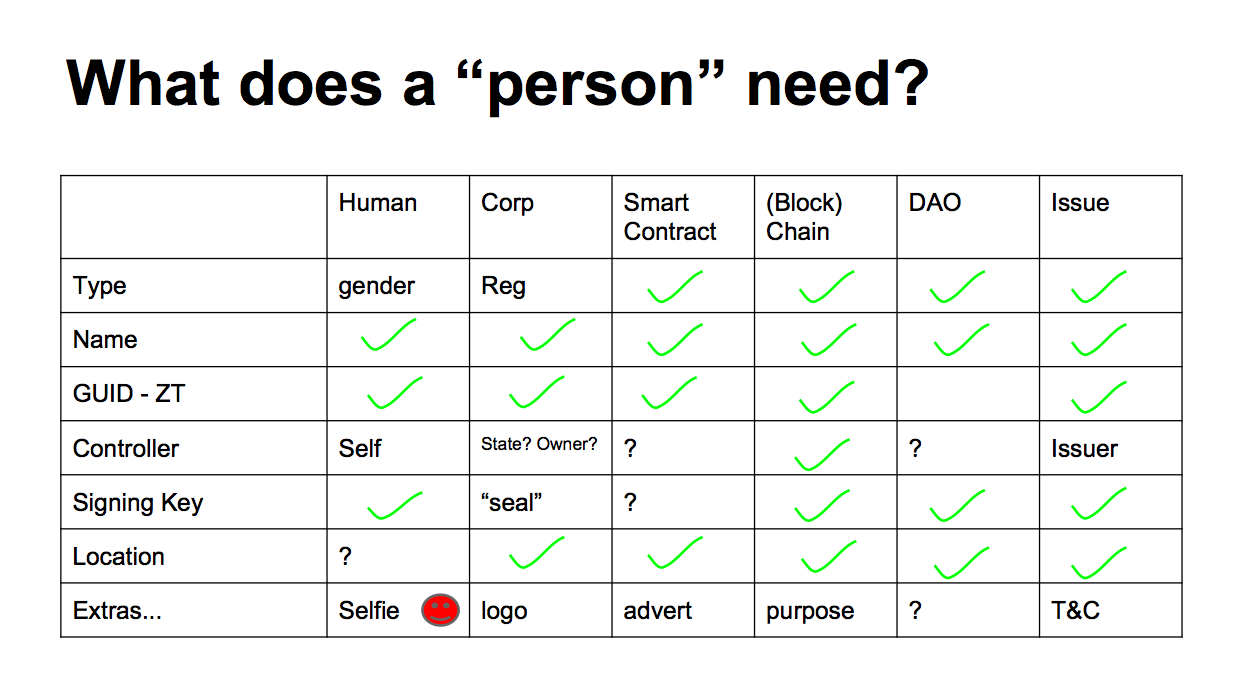

What do these first class persons need? If we line them up on the top axis, and run the characteristics down the left axis, it turns out that we can fill out the table and find that everything needs everything, most of the time. Sure, there are some exceptions, but that's life in the IT business. For the most part they all look the same.

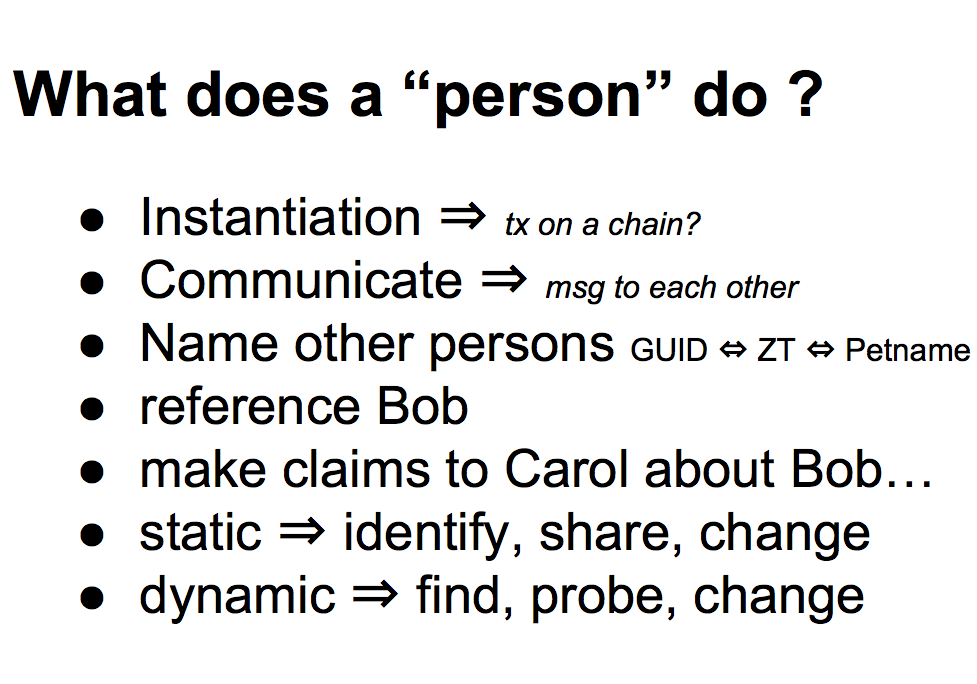

What do these First Class Persons do? Just quickly, they instantiate, they communicate, they name each other. Alice needs to send Bob's name to others, she needs to make claims about Bob, and send those claims on to Carol. As we're making some semblance of claim about these entities, the name has to be a decently good reference. Then there will be the normal IT stuff such as identify, share, change, blah blah.

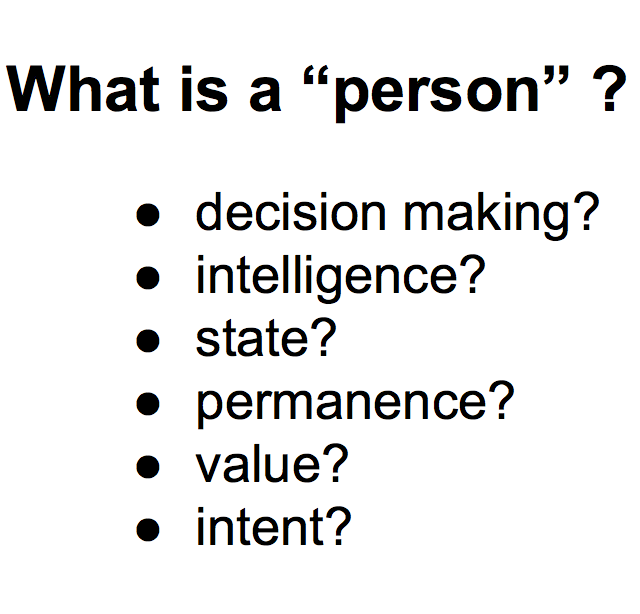

What then is a First Class Person? They have capabilities to make decisions, are apparently intelligent in some sense or other. They have state, they are holding value, they might be holding money or shares or photos, who knows? (Coming back to a question raised in the previous talk) they have intent, which is a legally significant word.

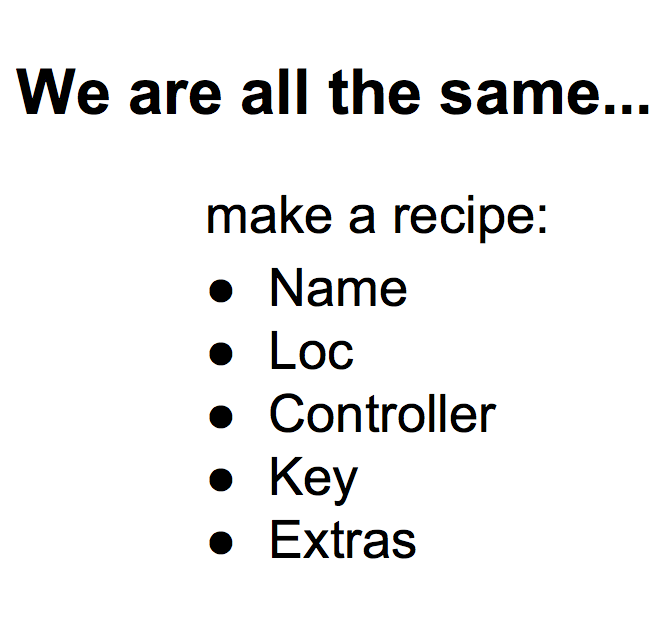

So we're all the same -- we people are the same as smart contracts, as blockchains, etc, and we should be able to make a recipe that describes us. We should be able to collect all these things together and make it such that we all look the same.

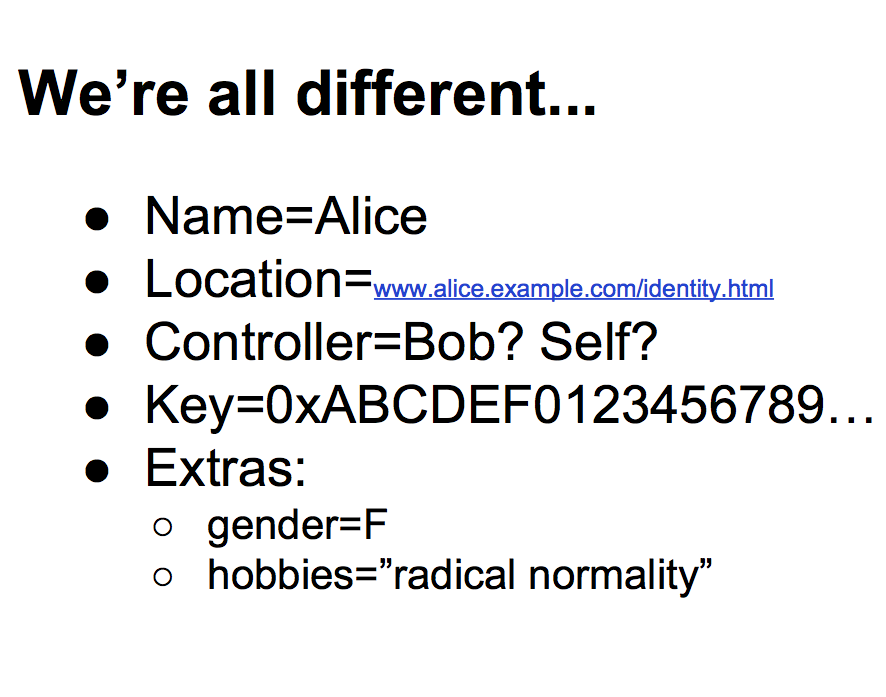

Of course we're all different - the fields in the recipes will all be different, but that's what we IT people know how to do.

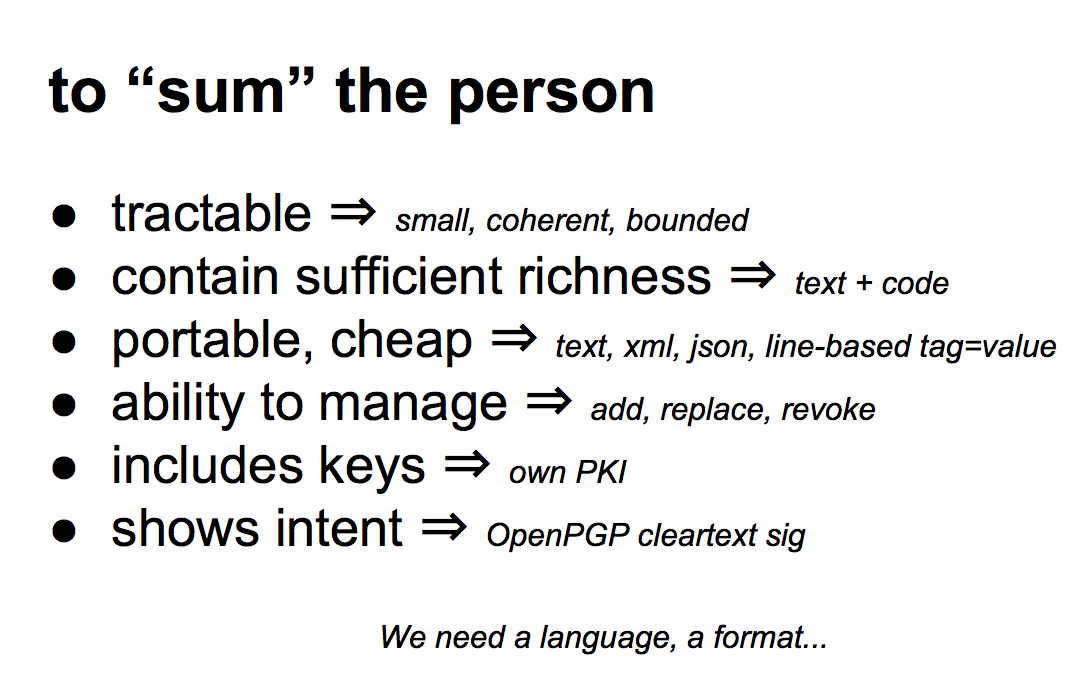

Although we can do this, doing it isn't as easy as saying it. This package has to be tractable; it's got to be relatively small and powerful at the same time. It has to contain text and code at the same time, which is a bit tricky. It has to be accessible and easy to implement, which probably means we don't want XML. It may mean we could do JSON, but I prefer line-based tag-equals-value because anyone can read it, nobody has to worry about it, and it's easy to write a parser. Other things: it has to include its own PKI. It cannot really resort to an external PKI because it needs to be in control of its own destiny, which means it puts its root key inside itself, in a sense more familiar to the OpenPGP people than the X.509 people. And it has to be able to sign things in order to show its intent.
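To show how little code a line-based tag-equals-value parser needs, a minimal sketch follows. The conventions it assumes (one `tag=value` per line, `#` comments, blank lines ignored, split at the first `=`, repeated tags collected into a list) are illustrative choices, not a published format, and the sample package is invented.

```python
def parse_tags(text: str) -> dict:
    """Parse 'tag=value' lines into a dict mapping each tag to a list of values."""
    result = {}
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        tag, sep, value = line.partition("=")  # split at the first '=' only
        if not sep or not tag.strip():
            raise ValueError(f"line {number}: expected tag=value, got {raw!r}")
        result.setdefault(tag.strip(), []).append(value.strip())
    return result


sample = """
# hypothetical issuance package
name=AliceNet
decimals=8
legal=Participants agree to the AliceNet rules.
url=https://example.org/terms?lang=en
"""
tags = parse_tags(sample)
```

Splitting at the first `=` means values may themselves contain `=`, as the `url` line shows, without any quoting rules.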

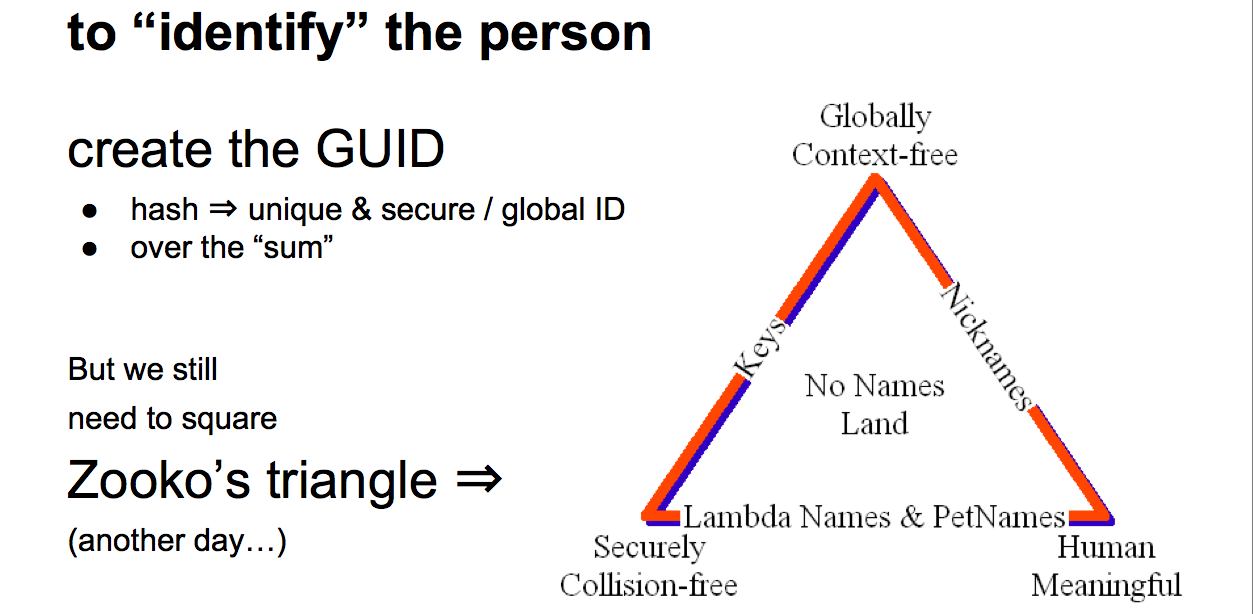

We need an identifier. The easy thing is to take a hash of the package that we've built up. But Zooko's Triangle (and you really need to grok Zooko's Triangle to understand this space) basically says that an identifier can have these attributes -- globally context free, securely collision free, human meaningful -- but you can only reach two of them with a single simple system. Hence we have to take the basic system we invent and then square the triangle to get the other attribute, but that's too much detail for this talk.

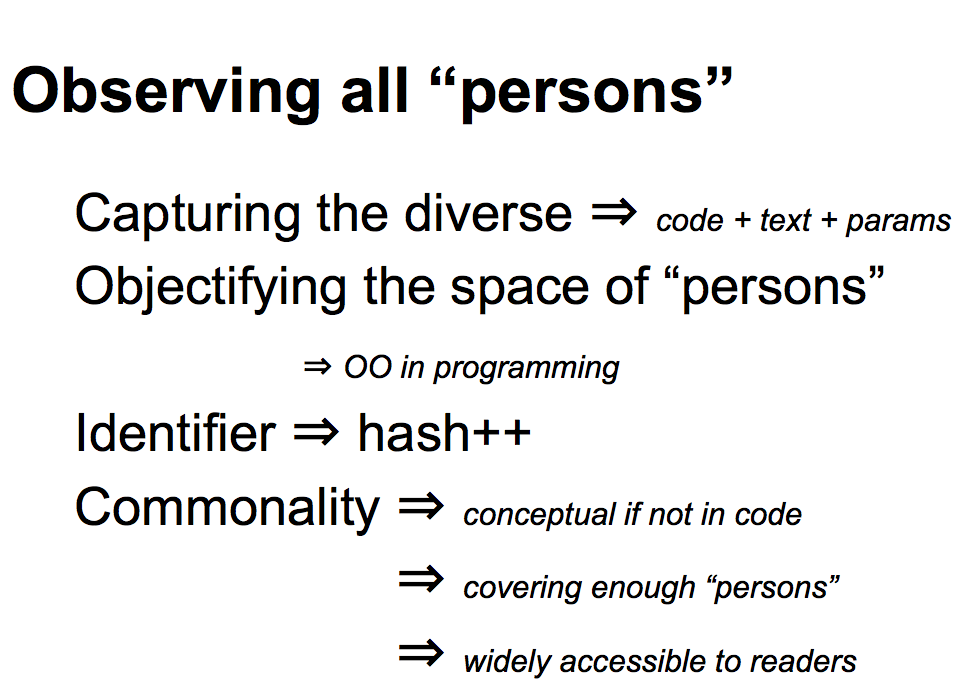

What are we seeing? We're capturing a lot of diversity and wrapping it back into one archetype. Capture the code, text, and params, make them work together, get it instantiated and then get the identifier out. The identifier is probably the easy part, although you'll probably notice I'm skipping something here, which is what we do with that identifier once we've got it. In a sense what we are doing is objectifying: in an OO programming sense we are all objects, and we just need to create the object archetype in order to be able to manipulate all these First Class Persons.
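In OO terms, the archetype could be one type that every First Class Person shares, with the per-kind differences pushed into its fields. A hypothetical sketch: the field names follow elements mentioned in this talk (name, controller, legal text, parameters, code), and the example entries are invented.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FirstClassPerson:
    kind: str                         # "human", "chain", "smart contract", "DAO", ...
    name: str                         # needed to be referred to and to market itself
    controller: Optional[str] = None  # who, if anyone, is in control
    legal_text: str = ""              # terms and conditions, if any
    params: dict = field(default_factory=dict)  # kind-specific recipe fields
    code: str = ""                    # running logic, if any

    def describe(self) -> str:
        """Same interface for every kind; only the field contents differ."""
        ctl = self.controller or "none stated"
        return f"{self.kind} {self.name!r} (controller: {ctl})"


persons = [
    FirstClassPerson("human", "Alice"),
    FirstClassPerson("chain", "mainnet", params={"block_interval_s": 600}),
    FirstClassPerson("smart contract", "Escrow-1", controller="Alice",
                     code="release_on_delivery"),
]
```

The design choice is one shape with differing contents, rather than a class per kind: software that handles one First Class Person handles them all.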

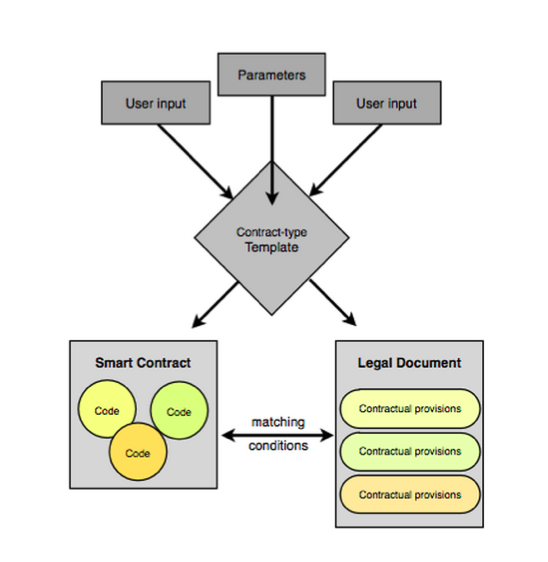

So what does it look like? Another triangle, containing a legal contract, parameters and a smart contract along the bottom. Those three elements are wrapped up and sent into a genesis transaction, into a something. That something might be a blockchain, might be a server, it might not even be what we think of as a transaction or a genesis; it looks approximately like that, so I'm borrowing the buzzword. Out of that package we get an identifier! That is what it looks like today, but I admit it looked a little different yesterday and maybe next week it'll look different again. Some people on the net are already figuring this out. CommonAccord are doing legal contracts, wrapping them up into little tech objects, with a piece of code that they call an object and a matching legal document that relates to it. A user searches through their system for legal clauses, pulls out the ones they want, and composes them into a big contract. Each little legal clause you pull out comes with a bit of smart code, so you end up creating the whole legal text and the whole smart code in the same process.

CommonAccord are basically doing the same thing described - wrapping together a smart contract with a legal document and then creating some form of identifier out of it when they produce their final contract. It's out there!
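A hypothetical sketch of that composition idea: each clause pairs a piece of legal prose with a small piece of code, so assembling a contract produces the numbered legal text and the executable check in the same pass. This does not reproduce CommonAccord's actual data model; the clause names, rules and the `facts` dictionary are made up.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Clause:
    title: str
    text: str                      # the legal prose
    rule: Callable[[dict], bool]   # the matching smart code


def compose(clauses):
    """Build the legal text and one executable check from the same clauses."""
    legal = "\n\n".join(f"{i}. {c.title}\n{c.text}" for i, c in enumerate(clauses, 1))

    def check(facts: dict) -> bool:
        # The contract is satisfied only if every clause's rule holds.
        return all(c.rule(facts) for c in clauses)

    return legal, check


payment = Clause("Payment", "The buyer pays the price in full before delivery.",
                 lambda f: f["paid"] >= f["price"])
delivery = Clause("Delivery", "The seller delivers within 30 days of payment.",
                  lambda f: f["days_to_deliver"] <= 30)
legal, check = compose([payment, delivery])
```

An identifier for the final contract could then be taken as a hash over the composed package, in the same spirit as the genesis identifier discussed earlier in the talk.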

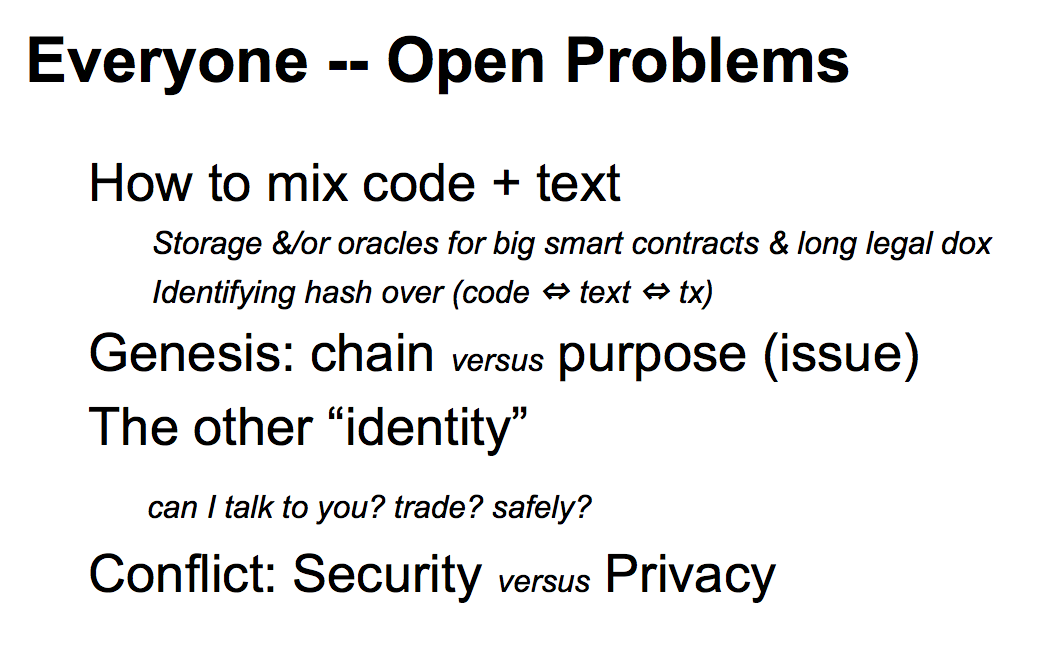

How do we do this? Open problems include how to mix the code and text. Both the code and the text can be very big; derivatives contracts can run to 300 pages. Hence some of the new-generation blockchain projects have figured out they need to add a file store to their architecture.

We need to take the genesis concept and break it out: the genesis should start the chain, not the currency, and we should start the currency using a different transaction. This is already done in some of these systems. Then there is the "other identity" -- I haven't covered whether this is safe, whether you know the other person is who they claim to be, whether there is any recourse, or intent, or even a person behind an identity, or what? We really don't know any more than what the tech says, and (hand wave) that's a subject for another day. There is a conflict in that we're surfacing more and more information, which is great for security as we're locking things down, but it does rather leave aside the question of privacy. Are we ruining things for privacy? Another question for another day.

References!

- Primavera de Filippi, Legal Framework For Crypto-Ledger Transactions

- Mark Friedenbach and Jorge Timón, FreiMarkets

- Grigg, The Ricardian Contract and On the intersection of Ricardian and Smart Contracts

- Nick Szabo, Smart Contracts

- Zooko Wilcox-O'Hearn, Names: Decentralized, Secure, Human-Memorizable: Choose Two

Addendum

December 21, 2014

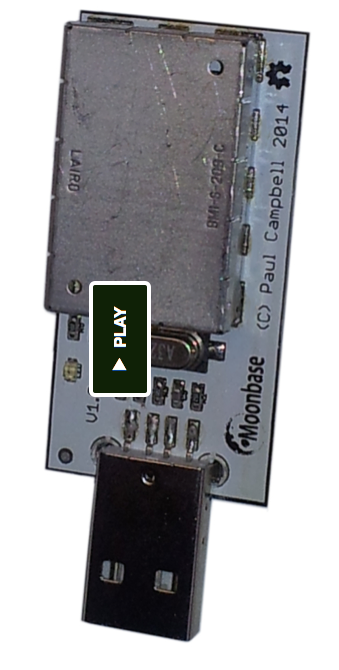

OneRNG -- open source design for your random numbers

Paul of Moonbase has put a plea onto Kickstarter to fund a run of open RNGs. As we all know, having good random numbers is one of those devilishly tricky open problems in crypto. I'd encourage one and all to click and contribute.


For what it's worth, in my opinion, the issue of random numbers will remain devilish & perplexing until we seed hundreds of open designs across the universe and every hardware toy worth its salt also comes with its own open RNG, if only for the sheer embarrassment of not having done so before.

OneRNG is therefore massively welcome:

About this project

After Edward Snowden's recent revelations about how compromised our internet security has become some people have worried about whether the hardware we're using is compromised - is it? We honestly don't know, but like a lot of people we're worried about our privacy and security.

What we do know is that the NSA has corrupted some of the random number generators in the OpenSSL software we all use to access the internet, and has paid some large crypto vendors millions of dollars to make their software less secure. Some people say that they also intercept hardware during shipping to install spyware.

We believe it's time we took back ownership of the hardware we use day to day. This project is one small attempt to do that - OneRNG is an entropy generator, it makes long strings of random bits from two independent noise sources that can be used to seed your operating system's random number generator. This information is then used to create the secret keys you use when you access web sites, or use cryptography systems like SSH and PGP.

Openness is important, we're open sourcing our hardware design and our firmware, our board is even designed with a removable RF noise shield (a 'tin foil hat') so that you can check to make sure that the circuits that are inside are exactly the same as the circuits we build and sell. In order to make sure that our boards cannot be compromised during shipping we make sure that the internal firmware load is signed and cannot be spoofed.
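The quoted description has two independent noise sources feeding a seed. A common way to combine sources, so that the result stays unpredictable as long as either one is good (under the usual assumption that the hash behaves like a random function), is to hash them together. A sketch with SHA-256 follows; the two reader functions are hypothetical stand-ins that simply call `os.urandom` so the code runs anywhere, and none of this is OneRNG's firmware.

```python
import hashlib
import os


def read_source_a(n: int) -> bytes:
    """Hypothetical stand-in for the first hardware noise source."""
    return os.urandom(n)


def read_source_b(n: int) -> bytes:
    """Hypothetical stand-in for the second, independent noise source."""
    return os.urandom(n)


def mix_seed(sample_a: bytes, sample_b: bytes) -> bytes:
    """Combine two samples into a 32-byte seed.

    Length prefixes keep the encoding unambiguous, so ("ab", "") and
    ("a", "b") can never hash the same input.
    """
    h = hashlib.sha256()
    for sample in (sample_a, sample_b):
        h.update(len(sample).to_bytes(4, "big"))
        h.update(sample)
    return h.digest()


seed = mix_seed(read_source_a(64), read_source_b(64))
```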

OneRNG has already blasted through its ask of $10k. It's definitely still worth contributing more because it ensures a bigger run and draws much more attention to this project. As well, we signal to the world:

*we need good random numbers*

and we'll fight aka contribute to get them.

December 04, 2014

MITM watch - sitting in an English pub, get MITM'd

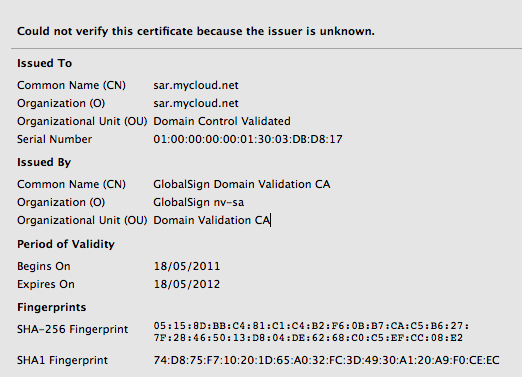

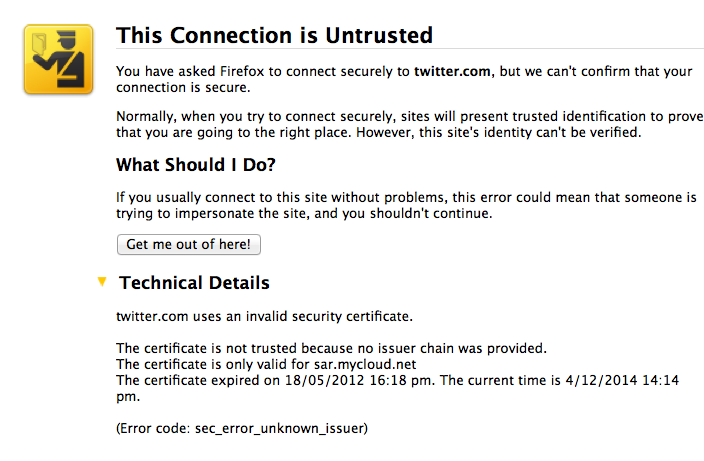

So, sitting in a pub idling till my 5pm, I thought I'd do a quick check on my mail. Someone likes my post on yesterday's rare evidence of MITMs and posts a comment. Nice. I read all comments carefully to strip the spam, so, click click...

Boom, Firefox takes me through the wrestling trick known as the MITM procedure. Once I've signalled my passivity to its immoral arrest of my innocent browsing down main street, I'm staring at the charge sheet.

Whoops -- that's not financialcryptography.com's cert. I'm being MITM'd. For real!

Fully expecting an expiry, a lost exception or some such, I'm shocked! I'm being MITM'd by the wireless here in the pub. A quick check on twitter.com, who of course simply have to secure all the tweetery against all enemies foreign and domestic, gives the same result. Tweets are being spied upon. The horror, the horror.

On reflection, the positive result worked: the warning caught a real attack. One reason for that, on the skeptical side, is that I'm one of the 0.000001% of the planet that has wasted significant years on the business of protecting the planet against the MITM, otherwise known as the secure browsing model (cue acronyms like CA, PKI, SSL here...), so I know exactly what's going on.

How do I judge it all? I'm annoyed, disturbed, but still skeptical as to just how useful this system is. We always knew that it would pick up the true positive; that's how Mozilla designed their GUI, overdoing their approach. As I intimated yesterday, the real problem is whether it works in the presence of a flood of false positives -- claimed attacks that aren't really attacks, just normal errors where you should carry on.

Secondly, to ask: Why is a commercial process in a pub of all places taking the brazen step of MITMing innocent customers? My guess is that users don't care, don't notice, or their platforms are hiding the MITM from them. One assumes the pub knows why: the "free" service they are using is just raping their customers with a bit of secret datamining to sell and pillage.

Well, just another data point in the war against the users' security.

June 15, 2014

Certicom fingered in conspiracy to insert back door in standards -- DUAL_EC patents!

In what is now a long running saga, we have more news on the DUAL_EC backdoor injected into the standards processes. In a rather unusual twist, it appears that Certicom's Dan Brown and Scott Vanstone attempted to patent the backdoor in Dual EC in or around January of 2005. From Tanja Lange & DJB:

... It has therefore been identified by the applicant that this method potentially possesses a trapdoor, whereby standardizers or implementers of the algorithm may possess a piece of information with which they can use a single output and an instantiation of the RNG to determine all future states and output of the RNG, thereby completely compromising its security. The provisional patent application also describes ideas of how to make random numbers available to "trusted law enforcement agents" or other "escrow administrators".

This appears to be before ANSI/NIST finished standardising DUAL_EC as an RNG, that is, during the process. **

Obviously one question arises -- is this a conspiracy between Certicom, NSA and NIST to push out a backdoor? Or is this just the normal incompetent-in-hindsight operations of the military-industrial-standards complex?

It's an important if conspiratorial question because we want to document the modus operandi of a spook intervention into a standards process. We'll have to wait for more facts; the participants will simply deny. One curious fact: the NSA recommended *against* a secrecy order for the patent.

What I'm more curious about today is Certicom's actions. What is the benefit to society and their customers in patenting a backdoor? How can they benefit in a way that aligns the interests of the Internet with the interests of their customers?

Or is this impossible to reconcile? If Certicom is patenting backdoors, the only plausible explanation I can think of is that it intends to wield them. Which means spying and hacking. Certicom is now engaged in the business of spying on ... customers? Foreign governments?

In contrast, I would have said that Certicom's responsibility as a participant in Internet security is to declare and damn an exploit, not bury it in a submarine patent.

If so, what idiot on Certicom's board put it on the path of becoming the Crypto AG of the 21st century?

If so, Certicom is now on the international blacklist of shame. Until questions are answered, do no business with them. Certicom have breached the sacred trust of trade -- to operate in the interests of their customers.

** Edited to remove this statement:

May 26, 2014

Why triple-entry is interesting: when accounting is the weapon of choice

Bill Black gave an interview last year on how the financial system has moved from robustness to criminogenia:

If you can steal with impunity, as soon as you devastate regulation, you devastate the ability to prosecute. And as soon as that happens, in our jargon, in criminology, you make it a criminogenic environment. It just means an environment where the incentives are so perverse that they are going to produce widespread crime. In this context, it is going to be widespread accounting control fraud. And we see how few ethical restraints remain in the most elite banks. You are looking at an underlying economic dynamic where fraud is a sure thing that will make people fabulously wealthy and where you select by your hiring, by your promotion, and by your firing for the ethically worst people at these firms that are committing the frauds.

No prizes for guessing he's talking about the financial system and the failure of the regulators to jail anyone, nor find any bank culpable, nor find any accounting firm that found any bank in trouble before it collapsed into the mercy of the public purse.

But where is the action? Where is the actual fraud taking place? This is the question that defies analysis and therefore allows the fraudsters to lay a merry trail of pointed fingers that curves around and joins itself. Here's the answer.

So in the financial sphere, we are mostly talking about accounting as the weapon of choice. And that is, where you overvalue assets, sometimes you undervalue liabilities. You create vast amounts of fictional income by making really bad loans if you are a lender. This makes you rich through modern executive compensation, and then it causes tremendous losses to the lender.

The first defence against this process is transparency. Which implies the robust availability of clear accounting records -- what really happened? Which is where triple-entry becomes much more interesting, and much more relevant.

In the old days, accounting was the domain of intra-firm transactions. Double entry enabled the growth of the business empire because internal errors could be eliminated by means of the double-links between separate books; clearly, money had to be either in one place or another, it couldn't slip between the cracks any more, so we didn't need to worry so much about external agents deliberately dropping a few entries.

Beyond the firm, it was caveat emptor. Which the world muddled along with for around 700 years until the development of electronic transactions. At this point of evolution from paper to electronic, we lost the transparency of the black & white, and we also lost the brake of inefficiency in transactions between firms. That which was on paper was evidence and accountable to an entire culture called accountants; that which was electronic was opaque except to a new generation of digital adepts.

Say hello to Nick Leeson, say good bye to Barings Bank. The fraud that was possible now exploded beyond imagination.

Triple-entry addresses this issue by adding cryptography to the accounting entry. In effect it locks the transaction into a single electronic record that is shared by three parties: the sender, the receiver and a third party to hold & adjudicate. Crypto makes it easy for them all to hold the same entry; the third party makes it easy to stop the two interested agents from playing games.
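For the technically curious, here is a minimal sketch of the idea in Python, under loud assumptions: the receipt format, the party names and `ISSUER_KEY` are all hypothetical, and an HMAC stands in for the third party's digital signature purely to keep it to the standard library. In a real triple-entry system the issuer would sign with a public key, so sender and receiver could check the seal themselves; in this sketch only the key holder can.

```python
import hashlib
import hmac
import json

# Hypothetical issuer secret. HMAC is a stdlib stand-in for a real
# public-key signature made by the third party.
ISSUER_KEY = b"hypothetical-issuer-secret"


def canonical(entry: dict) -> bytes:
    """Serialise the entry deterministically so every party hashes the same bytes."""
    return json.dumps(entry, sort_keys=True, separators=(",", ":")).encode()


def seal_for(digest: str) -> str:
    """The third party's seal over the entry's digest."""
    return hmac.new(ISSUER_KEY, digest.encode(), hashlib.sha256).hexdigest()


def issue_receipt(entry: dict) -> dict:
    """The third party seals the entry; the sealed receipt is what all three hold."""
    digest = hashlib.sha256(canonical(entry)).hexdigest()
    return {"entry": entry, "digest": digest, "seal": seal_for(digest)}


def check_receipt(receipt: dict) -> bool:
    """Recompute digest and seal; any edit to the entry breaks the match."""
    digest = hashlib.sha256(canonical(receipt["entry"])).hexdigest()
    return digest == receipt["digest"] and hmac.compare_digest(seal_for(digest), receipt["seal"])


entry = {"from": "alice", "to": "bob", "amount": 100, "unit": "EUR"}
receipt = issue_receipt(entry)

# Sender, receiver and third party each keep an independent copy of the same receipt.
books = {party: json.loads(json.dumps(receipt)) for party in ("sender", "receiver", "issuer")}

# One party quietly edits the amount in its own copy...
books["receiver"]["entry"]["amount"] = 10

for party, copy in books.items():
    print(party, check_receipt(copy))  # sender True, receiver False, issuer True
```

The doctored copy no longer matches the other two, which is the whole point: no single party can rewrite the shared entry unnoticed.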

You can see this concept with Bitcoin, which I suggest is a triple-entry system, albeit not one I envisaged. The transaction is held by the sender and the recipient of the currency, and the distributed blockchain plays the part of the third party.

Why is this governance arrangement a step forward? Look at say money laundering. Consider how you would launder funds through bitcoin, a fear claimed by the various government agencies. Simple, send your ill-gotten gains to some exchanger, push the resultant bitcoin around a bit, then cash out at another exchanger.

Simple, except every record is now locked into the blockchain -- the third party. Because it is cryptographic, it is now a record that an investigator can trace through and follow. You cannot hide, you cannot dive into the software system and fudge the numbers, you cannot change the records.
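The "you cannot change the records" point rests on hash chaining. A minimal sketch, assuming a simplified ledger of text records rather than Bitcoin's actual block and transaction formats, and leaving out proof-of-work entirely: each link hashes the previous link's hash together with its own record, so editing any old record breaks the check from that point on. In this toy a forger could simply recompute the later hashes; what stops that in Bitcoin is the work required and the many independent copies of the chain.

```python
import hashlib


def link(prev_hash: str, record: str) -> str:
    """Hash a record together with the hash of the record before it."""
    return hashlib.sha256((prev_hash + "|" + record).encode()).hexdigest()


def build_chain(records):
    """Return (record, hash) pairs; each hash commits to every earlier record."""
    chain, prev = [], "0" * 64  # all-zero placeholder before the first record
    for record in records:
        prev = link(prev, record)
        chain.append((record, prev))
    return chain


def first_bad_link(chain):
    """Walk the chain and return the index of the first broken link, or None."""
    prev = "0" * 64
    for i, (record, stored) in enumerate(chain):
        if link(prev, record) != stored:
            return i
        prev = stored
    return None


# Hypothetical laundering trail: exchanger, shuffle, exchanger.
chain = build_chain([
    "exchanger A -> addr1: 5.0",
    "addr1 -> addr2: 4.9",
    "addr2 -> exchanger B: 4.8",
])
print(first_bad_link(chain))  # None: the trail is intact

# Try to "fudge the numbers" in the middle of the trail.
_, stored = chain[1]
chain[1] = ("addr1 -> addr2: 0.1", stored)
print(first_bad_link(chain))  # 1: the edit is detected
```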

Triple-entry systems such as Bitcoin are so laughably transparent that only the stupidest money launderer would go there, and he would therefore eliminate himself before long. It is fair to say that triple-entry is practically immunised against money laundering, and the question is not what to do about it in, say, Bitcoin, but why the other systems aren't adopting the technique.

And as for money laundering, so goes every other transaction. Transparency using triple-entry concepts has now addressed the chaos of inter-company financial relationships and restored it to a sensible accountable and governable framework. That which double-entry did for intra-company, triple-entry does for the financial system.

Of course, triple-entry does not solve everything. It's just a brick, we still need mortar of systems, the statics of dispute resolution, plans, bricklayers and all the other components. It doesn't solve the ethics failure in the financial system, it doesn't bring the fraudsters to jail.

And, it will take a long time before this idea of cryptographically sealed receipts seeps its way into society. Once it gets hold, it is probably unstoppable, because companies that show accounts solidified by triple-entry will eventually be rewarded with a cheaper cost of capital. But that might take a decade or three.

________

H/t to zerohedge for this article of last year.

May 19, 2014

How to make scientifically verifiable randomness to generate EC curves -- the Hamlet variation on CAcert's root ceremony

It occurs to me that we could modify the CAcert process of verifiably creating random seeds to make it also scientifically verifiable, after the event. (See last post if this makes no sense.)

Instead of bringing a non-deterministic scheme, each participant could bring a deterministic scheme which is kept secret until then. E.g., instead of me using my laptop's webcam, I could use a Gutenberg copy of Hamlet, which I first declare at the event itself.

Another participant could use Treasure Island, a third could use Cien años de soledad.

As no participant knew what the others were going to declare, and the honest players amongst us made a best-efforts pick of a new, statically consistent tome, we can be sure that if there is at least one honest, non-conspiring party, then the result is random.

And now verifiable post facto because we know the inputs.
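One way the Hamlet variation's mixing step could look, as a sketch only: the participant names and placeholder texts are hypothetical, and SHA-256 over per-participant digests is an assumed combiner, not part of any agreed CAcert procedure. The point is the last step: because the inputs are published after the event, anyone can re-run the computation and must get the same seed.

```python
import hashlib


def combine(texts: dict) -> str:
    """Derive one seed from every declared text.

    Participants are processed in sorted order; each name and each text is
    hashed to a fixed-length digest first, so the result depends on every input.
    """
    h = hashlib.sha256()
    for name in sorted(texts):
        h.update(hashlib.sha256(name.encode()).digest())
        h.update(hashlib.sha256(texts[name].encode()).digest())
    return h.hexdigest()


# Placeholders: in the ceremony these would be the complete texts each
# participant declared, e.g. a Project Gutenberg file.
declared = {
    "alice": "<full text of Hamlet>",
    "bob": "<full text of Treasure Island>",
    "carol": "<full text of Cien años de soledad>",
}

seed = combine(declared)
print(seed)

# Post facto: anyone holding the published texts re-runs the computation.
assert combine(dict(declared)) == seed
```

The sketch assumes declarations happen together; a participant who could see the others' choices first could keep swapping books until the seed suited them.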

Does this work? Does it meet all the requirements? I'm not sure because I haven't had time to think about it. Thoughts?

BADA55 or 5ADA55 -- we can verifiably create random numbers

The DJB & Tanja Lange team out of Technische Universiteit Eindhoven, Netherlands, have produced a set of curves to challenge the notion of verifiable randomness. Specifically, they seem to be aiming at the Brainpool curves, which had a stab at producing a new set of curves for elliptic curve cryptography (ECC).

Now, please note: if you don't understand ECC then don't worry, neither do I. But we do get to black-box it like any *useful technology to society*, and in that black-boxing we might ask, nay, we must ask the question: were the seeds fairly chosen? Or,

Verifiably random parameters offer some additional conservative features. These parameters are chosen from a seed using SHA-1 as specified in ANSI X9.62 [X9.62]. This process ensures that the parameters cannot be predetermined. The parameters are therefore extremely unlikely to be susceptible to future special-purpose attacks, and no trapdoors can have been placed in the parameters during their generation. —Certicom SEC 2 2.0 (2010)

Which claim the team set out to challenge:

The name "BADA55" (pronounced "bad-ass") is explained by the appearance of the string BADA55 near the beginning of each BADA55 curve. This string is underlined in the Sage scripts above. We actually chose this string in advance and then manipulated the curve choices to produce this string. The BADA55-VR curves illustrate the fact that, as pointed out by Scott in 1999, "verifiably random" curves do not stop the attacker from generating a curve with a one-in-a-million weakness. The BADA55-VPR curves illustrate the fact that "verifiably pseudorandom" curves with "systematic" seeds generated from "nothing-up-my-sleeve numbers" also do not stop the attacker from generating a curve with a one-in-a-million weakness.

We do not assert that the presence of the string BADA55 is a weakness. However, with a similar computation we could have selected a one-in-a-million weakness and produced curves with that weakness. Suppose, for example, that twist attacks were not publicly known but were known to us; a random 224-bit curve has one chance in a million of being extremely twist-insecure (attack cost below 2^30), and we could have generated a curve with this property, while pretending that this was a "verifiable" curve generated for maximum security.

Which highlights two problems we have with all prior sets of curves: were the curves (seeds) chosen at random, and/or were they chosen to exploit weaknesses we did not know about? The crux here is that if someone does know of a weakness, they can re-run their "verifiably random" process until they get the results they want.
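To see why re-running helps the attacker, here is a toy sketch in Python. It is not X9.62 and not the BADA55 scripts: the hypothetical `derive()` merely hashes a seed with SHA-1, and "the output starts with the hex string `bad`" stands in for a one-in-N property (here about one in 4096, so it runs in a blink). The published seed looks like an innocent labelled counter, and anyone can verify `derive(seed)`; nothing in that verification reveals how many seeds were tried and thrown away.

```python
import hashlib
import itertools


def derive(seed: bytes) -> str:
    """Stand-in for a 'verifiably random' derivation: hash the seed with SHA-1.

    A real procedure would go on to turn the digest into curve coefficients;
    that part is omitted here.
    """
    return hashlib.sha1(seed).hexdigest()


def grind(wanted_prefix: str, label: bytes = b"nothing up my sleeve "):
    """Try seed after seed until the derived value has the property we want."""
    for counter in itertools.count():
        seed = label + str(counter).encode()
        out = derive(seed)
        if out.startswith(wanted_prefix):
            return seed, out, counter + 1


# "Starts with 'bad'" plays the role of a secretly known weakness.
seed, out, tries = grind("bad")
print(seed, out, "found after", tries, "tries")
```

Scale the prefix up to a real one-in-a-million weakness and the search is still cheap for anyone with a computer and a reason.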

Is this realistic? Snowden says it is. The choosers of the main popular set of curves were the NSA & NIST, and as they ain't saying much other than to deny anything they've already been caught with, we have enough evidence to damn the NIST curves.

This is good stuff, BADA55 as a process highlights this very well. But:

We view the terminology "verifiably random" as deceptive. The claimed randomness (a uniform distribution) is not being verified; what is being verified is merely a hash computation. We similarly view the terminology "verifiably pseudorandom" and "nothing up my sleeves" as deceptive.

goes too far. They reproduced the process (presumably) and showed that it did not meet its own claimed standard, but did not explore how to create a fair seed. We do know how to do this, and there is an entire business case for it: the root of a CA. Which gives us at least two answers.

In the CA industry they suggest that hard tech problems be outsourced to a thing called an HSM or Hardware Security Module. This is a hardware device that is built and tested to the highest standards to produce what we need. In this particular case, the random numbers will be generated in an HSM according to a NIST or equivalent standard, and tested according to their very harsh and expensive regimes.

That's the formal, popular, and safe answer, which most CAs use to pass audit [0]. Except, it creates a complicated expensive process which can be perverted by the NSA & friends, as alleged by the Snowden revelations.

At CAcert we did something different. Because we knew that the HSM process was suspect enough to be unreliable, and it had no apparent way to mitigate this risk, we developed our own. In short this is what we do:

- Several trusted people of the community come together, each bringing a random number source of their own choosing. In one ceremony I participated in, I used my laptop's webcam pointed at white cardboard in low light to generate quantum artifacts from the pixels under harsh conditions. I piped this raw photo through SHA-1.

- Each of these personal sources is mixed in with a small, simple custom-written program.

- Each person examines the custom written program to verify it does the job of generating a seed.

Wrap in some governance tricks such as reporting, observation, and construction of hardware & software on the spot with bog-standard components (for destruction later) and we have a complete process. Accepting the assumptions, this design ensures that the seed is random if at least one person has reliably delivered a good input.
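The mixing program can be small indeed. A sketch, assuming SHA-256 to condition each input and XOR to combine them; this is an illustration, not CAcert's actual program, and the example inputs are hypothetical. XOR has the property the ceremony relies on: if even one conditioned input is uniformly random and independent of the rest, the result is uniformly random, whatever the other inputs are. It also assumes, as the governance tricks above are meant to ensure, that every input is fixed before anyone sees the others'.

```python
import hashlib
import os
from functools import reduce


def condition(contribution: bytes) -> bytes:
    """Condition one participant's raw input (e.g. a webcam frame) with SHA-256."""
    return hashlib.sha256(contribution).digest()


def mix(contributions):
    """XOR the conditioned inputs together into a 32-byte seed.

    If at least one digest is uniform and independent of the others,
    the XOR is uniform too, regardless of what the rest contain.
    """
    digests = [condition(c) for c in contributions]
    return reduce(lambda a, b: bytes(x ^ y for x, y in zip(a, b)), digests)


contributions = [
    os.urandom(4096),            # stand-in for one honest participant's webcam frame
    b"\x00" * 4096,              # a lazy or broken source
    b"chosen by a conspirator",  # an attacker-chosen input
]
seed = mix(contributions)
print(seed.hex())
```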

Or so I claim: nothing up at least one person's sleeves means there isn't anything up our collective sleeve.

Granted, there are limitations to this process. *Verifiability* is a direct part of the process, but it is limited to being there, on the day. Thereafter, we are limited to trusting the reports of those who were there. Hence, it isn't a repeatable experiment in the sense of the scientific method; for that we'd need a bit more work.

But quibbles aside about the precise semantics of verifiability, I claim this is good enough for the job. Or, it is as good as it gets. If you combine the Eindhoven process with the CAcert process, then you'll get a set of curves that are reliably and verifiably secure to known current standards.

As good as it gets? If you do that, we'll need a new name for a better, badder set of curves; sadly I can only think of 5A1A55 for Fat-Ass right now.

[0] there is a discordance between the two goals here which I'm wafting past...

April 08, 2014

A very fast history of cryptocurrencies BBTC -- before Bitcoin

Before Bitcoin, there was cryptocurrency. Indeed, it has a long and deep history. If only for the lessons learnt, it is worth studying, and indeed, in my ABC of Bitcoin investing, I consider not knowing anything before the paper as a red flag. Hence, a very fast history of what came before (also see podcasts 1 and 2).

The first known (to me) attempt at cryptocurrencies occurred in the Netherlands, in the late 1980s, which makes it around 25 years ago or 20BBTC. In the middle of the night, the petrol stations in the remoter areas were being raided for cash, and the operators were unhappy putting guards at risk there. But the petrol stations had to stay open overnight so that the trucks could refuel.

Someone had the bright idea of putting money onto the new-fangled smartcards that were then being trialled, and so electronic cash was born. Drivers of trucks were given these cards instead of cash, and the stations were now safer from robbery.

At the same time the dominant retailer, Albert Heijn, was pushing the banks to invent some way to allow shoppers to pay directly from their bank accounts, which eventually became known as POS or point-of-sale.

Even before this, David Chaum, an American cryptographer, had been investigating what it would take to create electronic cash. His views on money and privacy led him to believe that in order to do safe commerce, we would need a token money that would emulate physical coins and paper notes. Specifically, the privacy feature of being able to safely pay someone hand-to-hand, and have that transaction complete safely and privately.

As far back as 1983 or 25BBTC, David Chaum invented the blinding formula, which is an extension of the RSA algorithm still used in the web's encryption. This enables a person to pass a number across to another person, and that number to be modified by the receiver. When the receiver deposits her coin, as Chaum called it, into the bank, it bears the original signature of the mint, but it is not the same number as that which the mint signed. Chaum's invention allowed the coin to be modified untraceably without breaking the signature of the mint, hence the mint or bank was 'blind' to the transaction.
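Chaum's blinding trick fits in a few lines. Below is a toy sketch using textbook RSA with tiny primes and no padding, hopelessly insecure and for illustration only; the coin number and blinding factor are made up. It follows the common textbook formulation, in which the customer blinds the coin number before the mint signs it and strips the blinding off afterwards, so the mint never sees the number it ends up having signed.

```python
from math import gcd

# Textbook RSA with tiny toy primes and no padding: illustration only.
p, q = 61, 53
n = p * q                           # public modulus (3233)
e = 17                              # public exponent
d = pow(e, -1, (p - 1) * (q - 1))   # mint's private exponent (needs Python 3.8+)


def blind(m: int, r: int) -> int:
    """Customer hides coin number m behind a secret random factor r."""
    assert gcd(r, n) == 1
    return (m * pow(r, e, n)) % n


def mint_sign(blinded: int) -> int:
    """The mint signs whatever it is handed, learning nothing about m."""
    return pow(blinded, d, n)


def unblind(signed_blinded: int, r: int) -> int:
    """Dividing out r leaves the mint's signature on the original m."""
    return (signed_blinded * pow(r, -1, n)) % n


def verify(m: int, s: int) -> bool:
    """Anyone can check the mint's signature with the public key (n, e)."""
    return pow(s, e, n) == m


coin = 1234   # hypothetical coin serial number, must be below n
r = 99        # customer's secret blinding factor

blinded = blind(coin, r)            # this is all the mint ever sees
s = unblind(mint_sign(blinded), r)
print(blinded, coin, verify(coin, s))
```

The algebra behind it: the mint returns $(m r^e)^d = m^d r \bmod n$, and multiplying by $r^{-1}$ leaves $m^d$, a valid signature on a number the mint never saw.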

All of this interest and also the Netherlands' historically feverish attitude to privacy probably had a lot to do with David Chaum's decision to migrate to the Netherlands. When working in the late 1980s at CWI, a hotbed of cryptography and mathematics research in Amsterdam, he started DigiCash and proceeded to build his Internet money invention, employing amongst many others names that would later become famous: Stefan Brands, Niels Ferguson, Gary Howland, Marcel "BigMac" van der Peijl, Nick Szabo, and Bryce "Zooko" Wilcox-O'Hearn.

The invention of blinded cash was extraordinary and it caused an unprecedented wave of press attention. Unfortunately, David Chaum and his company made some missteps, and fell foul of the central bank (De Nederlandsche Bank or DNB). The private compromise that they agreed to was that DigiCash's e-cash product would only be sold to banks. This accommodation then led the company on a merry dance attempting to field a viable digital cash through many banks, ending up eventually in bankruptcy in 1998. The amount of attention in the press brought very exciting deals to the table, with Microsoft, Deutsche Bank and others, but David Chaum was unable to use them to get to the next level.

On the coattails of DigiCash there were hundreds of startups per year working in this space, including my own efforts. In the mid 1990s, the attention switched from Europe to North America for two reasons: the Netscape IPO had released a huge amount of VC interest, and Europe had brought in the first regulatory clampdown on digital cash, the 1994 EU Report on Prepaid Cards, which morphed into a reaction against DigiCash.

Yet the first great wave of cryptocurrencies spluttered and died, and was instead overtaken by a second wave of web-based monies. First Virtual was a brief spurt of excitement, to be almost immediately replaced by Paypal, which did more or less the same thing.

The difference? Paypal allowed the money to go from person to person, whereas FV had insisted that to accept money you must "be a merchant," which was a popular restriction with banks and regulators, but people hated it. Paypal also leapt forward by proposing its system as being a hand-to-hand cash, literally: the first versions were on the Palm Pilot, which was extraordinarily popular with geeks. But this geek-focus was quickly abandoned as Paypal discovered that what people -- real users -- really wanted was money on the web browser. Also, having found a willing userbase in the eBay community, its future was more or less guaranteed as long as it avoided the bank/regulatory minefield laid out for it.

As Paypal proved that the web had become the protocol of choice, even for money, Chaum's ideas were more or less forgotten in the wider western marketplace, although the tradition was alive in Russia with WebMoney, and there were isolated pockets of interest in the crypto communities. In contrast, several ventures started up chasing a variant of Paypal's web-hybrid: gold on the web. The company that succeeded initially was e-gold, an American-based operation that had its corporation in Nevis in the Caribbean.

e-gold was a fairly simple idea: you send in your physical gold or 'junk' silver, and they would credit e-gold to your account. Or you could buy new e-gold, by sending a wire to Florida, and they would buy and hold the physical gold. By tramping the streets and winning customers over, the founder managed to get the company into the black and up and growing by around 1999. As e-gold the currency issuer was offshore, it did not require US onshore approval, and this enabled it for a time to target the huge American market of 'goldbugs' and also a growing worldwide community of Internet traders who needed to do cross-border payments. With its popularity on the increase, the independent exchange market exploded into life in 2000, and its future seemed set.

e-gold however ran into trouble for its libertarian ideal of allowing anyone to have an account. While in theory this is a fine concept, the steady stream of ponzis, HYIPs, 'games' and other scams attracted the attention of the Feds. In 2005, e-gold's Florida offices were raided and that was the end of the currency as an effective force. The Feds also proceeded to mop up any of the competitors and exchange operations they could lay their hands on, ensuring the end of the second great wave of new monies.

In retrospect, 9/11 marked a huge shift in focus. Beforehand, the USA was fairly liberal about alternative monies, seeing them as potential business, innovation for the future. After 9/11 the view switched dramatically, albeit slowly; all cryptocurrencies were assumed to be hotbeds of terrorists and drugs dealers, and therefore valid targets for total control. It's probably fair to speculate that e-gold didn't react so well to the shift. Meanwhile, over in Europe, they were going the other way. It had become abundantly clear that the attempt to shut down cryptocurrencies had been too successful: Internet business preferred to base itself in the USA, and there had never been any evidence of the bad things they were scared of. Successive generations of the eMoney law were enacted to open up the field, but being Europeans they never really understood what a startup was, and the lowered but still high barriers remained deal killers.

Which brings us forward to 2008, and the first public posting of the Bitcoin paper by Satoshi Nakamoto.

What's all this worth? The best way I can make this point is an appeal to authority:

Satoshi Nakamoto wrote, on releasing the code:

You know, I think there were a lot more people interested in the 90's, but after more than a decade of failed Trusted Third Party based systems (Digicash, etc), they see it as a lost cause. I hope they can make the distinction that this is the first time I know of that we're trying a non-trust-based system.

Bitcoin is a result of history; when decisions were made, they rebounded along time and into the design. Nakamoto may have been the mother of Bitcoin, but it is a child of many fathers: David Chaum's blinded coins and the fateful compromise with DNB, e-gold's anonymous accounts and the post-9/11 realpolitik, the cypherpunks and their libertarian ideals, the banks and their industrial control policies, these were the whole cloth out of which Nakamoto cut the invention.

And, finally, it must be stressed that almost all the successes and missteps we see here in the growing Bitcoin sector have been seen before. History is not just humming and rhyming, it's singing loudly.

April 06, 2014

The evil of cryptographic choice (2) -- how your Ps and Qs were mined by the NSA

One of the excuses touted for the Dual_EC debacle was that the magical P & Q numbers that were chosen by secret process were supposed to be defaults. Anyone was at liberty to change them.

Epic fail! It turns out that this might have been just that, a liberty, a hope, a dream. From last week's paper on attacking Dual_EC:

"We implemented each of the attacks against TLS libraries described above to validate that they work as described. Since we do not know the relationship between the NIST- specified points P and Q, we generated our own point Q′ by first generating a random value e ←R {0,1,...,n−1} where n is the order of P, and set Q′ = eP. This gives our trapdoor value d ≡ e−1 (mod n) such that dQ′ = P. (Our random e and its corresponding d are given in the Appendix.) We then modified each of the libraries to use our point Q′ and captured network traces using the libraries. We ran our attacks against these traces to simulate a passive network attacker.

In the new paper that measures how hard it was to crack open TLS when corrupted by Dual_EC, the authors changed the Qs to match the P delivered, so as to attack the code. Each of the four libraries they had was in binary form, and it appears that each had to be hard-modified in binary in order to mind their own Ps and Qs.
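The trapdoor in the quoted paper can be shown in miniature. This is a toy analogue, not Dual_EC itself: it swaps the elliptic curve for multiplication modulo a prime, uses the group element directly where Dual_EC takes an x-coordinate, and skips the real generator's truncation of outputs (which in the real attack only adds a small brute-force search). The designer picks a secret `d` and publishes `P = Q^d`, the counterpart of $dQ' = P$; the constants are made up for illustration.

```python
import secrets

# Toy analogue only: a multiplicative group mod a prime stands in for the
# elliptic curve, and outputs are not truncated.
MOD = 2**61 - 1                      # a Mersenne prime, picked for convenience

Q = 3                                # public constant, analogue of the point Q
d = secrets.randbelow(MOD - 2) + 1   # the designer's secret trapdoor
P = pow(Q, d, MOD)                   # published constant, secretly Q^d


def step(state):
    """One round: advance the state using P, emit an output using Q."""
    new_state = pow(P, state, MOD)
    output = pow(Q, new_state, MOD)
    return new_state, output


# An honest user seeds the generator and draws two outputs.
state = secrets.randbelow(MOD)
state, out1 = step(state)
state, out2 = step(state)

# A passive attacker sees only out1 but knows d:
# out1^d = Q^(d*s) = P^s, which is exactly the generator's next internal state.
predicted_state = pow(out1, d, MOD)
predicted_out2 = pow(Q, predicted_state, MOD)
print(predicted_out2 == out2)  # True
```

Whoever chose the constants holds `d`; everyone else just sees two innocent-looking numbers, which is why keeping the standard's defaults matters so much.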

So did (a) the library implementors forget that issue? or (b) NIST/FIPS in its approval process fail to stress the need for users to mind their Ps and Qs? or (c) the NSA knew all along that this would be a fixed quantity in every library, derived from the standard, which was pre-derived from their exhaustive internal search for a special friendly pair? In other words:

"We would like to stress that anybody who knows the back door for the NIST-specified points can run the same attack on the fielded BSAFE and SChannel implementations without reverse engineering.

Defaults, options, choice of any form have always been known as bad for users, great for attackers and a downright nuisance for developers. Here, the libraries did the right thing by eliminating the chance for users to change those numbers. Unfortunately, they, NIST and all points thereafter took the originals without question. Doh!

April 01, 2014

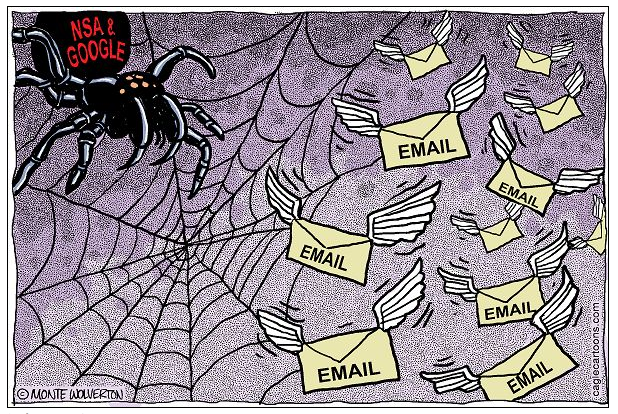

The IETF's Security Area post-NSA - what is the systemic problem?

In the light of yesterday's newly revealed attack by the NSA on Internet standards, what are the systemic problems here, if any?

I think we can question the way the IETF is approaching security. It has taken a lot of thinking on my part to identify the flaw(s), and not a few rants, with many and aggressive defences and counterattacks from defenders of the faith. Where I am thinking today is this:

First the good news. The IETF's Working Group concept is far better at developing general standards than anything we've seen so far (by this I mean ISO, national committees, industry cartels and whathaveyou). However, it still suffers from two shortfalls.

1. the Working Group system is more or less easily captured by the players with the largest budget. If one views standards as the property of the largest players, then this is not a problem. If OTOH one views the Internet as a shared resource of billions, designed to serve those billions back for their efforts, the WG method is a recipe for disenfranchisement. Perhaps apropos, spotted on the TLS list by Peter Gutmann:

Documenting use cases is an unnecessary distraction from doing actual work. You'll note that our charter does not say "enumerate applications that want to use TLS".

I think reasonable people can debate and disagree on the question of whether the WG model disenfranchises the users, because even though a company can out-manoeuvre the open Internet through sheer persistence and money, we can still see it happen. In this, the IETF stands in violent sunlight compared to that travesty of mouldy dark closets, CABForum, which shut users out while industry insiders prepared the base documents in secrecy.

I'll take the IETF any day, except when...

2. the Working Group system is less able to defend itself from a byzantine attack. By this I mean the security concept of an attack from someone who doesn't follow the rules, and breaks them in ways meant to break your model and assumptions. We can suspect byzantine disclosures in the fingered ID:

The United States Department of Defense has requested a TLS mode which allows the use of longer public randomness values for use with high security level cipher suites like those specified in Suite B [I-D.rescorla-tls-suiteb]. The rationale for this as stated by DoD is that the public randomness for each side should be at least twice as long as the security level for cryptographic parity, which makes the 224 bits of randomness provided by the current TLS random values insufficient.
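The 224 bits in that quote come from the layout of the TLS 1.2 Random value in RFC 5246's ClientHello: 32 bytes, of which the first 4 carry `gmt_unix_time` and the remaining 28 are random. A sketch of that layout in Python, with `os.urandom` as an assumed source; which generator actually fills those bytes is left to the TLS library, which is exactly where something like Dual_EC would sit, and presumably every extra byte of public randomness is more raw generator output handed to a passive observer.

```python
import os
import struct
import time


def client_random() -> bytes:
    """Build a TLS 1.2 Random value following RFC 5246:

    struct { uint32 gmt_unix_time; opaque random_bytes[28]; } Random;
    """
    gmt_unix_time = int(time.time()) & 0xFFFFFFFF
    return struct.pack("!I", gmt_unix_time) + os.urandom(28)


r = client_random()
print(len(r) * 8, "bits on the wire,", (len(r) - 4) * 8, "of them random")
# 256 bits on the wire, 224 of them random
```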

Assuming the story as told so far, the US DoD should have added "and our friends at the NSA asked us to do this so they could crack your infected TLS wide open in real time."

Such byzantine behaviour maybe isn't a problem when the industry players are for example subject to open observation, as best behaviour can be forced, and honesty at some level is necessary for long term reputation. But it likely is a problem where the attacker is accustomed to that other world: lies, deception, fraud, extortion or any of a number of other tricks which are the tools of trade of the spies.

Which points directly at the NSA. Spooks being spooks, every spy novel you've ever read will attest to the deception and rule-breaking. So where is this a problem? Well, only in the one area they are interested in: security.

Which is irony itself, as security is the field where byzantine behaviour is our meat and drink. Would the Working Group concept pass muster in an IETF security WG? Whether it does or not depends on whether you think it can defend against the byzantine attack. Likely it will pass by fiat because of the loyalty of those involved; I have been one of those WG stalwarts for a period, so I do see the dilemma. But in the cold hard light of day, who is comfortable supporting a WG that is assisted by NSA employees who will apply all available SIGINT and HUMINT capabilities?

Can we agree or disagree on this? Is there room for reasonable debate amongst peers? I refer you now to these words:

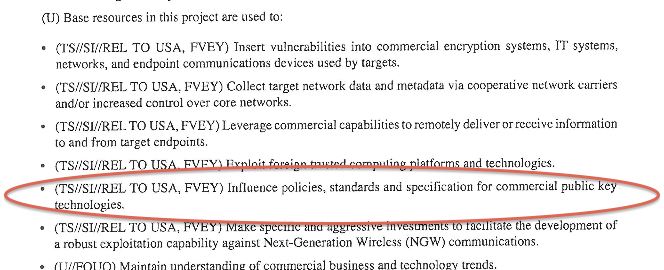

On September 5, 2013, the New York Times [18], the Guardian [2] and ProPublica [12] reported the existence of a secret National Security Agency SIGINT Enabling Project with the mission to “actively [engage] the US and foreign IT industries to covertly influence and/or overtly leverage their commercial products’ designs.” The revealed source documents describe a US $250 million/year program designed to “make [systems] exploitable through SIGINT collection” by inserting vulnerabilities, collecting target network data, and influencing policies, standards and specifications for commercial public key technologies. Named targets include protocols for “TLS/SSL, https (e.g. webmail), SSH, encrypted chat, VPNs and encrypted VOIP.”

The documents also make specific reference to a set of pseudorandom number generator (PRNG) algorithms adopted as part of the National Institute of Standards and Technology (NIST) Special Publication 800-90 [17] in 2006, and also standardized as part of ISO 18031 [11]. These standards include an algorithm called the Dual Elliptic Curve Deterministic Random Bit Generator (Dual EC). As a result of these revelations, NIST reopened the public comment period for SP 800-90.

And as previously written here, the NSA has conducted a long-term programme to breach the standards-based crypto of the net.

As evidence of this claim, we now have *two attacks*, being clear attempts to trash the security of TLS and friends, and we have their own admission of intent to breach, in their own words. There is no shortage of circumstantial evidence that NSA people have pushed, steered, nudged the WGs to make bad decisions.

I therefore suggest we have the evidence to take to a jury. Obviously we won't be allowed to do that, so we have to do the next best thing: use our collective wisdom and make the call in the public court of Internet opinion.

My vote is -- guilty.

One single piece of evidence wasn't enough. Two was enough to believe, but alternate explanations sounded plausible to some. But we now have three solid bodies of evidence. Redundancy. Triangulation. Conclusion. Guilty.

Where this leaves us is in difficulties. We can try to avoid all this stuff by, e.g., avoiding American crypto, but it is a bit broader than that. Yes, they attacked and broke some elements of American crypto (and you know what I'm expecting to fall next). But they also broke the standards process, and that had even more effect on the world.

It has to be said that the IETF security area is now under a cloud. Not only do they need to analyse things back in time to see where it went wrong, but they also need some concept to stop it happening in the future.

The first step, however, is to actually see the clouds, and admit that rain might be coming soon. May the security AD live in interesting times; borrow my umbrella?

March 31, 2014

NSA caught again -- deliberate weakening of TLS revealed!?

In a scandal that is now entertaining that legal term of art "slam-dunk" there is news of a new weakness introduced into the TLS suite by the NSA:

We also discovered evidence of the implementation in the RSA BSAFE products of a non-standard TLS extension called "Extended Random." This extension, co-written at the request of the National Security Agency, allows a client to request longer TLS random nonces from the server, a feature that, if enabled, would speed up the Dual EC attack by a factor of up to 65,000. In addition, the use of this extension allows for attacks on Dual EC instances configured with P-384 and P-521 elliptic curves, something that is not apparently possible in standard TLS.

This extension to TLS was introduced 3 distinct times through an open IETF Internet-Draft process, twice by an NSA employee and a well-known TLS specialist, and once by another. The way the extension works is that it increases the quantity of random numbers fed into the cleartext negotiation phase of the protocol. If the attacker can see more of those random numbers, his task of divining the internal state of the PRNG becomes a lot easier. Indeed, the extension definition states more or less that:

4.1. Threats to TLS

When this extension is in use it increases the amount of data that an attacker can inject into the PRF. This potentially would allow an attacker who had partially compromised the PRF greater scope for influencing the output.

The use of Dual_EC, the previously fingered dodgy standard, makes this possible. Which gives us two compromises of the standards process that, when combined, magically work together.

Our analysis strongly suggests that, from an attacker's perspective, backdooring a PRNG should be combined not merely with influencing implementations to use the PRNG but also with influencing other details that secretly improve the exploitability of the PRNG.

Red faces all round.

February 10, 2014

Bitcoin Verification Latency -- MtGox hit by market timing attack, squeezed between the water of impatience and the rock of transactional atomicity

Fresh on the heels of our release of "Bitcoin Verification Latency -- The Achilles Heel for Time Sensitive Transactions" it seems that Mt.Gox has been hit by exactly that - a market timing attack based on latency. In their own words:

Non-technical Explanation: A bug in the bitcoin software makes it possible for someone to use the Bitcoin network to alter transaction details to make it seem like a sending of bitcoins to a bitcoin wallet did not occur when in fact it did occur. Since the transaction appears as if it has not proceeded correctly, the bitcoins may be resent. MtGox is working with the Bitcoin core development team and others to mitigate this issue.

Technical Explanation:

Bitcoin transactions are subject to a design issue that has been largely ignored, while known to at least a part of the Bitcoin core developers and mentioned on the BitcoinTalk forums. This defect, known as "transaction malleability" makes it possible for a third party to alter the hash of any freshly issued transaction without invalidating the signature, hence resulting in a similar transaction under a different hash. Of course only one of the two transactions can be validated. However, if the party who altered the transaction is fast enough, for example with a direct connection to different mining pools, or has even a small amount of mining power, it can easily cause the transaction hash alteration to be committed to the blockchain.

The bitcoin api "sendtoaddress" broadly used to send bitcoins to a given bitcoin address will return a transaction hash as a way to track the transaction's insertion in the blockchain.

Most wallet and exchange services will keep a record of this said hash in order to be able to respond to users should they inquire about their transaction. It is likely that these services will assume the transaction was not sent if it doesn't appear in the blockchain with the original hash and have currently no means to recognize the alternative transactions as theirs in an efficient way. This means that an individual could request bitcoins from an exchange or wallet service, alter the resulting transaction's hash before inclusion in the blockchain, then contact the issuing service while claiming the transaction did not proceed. If the alteration fails, the user can simply send the bitcoins back and try again until successful.

Which all means what? Well, it seems that while waiting on a transaction to pop out of the block chain, one can rely on a token to track it. And so can one's counterparty. Except, this token was not exactly constructed on a security basis, and the initiator of the transaction can break it, leading to two naive views of the transaction. Which leads to some game-playing.
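To make the bad token concrete, here is a toy model in Python. It is not Bitcoin's serialization or script system: the `ToyTx` fields, the signature values and the helper names are all hypothetical. It leans on one real property of ECDSA: if (r, s) verifies, so does (r, n - s), where n is the curve order (the constant `N` below is the secp256k1 order). Because the id hashes the signature bytes, a third party who flips s produces a different id for the very same payment, while a lookup keyed on what the payment actually does (which coins go where) still finds it.

```python
import hashlib
from dataclasses import dataclass

# secp256k1 group order: if (r, s) is a valid ECDSA signature, so is (r, N - s).
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141

@dataclass(frozen=True)
class ToyTx:
    """Hypothetical, simplified transaction; not Bitcoin's real wire format."""
    inputs: tuple   # coins being spent, e.g. ("prev:0",)
    outputs: tuple  # destinations, e.g. (("alice", 50_000),)
    sig_r: int      # toy signature values, not a real signature
    sig_s: int

    def serialize(self) -> bytes:
        body = repr((self.inputs, self.outputs)).encode()
        sig = self.sig_r.to_bytes(32, "big") + self.sig_s.to_bytes(32, "big")
        return body + sig

    def txid(self) -> str:
        # Double SHA-256 over everything, signature included: this is the weak spot.
        return hashlib.sha256(hashlib.sha256(self.serialize()).digest()).hexdigest()

    def payment_key(self) -> tuple:
        # What the payment means; the signature encoding is deliberately excluded.
        return (self.inputs, self.outputs)

def malleate(tx: ToyTx) -> ToyTx:
    """A third party flips s to N - s: still 'valid', but a new txid."""
    return ToyTx(tx.inputs, tx.outputs, tx.sig_r, N - tx.sig_s)

def find_by_txid(chain, txid) -> bool:
    return any(t.txid() == txid for t in chain)

def find_by_payment(chain, key) -> bool:
    return any(t.payment_key() == key for t in chain)

if __name__ == "__main__":
    sent = ToyTx(("prev:0",), (("alice", 50_000),), sig_r=12345, sig_s=67890)
    chain = [malleate(sent)]  # the malleated copy wins the race into a block
    print(find_by_txid(chain, sent.txid()))            # False: the naive service sees "not sent"
    print(find_by_payment(chain, sent.payment_key()))  # True: the coins did move
```

The design point is that the spent inputs, not the hash, are what the chain actually settles: once those coins appear spent in any accepted entry, the original can never also confirm, so a service that reconciles on inputs and outputs before resending cannot be talked into paying twice.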

Let's be very clear here. There are three components to this break: Latency, impatience, and a bad token. Latency is the underlying physical problem, also known as the coordination problem or the two-generals problem. At a deeper level, as latency on a network is a physical certainty limited by the speed of light, there is always an open window of opportunity for trouble when two parties are trying to agree on anything.

In fast payment systems, that window isn't a problem for humans (as opposed to algos), as good payment systems clear in less than a second, sometimes known as real time. But not so in Bitcoin, where the latency is from 5 minutes up to 120 depending on your assumptions, which leaves an unacceptable gap between the completion of the transaction and the users' expectations. Hence the second component: impatience.

The 'solution' to the settlement-impatience problem then is the hash token that substitutes as a final (triple entry) evidentiary receipt until the block-chain settles. This hash or token used in Bitcoin is broken, in that it is not cryptographically reliable as a token identifying the eventual settled payment.

Obviously, the immediate solution is to fix the hash, which is what Mt.Gox is asking Bitcoin dev team to do. But this assumes that the solution is in fact a solution. It is not. It's a hack, and a dangerous one. Let's go back to the definition of payments, again assuming the latency of coordination.

A payment is initiated by the controller of an account. That payment is like a cheque (or check) that is sent out. It is then intermediated by the system. Which produces the transaction.

But as we all know with cheques, a controller can produce multiple cheques. So a cheque is more like a promise that can be broken. And as we all know with people, relying on the cheque alone isn't reliable enough in and of itself, so the system must resolve the abuses. That fundamental understanding in place, here's what Bitcoin Foundation's Gavin Andresen said about Mt.Gox:

The issues that Mt. Gox has been experiencing are due to an unfortunate interaction between Mt. Gox’s implementation of their highly customized wallet software, their customer support procedures, and their unpreparedness for transaction malleability, a technical detail that allows changes to the way transactions are identified. Transaction malleability has been known about since 2011. In simplest of terms, it is a small window where transaction ID’s can be “renamed” before being confirmed in the blockchain. This is something that cannot be corrected overnight. Therefore, any company dealing with Bitcoin transactions and have coded their own wallet software should responsibly prepare for this possibility and include in their software a way to validate transaction ID’s. Otherwise, it can result in Bitcoin loss and headache for everyone involved.

Ah. Oops. So it is a known problem. So one could make a case that Mt.Gox should have dealt with it, as a known bug.

But note the language above... Transaction malleability? That is a contradiction in terms. A transaction isn't malleable; the very definition of a transaction is that it is atomic: it is or it isn't. ACID, for those who recall the CS classes: atomic, consistent, isolated, durable.

Very simply put, that which is put into the beginning of the block chain calculation cycle /is not a transaction/, whereas that which comes out is, assuming a handwavy number of 10-minute cycles such as 6. Therefore, the identifier of which they speak cannot be a transaction identifier, by definition. It must be an identifier to ... something else!

What's happening here then is more likely a case of cognitive dissonance, leading to a regrettable and unintended deception. Read Mt.Gox's description above again, and the reliance on the word becomes clearer. Users have learnt to demand transactions because we techies taught them that transactions are reliable, by definition; Bitcoin provides the word but not the act.

So the first part of the fix is to change the words back to ones with reliable meanings. You can't simply undefine a term that has been known for 40 years, and expect the user community to follow.

(To be clear, I'm not suggesting what the terms should be. In my work, I simply call what goes in a 'Payment', and what comes out a 'Receipt'. The latter Receipt is equated to the transaction, and in my lesson on triple entry, I often end with a flourish: The Receipt is the Transaction. Which has more poetry if you've experienced transactional pain before, and you've read the whole thing. We all have our dreams :)
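One way to carry the Payment/Receipt vocabulary over to Bitcoin is to make the distinction a type, so that nothing downstream can mistake an unsettled entry for a transaction. A minimal sketch in Python, assuming the 6 × 10-minute rule of thumb from the text; the class and function names are hypothetical, and this is not the author's own system, which avoids pending tokens altogether.

```python
from dataclasses import dataclass
from enum import Enum

BLOCK_INTERVAL_MIN = 10  # Bitcoin's target block spacing, in minutes
CONFIRMATIONS = 6        # the "handwavy" settlement depth from the text

class Status(Enum):
    PAYMENT = "payment"  # an instruction in flight: may be renamed, reissued, or never settle
    RECEIPT = "receipt"  # settled: the Receipt is the Transaction

@dataclass
class Observed:
    payment_key: tuple   # hypothetical: identifies what moved, not how it was signed
    depth: int           # confirmations seen so far (0 = not yet in a block)

def classify(obs: Observed, k: int = CONFIRMATIONS) -> Status:
    """Only an entry buried k blocks deep is treated as a transaction."""
    return Status.RECEIPT if obs.depth >= k else Status.PAYMENT

def expected_wait_minutes(k: int = CONFIRMATIONS) -> int:
    # Average only; real block times vary widely, hence the 5-to-120-minute range above.
    return k * BLOCK_INTERVAL_MIN

if __name__ == "__main__":
    print(classify(Observed(("prev:0",), depth=2)))  # Status.PAYMENT
    print(classify(Observed(("prev:0",), depth=6)))  # Status.RECEIPT
    print(expected_wait_minutes())                   # 60
```

The hour of expected waiting is exactly the window in which impatience goes looking for a token to lean on.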

That still leaves the impatience problem.

Note that this will also affect any other crypto-currency using the same transaction scheme as Bitcoin.

Conclusion

To put things in perspective, it's important to remember that Bitcoin is a very new technology and still very much in its early stages. What MtGox and the Bitcoin community have experienced in the past year has been an incredible and exciting challenge, and there is still much to do to further improve.

When we did our early work on this, we recognised that the market timing attack comes from an implicit misunderstanding of how latency interferes with transactions, and how impatience interferes with both of them. So in our protocols, there is no 'token' that is available to track a pending transaction. This was a deliberate, early design decision, and indeed the servers still just dump and ignore anything they don't understand in order to force the clients away from leaning on unreliable crutches.

It's also the flip side of the triple-entry receipt -- its existence is the full evidence, hence, the receipt is the transaction. Once you have the receipt, you're golden, if not, you're in the mud.

But Bitcoin had a rather extraordinary problem -- the distribution of its consensus on the transaction amongst any large group of nodes that wanted to play. Which inherently made transactional mechanics and latency issues blow out. This is a high price to pay, and only history is going to tell us whether the price is too high or affordable.

January 30, 2014

Hard Truths about the Hard Business of finding Hard Random Numbers

Editorial note: this rant was originally posted here but has now moved to a permanent home where it will be updated with new thoughts.