January 26, 2020

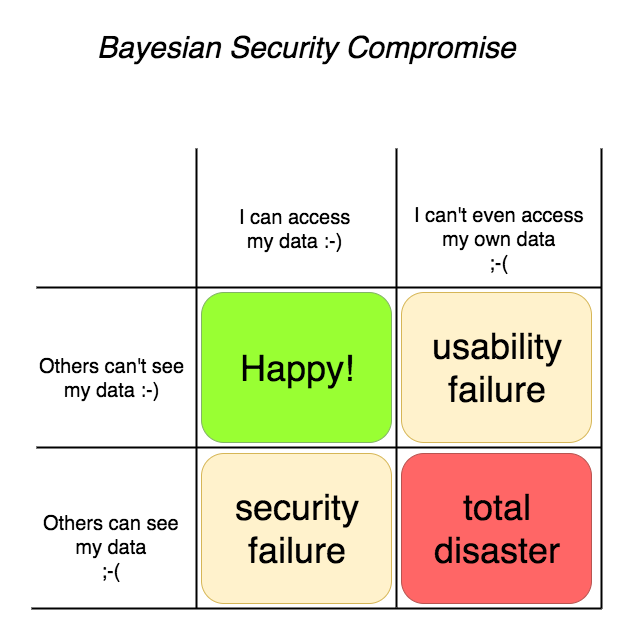

Bayesian Security Compromise

Watching an argument (I started) (on the Internet) about Apple dropping iCloud encryption (allegedly) reminded me of how hard it is to get security & privacy right.

What had actually happened was that Apple had made it possible for you to back up securely to iCloud and leave a key there, so if you got locked out, Apple could help you get your access back. Usability to the fore!

But some were surprised at this - I know I was. So how do you get it your way? The details are complicated and take several pages; clearly, most people will not follow the instructions or get the setup they want without help.

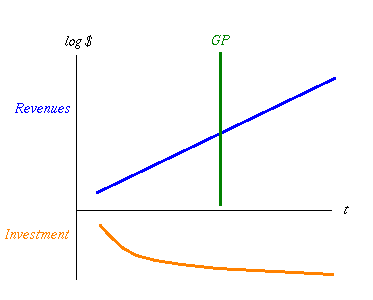

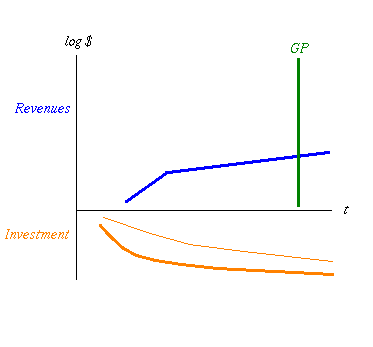

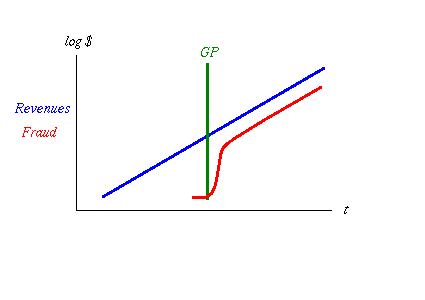

Keeping access to your own data is *important*! Keeping others out of your data is also important. And these two are in collision, tension, across the yellow diagonal above.

Strangely, it has a Bayesian feel to it - there is a strong link like that between the "false positives" and the "false negatives" such that if you dial one down, you actually dial the other up! Bayesian statistics tells you where on the compromise you are, and whether you've blown out the model by being too tight in one particular axis.
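To make the dial concrete, here is a minimal sketch in Python. The scores and thresholds are invented for illustration (they are not Apple's data or method): each account-recovery request gets a score, and the system accepts anything at or above a threshold. Raising the threshold keeps more attackers out but locks more owners out, and lowering it does the reverse.

```python
# Hypothetical recovery-request scores: 0 = surely an attacker, 1 = surely the owner.
# These numbers are assumptions chosen only to illustrate the trade-off.
owner_scores = [0.55, 0.62, 0.70, 0.78, 0.85, 0.91, 0.95]
attacker_scores = [0.10, 0.22, 0.35, 0.48, 0.58, 0.66, 0.74]


def error_rates(threshold):
    """Return (fraction of owners locked out, fraction of attackers let in)."""
    locked_out = sum(s < threshold for s in owner_scores) / len(owner_scores)
    let_in = sum(s >= threshold for s in attacker_scores) / len(attacker_scores)
    return locked_out, let_in


# Sweep the dial from loose to tight and watch the two errors move in opposite directions.
rates = {t: error_rates(t) for t in (0.3, 0.5, 0.7, 0.9)}
for t, (locked_out, let_in) in rates.items():
    print(f"threshold {t:.1f}: owners locked out {locked_out:.0%}, "
          f"attackers let in {let_in:.0%}")
```

No threshold in this toy data gets both errors to zero, which is the compromise the post is describing.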

When you get to scale such that your user base includes people of all persuasions and security & privacy preferences, it might be that you're stuck in that compromise - you can save most of the people most of the time, so an awful lot depends on what most of your users are like.

(Note the discord with the rule that "there is only one mode, and it is secure.")

November 23, 2019

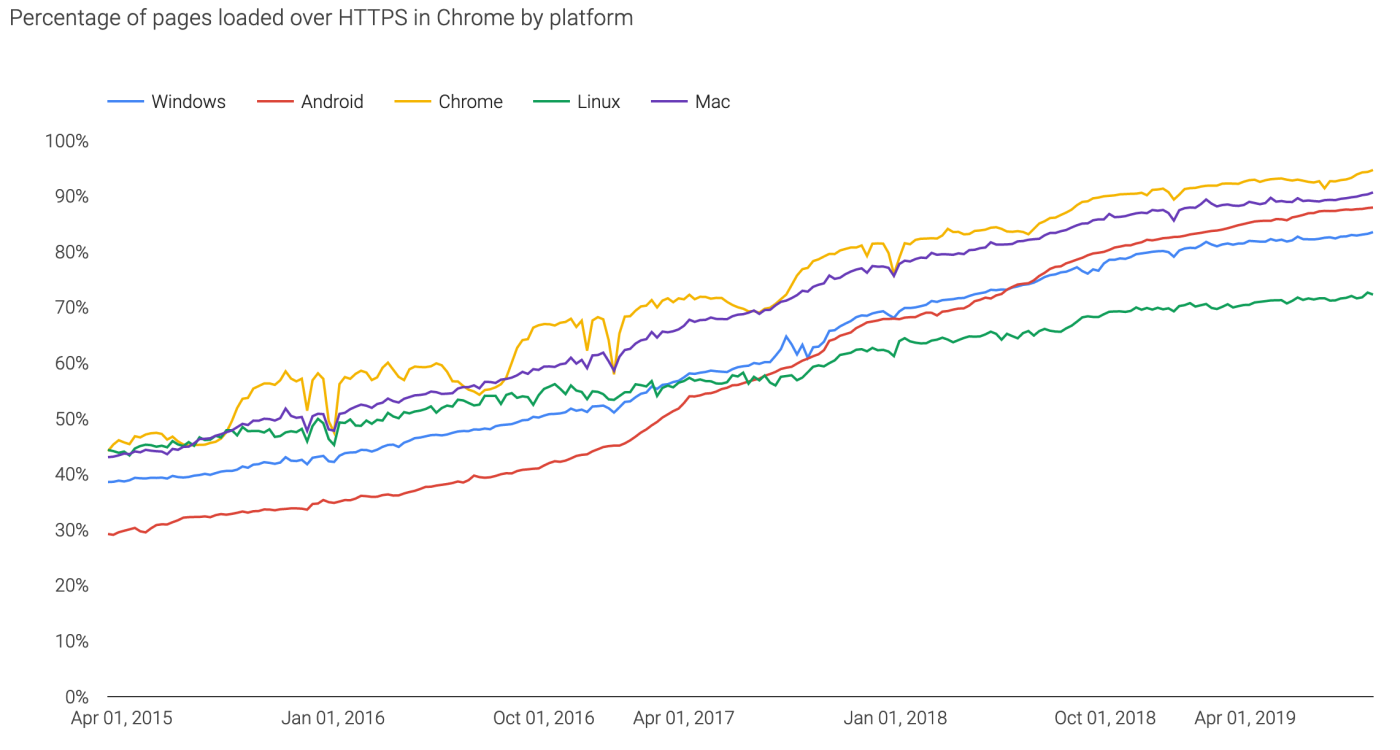

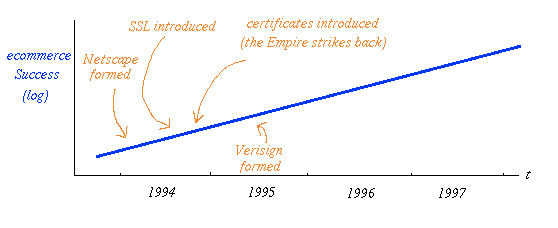

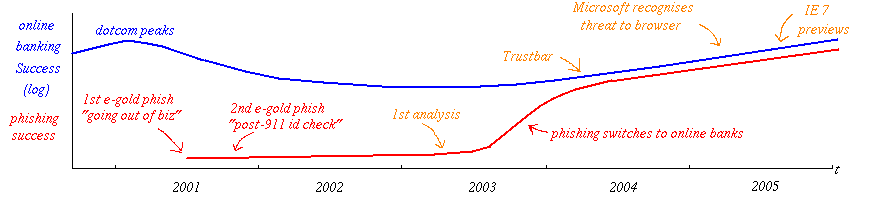

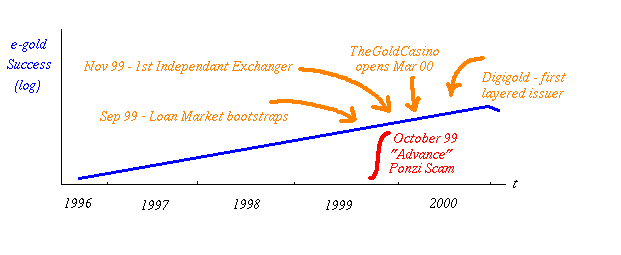

HTTPS reaches 80% - mission accomplished after 14 years

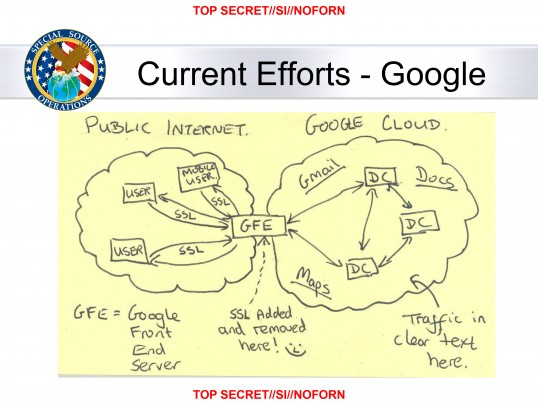

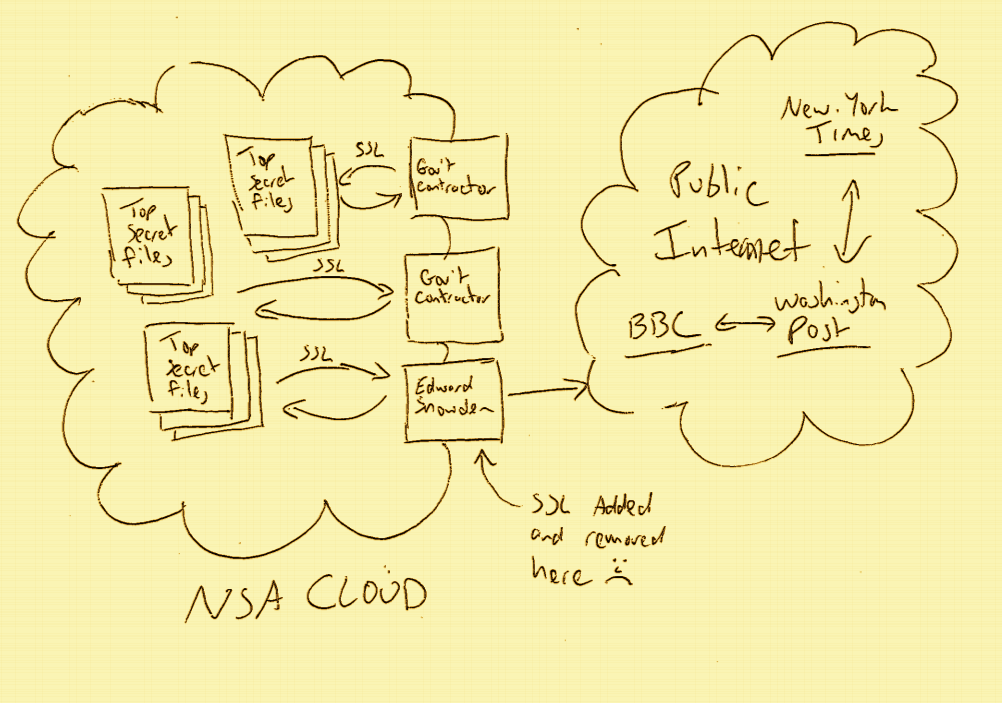

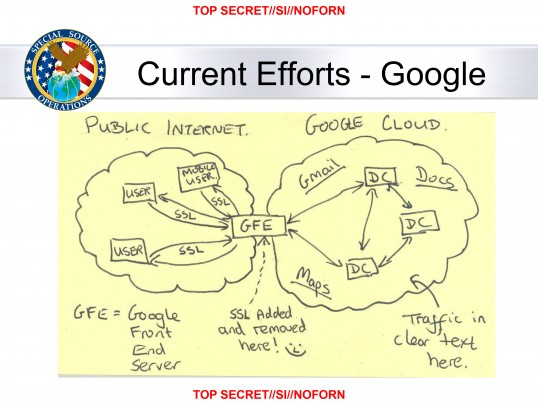

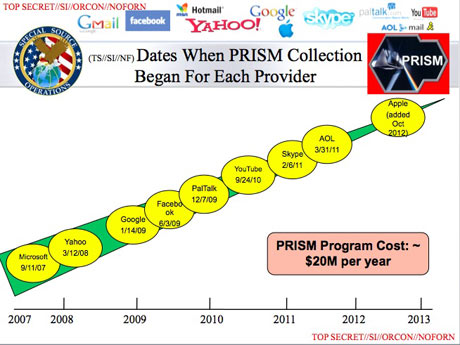

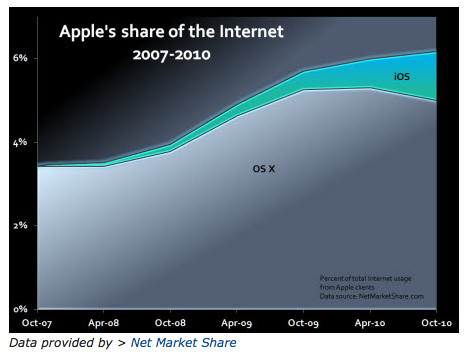

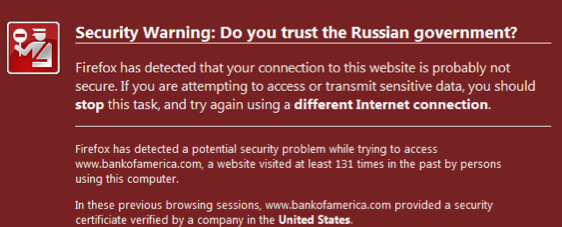

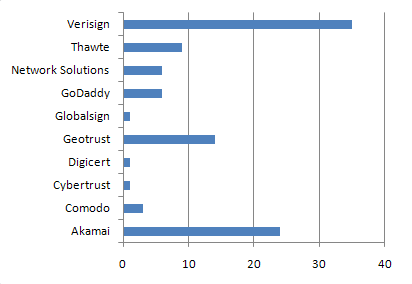

A post on Matthew Green's blog highlights that the Snowden revelations helped the push for HTTPS everywhere.

Firefox shows a similar result, suggesting a web-wide figure of around 80%.

(It should be noted that Google's decision to reward HTTPS users by prioritising it in search results probably helped more than anything, but before you jump for joy at this new-found love for security socialism, note that it isn't working too well in the fake news department.)

The significance of this is that back in around 2005 some of us first worked out that we had to move the entire web to HTTPS. Logic at the time was:

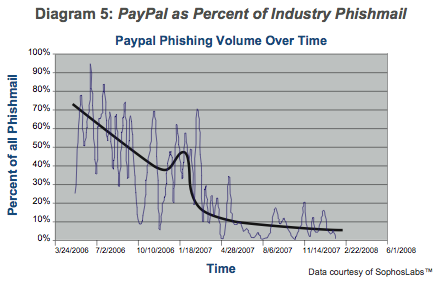

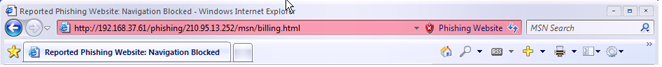

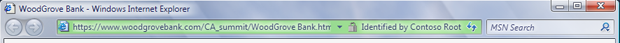

Why is this important? Why do we care that a small group of sites are still running SSL v2? Here's why - it feeds into phishing:

1. In order for browsers to talk to these sites, they still perform the SSL v2 Hello.
2. Which means they cannot talk the TLS hello.
3. Which means that servers like Apache cannot implement TLS features to operate multiple web sites securely through multiple certificates.
4. Which further means that the spread of TLS (a.k.a. SSL) is slowed down dramatically (only one protected site per IP number - schlock!), and
5. this finally means that anti-phishing efforts at the browser level haven't a leg to stand on when it comes to protecting 99% of the web.

Until *all* sites stop talking SSL v2, browsers will continue to talk SSL v2. Which means the anti-phishing features we have been building and promoting are somewhat held back because they don't so easily protect everything.

For the tl;dr: we can't protect the web when HTTP is possible. Having both HTTP and HTTPS as alternatives broke the rule that "there is only one mode, and it is secure." It allowed attackers like phishers to just use HTTP and *pretend* it was secure.
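As a small illustration of what "only one mode" means on the client side, here is a sketch in Python. The function name `require_secure` is hypothetical, not part of any browser or library; the point is only that there is no insecure branch for an attacker to steer the user into.

```python
from urllib.parse import urlsplit


def require_secure(url):
    """Return the URL unchanged if it is HTTPS; otherwise refuse it outright."""
    scheme = urlsplit(url).scheme.lower()
    if scheme != "https":
        # No fallback and no "continue anyway" option: one mode only.
        raise ValueError(f"refusing non-HTTPS URL: {url!r}")
    return url


print(require_secure("https://example.com/login"))
```

A client built this way cannot be talked into plain HTTP, which is the property that the mixed HTTP/HTTPS web lacked.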

The significance of this for me is that, from that point of time until now, we can show that a typical turn around the OODA loop (observe, orient, decide, act) of Information Security Combat took about 14 years. Albeit in the Internet protocol world, but that happens to be a big part of it.

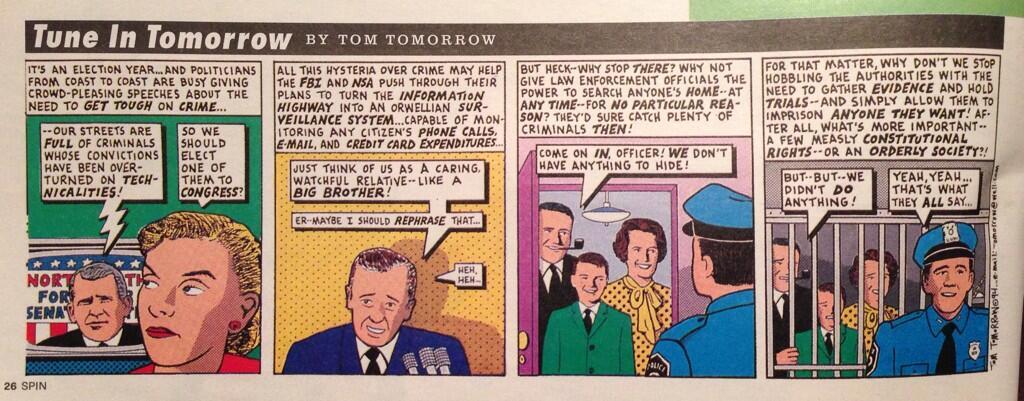

During that time, a new bogeyman turned up - the NSA listening to everything - but that's ok. Decent security models should cover multiple threats, and we don't so much care which threat gets us to a comfortable position.

March 09, 2019

PKI certs are a joke, edition 2943

From the annals of web research:

A thriving marketplace for SSL and TLS certificates...exists on a hidden part of the Internet, according to new research by Georgia State University's Evidence-Based Cybersecurity Research Group (EBCS) and the University of Surrey. ....When these certificates are sold on the darknet, they are packaged with a wide range of crimeware that delivers machine identities to cybercriminals who use them to spoof websites, eavesdrop on encrypted traffic, perform attacks and steal sensitive data, among other activities.

This is a direct consequence of certificate manufacturing, which is a direct consequence of the decision by browser vendors to downgrade the UX for security from essential to invisible.

I don't disagree with that last part as a strategy because as I wrote a long time ago, education is worse than useless. But the consequence of certificate manufacturing follows directly because if the certs can't be seen, they can't be valuable. And if they're not valuable, we need the lowest cost pipeline.

And lowest cost means zero. Which means they are confetti, and Let's Encrypt has the right model. The unfortunate conclusion of this is that if certs are confetti we should not be selling them, we should be self-creating them. But the cartel got its death grip on the browser vendors, and so the unfortunate trade in pricey worthless certs continues.

This is all water under the bridge. But it is an interesting security question: how long will it take for the wider industry to strip out the broken model and replace it entirely? I vaguely recall that HTTP/2 adopted certificates, and as that is the hot new thing of the future, we'll probably need another two decades. Chance missed, let's all go long on phishing.

"One very interesting aspect of this research was seeing TLS certificates packaged with wrap-around services—such as Web design services—to give attackers immediate access to high levels of online credibility and trust," he said. "It was surprising to discover how easy and inexpensive it is to acquire extended validation certificates, along with all the documentation needed to create very credible shell companies without any verification information."

Yup, and "surprisingly easy" extended validation for crooks is another consequence. Without a systemic approach to user security, it's doomed, and EV and other tricks are just fiddling around while Rome and her citizens burn.

January 29, 2019

How does the theory of terrorism stack up against AML? Badly - finally a case in Kenya: Dusit

Finally, an actual financial system & terrorism case lands before the courts, relating to the Dusit attack. Is this a world first? I don't know because this conjunction is so rare, nobody's tracking it.

The essential gripe is that since 9/11 the financial world decided to slap the terrorism label on their compliance process. Yet to no avail. Very few cases, so small that they fall between Bayesian cracks. So misdirected because terrorists have options, and they can adjust their approach to slip under the radar. Backfiring because the terrorists are already outside norms and will do as much damage as needed, thus further harming the financial system.

And so hopeless because your true terrorist doesn't care about being caught afterwards - he's either dead or sacrificed.

Anyway, that's the theory - anti-terrorism applied to the financial system simply won't work. Let's see how the theory stacks against the evidence.

A suspect linked to the Dusit terror attack received Sh9 million from South Africa in three months and sent it to Somalia, the Anti-Terror Police Unit have said. Twenty one people, including a GSU officer, were killed in the January 15 attack. The cash was received through M-Pesa.

So far so good. We have about $90,000 (100 Kenya shillings is 1 USD) sent through M-Pesa, a mobile money system in Kenya, allegedly related to the Dusit attack.

Hassan Abdi Nur has 52 M-Pesa agent accounts. Forty-seven were registered between October and December last year, each with a SIM card. He used different IDs to register the SIM cards.

So (1), the theory of terrorism predicts that the money will be moved safely, whatever the cost. We have a match. In order to move the money, 52 accounts were opened, at the cost of different IDs.

One curiosity here is the cost. In my long running series on the Cost of your Identity Theft we see (or I suggest) an average cost of an Identity set of around $1000. Which would amount to a cost of $52k for 50 odd sets. But this is high for a washed amount of $90k.

Either the terrorists don't care about the cost, or the cost of dodgy ID is lower in Kenya, or the alleged middleman amortised the cost over other deals. Interesting for further investigation but not germane to this case.
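The arithmetic behind that curiosity can be laid out in a few lines of Python. The exchange rate and the $1000 per identity are the rough figures used above; the 10% "tolerable overhead" at the end is purely a hypothetical to show how cheap the IDs would have to be.

```python
KES_PER_USD = 100               # rough rate used in this post
washed_kes = 9_000_000          # Sh9 million, per the police statement
accounts = 52                   # M-Pesa agent accounts, each needing an ID
cost_per_identity_usd = 1_000   # suggested average from the identity theft series

washed_usd = washed_kes / KES_PER_USD
identity_cost_usd = accounts * cost_per_identity_usd
overhead = identity_cost_usd / washed_usd

print(f"washed: ${washed_usd:,.0f}, IDs: ${identity_cost_usd:,.0f}, "
      f"overhead: {overhead:.0%}")

# Hypothetical: if the operator tolerated only a 10% overhead,
# what would each identity set have to cost?
tolerable_overhead = 0.10
implied_cost_per_id = tolerable_overhead * washed_usd / accounts
print(f"implied cost per ID at 10% overhead: ${implied_cost_per_id:,.0f}")
```

An overhead of more than half the washed amount is what makes the $1000 figure look high for this case.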

Then (2), the theory of Bayesian statistics and the "base rate fallacy" predict that no terrorists will ever be caught before the fact based on AML/KYC controls.

Clearly this is a match - the evidence is being compiled from after-the-fact forensics. Now, in this the Kenyan authorities are to be applauded for coming out and actually revealing what's going on. In the western world, there is too much of a tendency to hide behind "national secrets" and thus render outside scrutiny, the democratic imperative, an impossibility. One up for the Kenyans, let's keep this investigation transparent and before the courts.
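For readers who want the base rate fallacy in numbers, here is a minimal sketch in Python. Every figure in it (customer count, number of terrorists, detection and false alarm rates) is an illustrative assumption, not data from the Dusit case or from any AML system.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(terrorist | flagged) by Bayes' rule."""
    true_hits = sensitivity * prior
    false_hits = false_positive_rate * (1.0 - prior)
    return true_hits / (true_hits + false_hits)


# All numbers below are illustrative assumptions.
customers = 10_000_000
terrorists = 10
sensitivity = 0.99           # chance a real terrorist's account gets flagged
false_positive_rate = 0.01   # chance an innocent account gets flagged

prior = terrorists / customers
p = posterior(prior, sensitivity, false_positive_rate)
false_alarms = false_positive_rate * (customers - terrorists)

print(f"P(terrorist | flagged) = {p:.6f}")
print(f"innocent accounts flagged: {false_alarms:,.0f}")
```

Even with a generously accurate filter, the flagged pile is almost entirely innocent customers, so the handful of real cases is lost in it before the fact.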

Next (3). The theory predicts that follow the money is a useless tool.

Ambitham was in constant communication with slain lead attacker Ali Salim Gichunge, who died during the attack, and his spouse Violent Kemunto Omwoyo. [Inspector] Githaiga yesterday said Ambitham’s phone led to his arrest on Tuesday after detectives established his communication with the Gichunges.

The police are following the social graph and arresting anyone involved. Having traced the phones, they then investigated the M-Pesa evidence, which provided many additional and interesting confirmatory facts.

Which is what they should do. But it was the contact information that cracked this case, not the financial flows. The contact information has always been available to them. And, where there is a credible case of terrorism as is in this case, the financial information has never been withheld. Again, the theory matches the evidence: follow the money is useless before the event, only confirmatory after the event.

Finally (4), the theory of unforeseen consequences says that the damage done by unintelligent responses will haunt the future of anti-terrorism efforts.

These are the agents that received the money, which was later withdrawn at the Diamond Trust Bank, Eastleigh branch, before it was wired to Somalia. ... The manager of the bank where Nur was withdrawing the money, Sophia Mbogo, was arrested for failing to report Nur’s suspicious transactions. Nur is said to have made huge withdrawals in short intervals, which Mbogo ought to have reported to relevant authorities, but there is no indication she did so.

Without wishing to compromise the investigation - this looks inept. Eastleigh is the Somali district of Nairobi. It's a bustling centre of trade. In some respects the Somalis are better traders than the Kenyans, and a lot of trade is done. And a lot of that is in cash, because the Kenyan banking system is ... not responsive. Lots of legitimate cash would move in and out of that bank branch.

Given the alleged fact that the money man had 52 M-Pesa accounts, he was certainly aware enough to run under the radar of the branch. Thresholds and actions by banks are no secret, especially to those motivated by terrorism to conduct any crime to find out - bribery, extortion, kidnapping are options.

Maybe there is evidence that the branch or the manager is "in" on the deal. Or maybe there is not, and the Kenyan police have just confirmed the theory that FATF anti-terrorism will do more damage. They've sent a message to all branches to drown their customers in pointless compliance, and to not cooperate with the police.

The Kenyan police had better get a clear and undeniable conviction against the branch manager, or they are going to rue the day. The next terrorist attack will surely be harder.

January 11, 2019

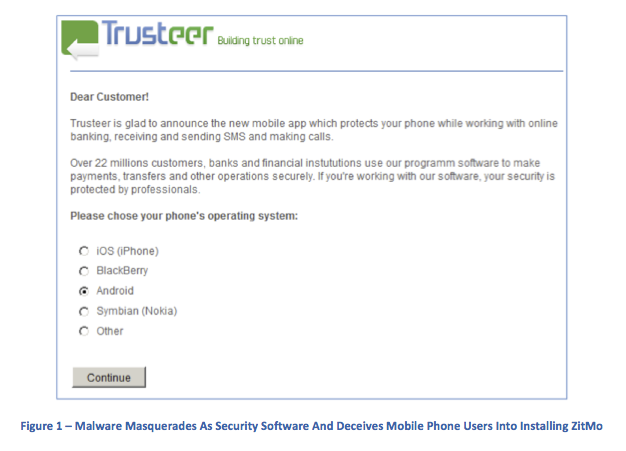

Gresham's Law thesis is back - Malware bid to oust honest miners in Monero

7 years after we called the cancer that is criminal activity in Bitcoin-like cryptocurrencies, here comes a report that suggests that 4.3% of Monero mining is siphoned off by criminals.

A First Look at the Crypto-Mining Malware Ecosystem: A Decade of Unrestricted Wealth

Sergio Pastrana, Universidad Carlos III de Madrid, spastran@inf.uc3m.es

Guillermo Suarez-Tangil, King’s College London, guillermo.suarez-tangil@kcl.ac.uk

Abstract: Illicit crypto-mining leverages resources stolen from victims to mine cryptocurrencies on behalf of criminals. While recent works have analyzed one side of this threat, i.e.: web-browser cryptojacking, only white papers and commercial reports have partially covered binary-based crypto-mining malware. In this paper, we conduct the largest measurement of crypto-mining malware to date, analyzing approximately 4.4 million malware samples (1 million malicious miners), over a period of twelve years from 2007 to 2018. Our analysis pipeline applies both static and dynamic analysis to extract information from the samples, such as wallet identifiers and mining pools. Together with OSINT data, this information is used to group samples into campaigns. We then analyze publicly-available payments sent to the wallets from mining-pools as a reward for mining, and estimate profits for the different campaigns. Our profit analysis reveals campaigns with multimillion earnings, associating over 4.3% of Monero with illicit mining. We analyze the infrastructure related with the different campaigns, showing that a high proportion of this ecosystem is supported by underground economies such as Pay-Per-Install services. We also uncover novel techniques that allow criminals to run successful campaigns.

This is not the first time we've seen confirmation of the basic thesis in the paper Bitcoin & Gresham's Law - the economic inevitability of Collapse. Anecdotal accounts suggest that in the period of late 2011 and into 2012 there was a lot of criminal mining.

Our thesis was that criminal mining begets more, and eventually pushes out the honest business in all its forms, from mining to trade.

Testing the model: Mining is owned by Botnets

Let us examine the various points along an axis from honest to stolen mining: 0% botnet mining to 100% saturation. Firstly, at 0% of botnet penetration, the market operates as described above, profitably and honestly. Everyone is happy.

But at 0%, there exists an opportunity for near-free money. Following this opportunity, one operator enters the market by turning his botnet to mining. Let us assume that the operator is a smart and careful crook, and therefore sets his mining limit at some non-damaging minimum value such as 1% of total mining opportunity. At this trivial level of penetration, the botnet operator makes money safely and happily, and the rest of the Bitcoin economy will likely not notice.

However we can also predict with confidence that the market for botnets is competitive. As there is free entry in mining, an effective cartel of botnets is unlikely. Hence, another operator can and will enter the market. If a penetration level of 1% is non-damaging, 2% is only slightly less so, and probably nearly as profitable for the both of them as for one alone.

And, this remains the case for the third botnet, the fourth and more, because entry into the mining business is free, and there is no effective limit on dishonesty. Indeed, botnets are increasingly based on standard off-the-shelf software, so what is available to one operator is likely visible and available to them all.
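The entry argument quoted above can be played out as a toy simulation in Python. This is a sketch under stated assumptions, not the model from the paper: the reward, the honest cost per unit of hashpower, and the botnet size are invented numbers, rewards are split pro rata by hashpower, botnets pay nothing for their (stolen) resources, and honest miners leave as soon as they would run at a loss.

```python
REWARD = 100.0        # total mining reward per period (arbitrary units, assumed)
HONEST_COST = 0.9     # cost per unit of honest hashpower per period (assumed)
BOTNET_SIZE = 1.0     # hashpower each new botnet brings; its cost is ~0 (stolen)


def simulate(periods, honest=100.0):
    """Return the botnet share of total hashpower after each period."""
    botnet = 0.0
    history = []
    for _ in range(periods):
        botnet += BOTNET_SIZE  # free entry: one more operator joins each period
        # Honest miners only stay while revenue per unit covers their cost:
        # REWARD / (honest + botnet) >= HONEST_COST.
        sustainable = max(0.0, REWARD / HONEST_COST - botnet)
        honest = min(honest, sustainable)
        history.append(botnet / (honest + botnet))
    return history


shares = simulate(120)
print(f"after 1 botnet:    {shares[0]:.1%}")
print(f"after 50 botnets:  {shares[49]:.1%}")
print(f"after 120 botnets: {shares[-1]:.1%}")
```

Because the botnets' costs are near zero, nothing in this toy model ever makes entry unprofitable for them, so the share only moves one way while honest miners are squeezed out.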

What stopped it from happening in 2012 and onwards? Consensus is that ASICs killed the botnets. Because serious mining firms moved to using large custom rigs of ASICs, and as these were so much more powerful than any home computer, they effectively knocked the criminal botnets out of the market. Which the new paper acknowledged:

... due to the proliferation of ASIC mining, which uses dedicated hardware, mining Bitcoin with desktop computers is no longer profitable, and thus criminals’ attention has shifted to other cryptocurrencies.

Why is botnet mining back with Monero? Presumably because Monero uses an ASIC-resistant algorithm that is best served by GPUs. It is also a heavy privacy coin, which works nicely for honest people with privacy problems but also works well to hide criminal gains.

February 26, 2018

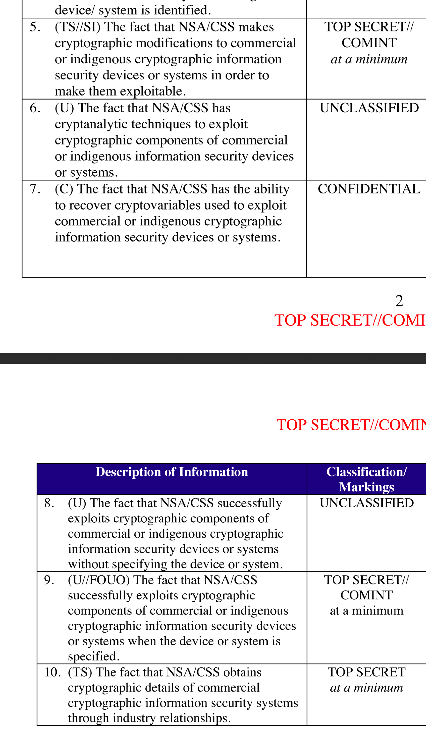

Epidemic of cryptojacking can be traced to escaped NSA superweapon

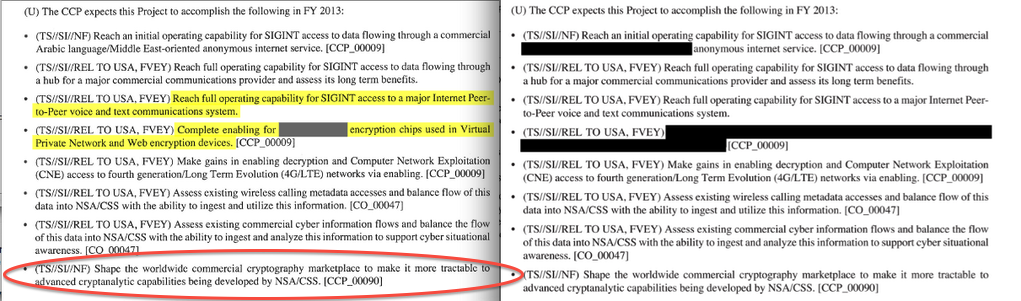

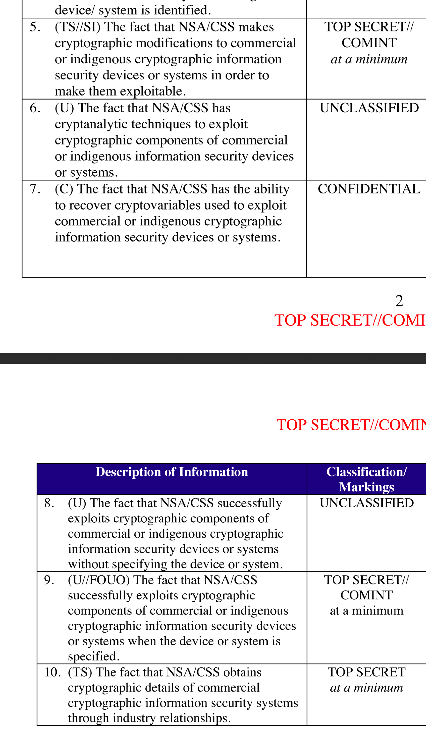

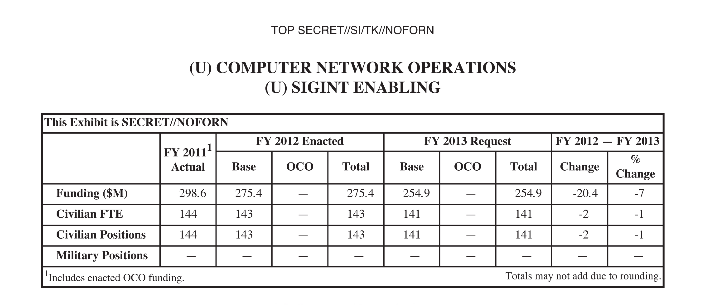

Boingboing writes on the connection between two of the themes often grumbled about in this blog: that Bitcoin muffed the incentives and encourages destructive and toxic behaviour, and that the NSA is the agency that as policy weakens our Internet.

The epidemic of cryptojacking malware isn't merely an outgrowth of the incentive created by the cryptocurrency bubble -- that's just the motive, and the all-important means and opportunity were provided by the same leaked NSA superweapon that powered last year's Wannacry ransomware epidemic.

It all started when the Shadow Brokers dumped a collection of NSA cyberweapons that the NSA had fashioned from unreported bugs in commonly used software, including versions of Windows. The NSA discovered these bugs and then hoarded them, rather than warning the public and/or the manufacturers about them, in order to develop weapons that turned these bugs into attacks that could be used against the NSA's enemies.

This is only safe if neither rival states nor criminals ever independently rediscover the same bugs and use them to attack your country (they do, all the time), and if your stash of cyberweapons never leaks (oops).

Discovering the subtle bugs the NSA weaponized is sophisticated work that can only be performed by a small elite of researchers; but using these bugs is something that real dum-dums can do, as was evidenced by the hamfisted Wannacry epidemic.

Enter the cryptocurrency bubble: turning malware into money has always been tough. Ransomware criminals have to set up whole call-centers full of tech-support people who help their victims buy the cryptocurrency used to pay the ransom. But cryptojacking cuts out the middleman, stealing your computer to directly generate cash for the malware author. As long as cryptocurrencies continue to inflate, this is a great racket.

Wannamine is a cryptojacker that uses Eternalblue, the same NSA exploit as Wannacry. It's been around since last October, and it's on the rise, extracting Monero from victims' computers.

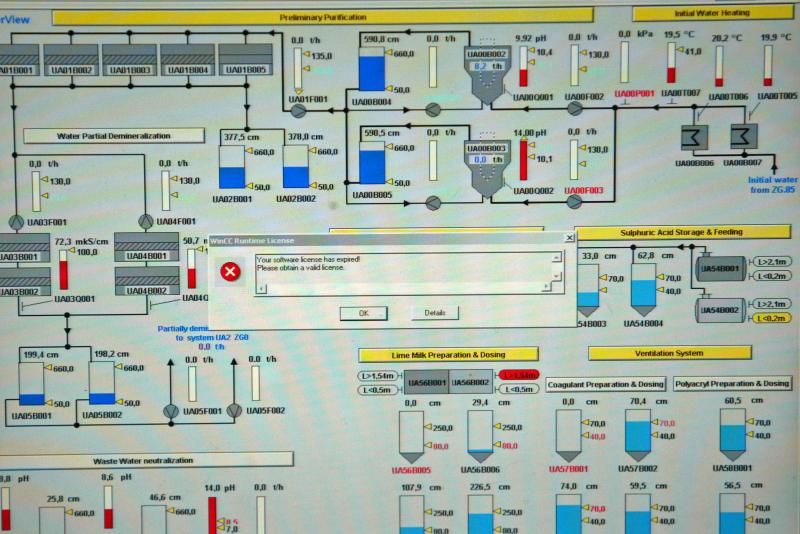

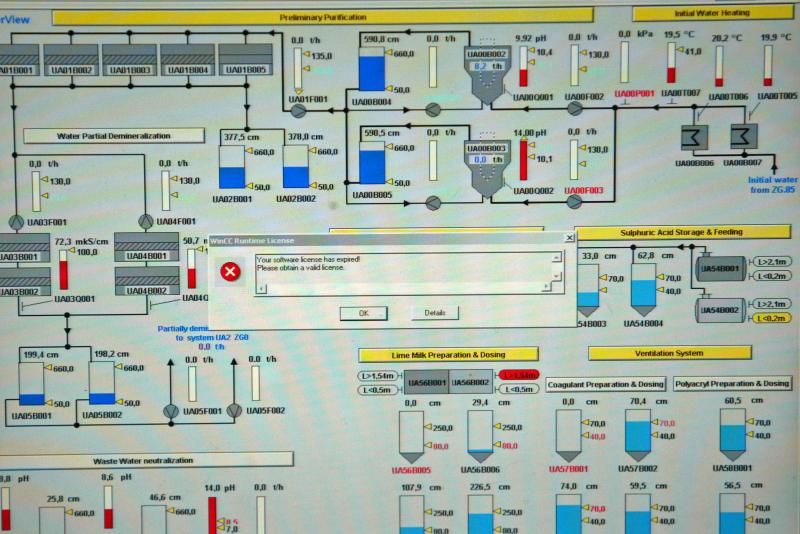

What's more, it's a cryptojacker written by a dum-dum, and it is so incontinent that it slows down critical computers to the point of uselessness, shutting down important IT infrastructure.

WannaMine doesn’t resort to EternalBlue on its first try, though. First, WannaMine uses a tool called Mimikatz to pull logins and passwords from a computer’s memory. If that fails, Wannamine will use EternalBlue to break in. If this computer is part of a local network, like at a company office, it will use these stolen credentials to infect other computers on the network.

The use of Mimikatz in addition to EternalBlue is important “because it means a fully patched system could still be infected with WannaMine,” York said. Even if your computer is protected against EternalBlue, then, WannaMine can still steal your login passwords with Mimikatz in order to spread.

Cryptocurrency Mining Malware That Uses an NSA Exploit Is On the Rise [Daniel Oberhaus/Motherboard]

February 20, 2018

Tesla’s cloud was used by hackers to mine cryptocurrency

Just because I get the photo op, here's The Verge on Tesla's operations being cryptojacked.

Tesla’s cloud account was hacked and used to mine cryptocurrency, according to a security research firm. Hackers gained access to the electric car company’s Amazon cloud account, where they were able to view “sensitive data” such as vehicle telemetry.

...

According to RedLock, using Tesla’s cloud account to mine cryptocurrency is more valuable than any data stored within. The cybersecurity firm said in a report released Monday that it estimates 58 percent of organizations that use public cloud services, such as AWS, Microsoft Azure, or Google Cloud, have publicly exposed “at least one cloud storage service.” Eight percent have had cryptojacking incidents.

“The recent rise of cryptocurrencies is making it far more lucrative for cybercriminals to steal organizations’ compute power rather than their data,” RedLock CTO Gaurav Kumar told Gizmodo. “In particular, organizations’ public cloud environments are ideal targets due to the lack of effective cloud threat defense programs. In the past few months alone, we have uncovered a number of cryptojacking incidents including the one affecting Tesla.”

January 04, 2018

Hackers selling access to Aadhaar

TRIBUNE INVESTIGATION — SECURITY BREACH

Rs 500, 10 minutes, and you have access to billion Aadhaar details

Group tapping UIDAI data may have sold access to 1 lakh service providers

Rachna Khaira

Tribune News Service

Jalandhar, January 3

It was only last November that the UIDAI asserted that “Aadhaar data is fully safe and secure and there has been no data leak or breach at UIDAI.” Today, The Tribune “purchased” a service being offered by anonymous sellers over WhatsApp that provided unrestricted access to details for any of the more than 1 billion Aadhaar numbers created in India thus far.

It took just Rs 500, paid through Paytm, and 10 minutes in which an “agent” of the group running the racket created a “gateway” for this correspondent and gave a login ID and password. Lo and behold, you could enter any Aadhaar number in the portal, and instantly get all particulars that an individual may have submitted to the UIDAI (Unique Identification Authority of India), including name, address, postal code (PIN), photo, phone number and email.

What is more, The Tribune team paid another Rs 300, for which the agent provided “software” that could facilitate the printing of the Aadhaar card after entering the Aadhaar number of any individual.

When contacted, UIDAI officials in Chandigarh expressed shock over the full data being accessed, and admitted it seemed to be a major national security breach. They immediately took up the matter with the UIDAI technical consultants in Bengaluru.

Sanjay Jindal, Additional Director-General, UIDAI Regional Centre, Chandigarh, accepting that this was a lapse, told The Tribune: “Except the Director-General and I, no third person in Punjab should have a login access to our official portal. Anyone else having access is illegal, and is a major national security breach.”

...

June 16, 2017

Identifying as an artist - using artistic tools to generate your photo for your ID

Copied without comment:

Artist says he used a computer-generated photo for his official ID card

A French artist says he fooled the government by using a computer-generated photo for his national ID card.

Raphael Fabre posted on his website and Facebook what he says are the results of his computer modeling skills on an official French national ID card that he applied for back in April.

On April 7, 2017, I applied for an identity card at the town hall of the 18th arrondissement. All the papers requested for the card were legal and authentic, the application was accepted, and I now have my new French identity card.

The photo I submitted for this application is a 3D model made on a computer, using several different software packages and techniques used for special effects in cinema and in the video game industry. It is a digital image, in which the body is absent, the result of artificial processes. The image meets the official requirements for the card: it is a good likeness, it is recent, and it satisfies all the criteria for framing, lighting, background and contrast. The document that most officially validates my identity therefore now carries an image of me that is practically virtual, a video game version, a fiction. The portrait, the photo booth and the application receipt were shown at the r-2 Gallery for the Agora exhibition.

The image is 100 percent artificial, he says, made with special-effects software usually used for films and video games. Even the top of his body and clothes are computer-generated, he wrote (in French) on his website.

But it worked, he says. He followed guidelines for photos and made sure the framing, lighting, and size were up to government standards for an ID. And voila: He now has what he says is a real ID card with a CGI picture of himself.

"Absolutely everything is retouched, modified, and idealized"

He told Mashable France that the project was spurred by his interest in discerning what's artificial and real in the digital age. "What interests me is the relationship that one has to the body and the image ... absolutely everything is retouched, modified, and idealized. How do we see the body and identity today?" he said.

In an email, he said that the French government isn't aware yet of his project that just went up on Facebook earlier this week, but "it is bound to happen."

Before he received the ID, the CGI portrait and his application were on display at an Agora exhibit in Paris through the beginning of May.

Now, if the ID is real, it looks like his art was impressive enough to fool the French government.

February 19, 2017

N Reasons why Searching Electronic Devices makes Everyone Unsafe.

The current practice of searching electronic devices makes everyone less safe. Here are several reasons.

1. People's devices will often include their login parameters to online banking or <shudder> digital cash accounts such as Bitcoin. The presence of all this juicy bank account and digital cash information is going to corrupt the people doing the searching work, turning them to seizure.

In the age when security services might detain you until you decrypt your hard drive, or border guards might threaten to deny you entry until you reveal your phone’s PIN, it is only a matter of time before the state authorities discover what Bitcoin hardware wallets are (maybe they did already). When they do, what can stop them from forcing you to unlock and reveal your wallet?

I'm not saying may, I'm saying will. And before you say "oh, but our staff are honest and resistant to corruption," let me say this: you're probably wrong and you just don't know it. Most countries, including the ones currently experimenting with searching techniques, have corruption in them, the only thing that varies is the degree and location.

As we know from the war on drugs, the corruption is pretty much aligned positively with the value that is at risk. As border guards start delving into travellers' electronic devices in the constitution-free zone of the border, they've opened up the travellers' material and disposable wealth. This isn't going to end well.

2. As a response to corruption, real or perceived, arising from the ability of authorities and officials to see and seize these funds, users and travellers will move away from the safer electronic funding systems to less safe alternatives. In the extreme, that means cash, but also consider this a clear signal to use Bitcoin, folks. People used to dealing with online methods of storing value will explore alternatives. No matter what we think about banks, they are mostly safer than the alternatives, at least in the OECD, so this will reduce overall safety.

3. Anyone who actually intends to harm your homeland already knows what you are up to. So, they'll just avoid it. The easy way is to not carry any electronic devices across the border. They'll pick up new devices as they're driving off from the airport.

4. Boom - the entire technique of searching electronic devices is now spent on generating false positives, which are positive hits on the electronic devices of innocent travellers who want to travel, not hurt. All of which brings harm to everyone except the bad guys, who will be left free because there is nothing to search.

5. This is the slight flaw in my argument that everyone will be less safe: the terrorists will be safer, because they won't be being searched. But, as they intend to harm, their level of safety is very low in the long run.

6. Which will lead to border guards accusing travellers without electronics of being suspicious jihadists. Which will lead real jihadists to start carrying burner phones pre-loaded with 'legends' being personas created for the purpose of tricking border guards.

And, yes, before you ask: it's easier for bad folk to create a convincing legend than it is to spot a legend in the crush of the airport queue.

7. The security industry is already - after only 2 weeks of this crazy policy - talking about how to hide personal data from a device search.

Some of these techniques of hiding the true worth will work. OK, that's the individual's right.

8. Note how you've made the security industry your enemy. I'm not sure how this works to the benefit of anyone, but it is going to make it harder for you to get quality advice in the future.

9. Some of the techniques won't work, leading to discovery, and a presumption that a traveller has something to hide. Therefore /guilty by privacy/ will be branded on innocent people, resulting in more cost to everyone.

10. All of the techniques will lead to an arms race as border guards have to develop newer and better understanding of each dark space in each electronic device, and we the people will have to hunt around for easy dark spaces. When we could all be doing something useful.

11. All of the techniques, working or not, will lower usability and therefore result in less overall security to the user. This is called Kerchkhoffs' 6th principle of security: if the device is too hard to use, it won't be used at all, achieving zero security.

The notion that searching electronic devices could make anyone safer is based on the likelihood of a freak of accident. That is, the chance that some idiotterrorjihadist doesn't follow the instructions from on-high, and actually carries a device on a plane with some real intel on it.

This is a forgettable chance. Someone who is so dumb as to fly on a plane, carrying the plans to blow up the airport on his phone, is unlikely to get out of the bath without slipping and breaking his neck. This is not a suitable operative to deal with the intricacies of some evil plot. Terrorists will know this; they're evil but they are not stupid. They will not let someone so stupid as to carry infringing material onto the plane.

There is zero upside in this tactic. The homeland security people who have been searching electronic devices have summarily destroyed a valuable targeted technique. They have increased harm and damages to everyone, except the people who they think they are chasing, which of course increases the harm to everyone.

October 23, 2016

Bitfinex - Wolves and a sheep voting on what's for dinner

When Bitcoin first started up, although I have to say I admired the solution in an academic sense, I had two critiques. One is that PoW is not really a sustainable approach. Yes, I buy the argument that you have to pay for security, and it worked so it must be right. But that's only in a narrow sense - there's also an ecosystem approach to think about.

Which brings us to the second critique. The Bitcoin community has typically focussed on security of the chain, and less so on the security of the individual. There aren't easy tools to protect the user's value. There is an excess of focus on technologically elegant inventions such as multisig, HD, cold storage, 51% attacks and the like, but there isn't enough focus on how the user survives in that desperate world.

Instead, there's a lot of blame the victim, saying they should have done X, or Y or used our favourite toy or this exchange not that one. Blaming the victim isn't security, it's cannibalism.

Unfortunately, you don't get out of this for free. If the Bitcoin community doesn't move to protect the user, two things will happen. Firstly, Bitcoin will earn a dirty reputation, so the community won't be able to move to the mainstream. E.g., all these people talking about banks using Bitcoin - fantasy. Moms and pops will be and remain safer with money in the bank, and that's a scary thought if you actually read the news.

Secondly, and worse, the system remains vulnerable to collapse. Let's say someone hacks Mt.Gox and makes a lot of money. They've now got a lot of money to invest in the next hack and the next and the next. And then we get to the present day:

Message to the individual responsible for the Bitfinex security incident of August 2, 2016

We would like to have the opportunity to securely communicate with you. It might be possible to reach a mutually agreeable arrangement in exchange for an enormous bug bounty (payable through a more privacy-centric and anonymous way).

So it turns out a hacker took a big lump of Bitfinex's funds. However, the hacker didn't take it all. Joseph VaughnPerling tells me:

"The bitfinex hack took just about exactly what bitfinex had in cold storage as business profit capital. Bitfinex could have immediately made all customers whole, but then would have left insufficient working capital. The hack was executed to do the maximal damage without hurting the ecosystem by putting bitfinex out of business. They were sure to still be around to be hacked again later. It is like a good farmer, you don't cut down the tree to get the apples."

A carefully calculated amount, coincidentally about the same as Bitfinex's working capital! This is annoyingly smart of the hacker - the parasite doesn't want to kill the host. The hacker just wants enough to keep the company in business until the next mafiosa-style protection invoice is due.

So how does the company respond? By realising that it is owned. Pwn'd the cool kids say. But owned. Which means a negotiation is due, and better to convert the hacker into a more responsible shareholder or partner than to just hand over the company funds, because there has to be some left over to keep the business running. The hacker is incentivised to back off and just take a little, and the company is incentivised to roll over and let the bigger dog be boss dog.

Everyone wins - in terms of game theory and economics, this is a stable solution. Although customers would have trouble describing this as a win for them, we're looking at it from an ecosystem approach - parasite versus host.

But, that stability only survives if there is precisely one hacker. What happens if there are two hackers? What happens when two hackers stare at the victim and each other?

Well, it's pretty easy to see that two attackers won't agree to divide the spoils. If the first one in takes an amount calculated to keep the host alive, and then the next hacker does the same, the host will die. Even if two hackers could convert themselves into one cartel and split the profits, a third or fourth or Nth hacker breaks the cartel.

The hackers don't even have to vote on this - like the old joke about democracy, when there are 2 wolves and 1 sheep, they eat the sheep immediately. The talk about voting is just the funny part for human consumption. Pardon the pun.

The only stability that exists in the market is if there is between zero and one attacker. So, barring the emergence of some new consensus protocol to turn all the individual attackers into one global mafiosa guild, a theme frequently celebrated in the James Bond movies, this market cannot survive.
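
The instability can be seen in a toy model. The following Python sketch is an illustration only: the names `capital`, `survival_floor` and `host_survives` and all the figures are assumptions, not data from the Bitfinex incident. Each attacker sizes the take from the same pre-attack snapshot, taking everything above what the host needs to stay in business.

```python
def host_survives(capital: int, survival_floor: int, n_attackers: int) -> bool:
    """Toy model: does the host keep at least its survival floor?

    Assumption: every attacker sizes the take from the same pre-attack
    snapshot, taking everything above the floor, unaware of the others.
    """
    take_each = capital - survival_floor
    remaining = capital - n_attackers * take_each
    return remaining >= survival_floor


# Hypothetical figures, in arbitrary units.
for n in range(4):
    print(n, "attacker(s): host survives =", host_survives(100, 40, n))
```

With these made-up numbers the host survives zero or one attacker and fails as soon as a second one applies the same calculation.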

To survive in the long run, the Bitcoin community have to do better than the banks - much better. If the Bitcoin community wants a future, they have to change course. They have to stop obsessing about the chain's security and start obsessing about the user's security.

The mantra should be, nobody loses money. If you want users, that's where you have to set the bar - nobody loses money. On the other hand, if you want to build an ecosystem of gamblers, speculators and hackers, by all means, obsess about consensus algorithms, multisig and cold storage.

ps; I first made this argument of ecosystem instability in "Bitcoin & Gresham's Law - the economic inevitability of Collapse," co-authored with Philipp Güring.

March 27, 2016

OODA loop of breach patching - Adobe

My measurement of the OODA loop length for the renegotiation bug in SSL was a convenient device to show where we are failing. The OODA loop is famous in military circles for the notion that if your attacker circles faster than you, he wins. Recently, Tudor Dumitras wrote:

To understand security threats, and our ability to defend against them, an important question is "Can we patch vulnerabilities faster than attackers can exploit them?" (to quote Bruce Schneier). When asking this question, people usually think about creating patches for known vulnerabilities before exploits can be developed or discovering vulnerabilities before they can be targeted in zero-day attacks. However, another race may have an even bigger impact on security: once a patch is released, it must also be deployed on all the hosts running the vulnerable software before the vulnerability is exploited in the wild. ....

For example, CVE-2011-0611 affected both the Adobe Flash Player and Adobe Reader (Reader includes a library for playing .swf objects embedded in a PDF). Because updates for the two products were distributed using different channels, the vulnerable host population decreased at different rates, as illustrated in the figure on the left. For Reader patching started 9 days after disclosure (after patch for CVE-2011-0611 was bundled with another patch in a new Reader release), and the update reached 50% of the vulnerable hosts after 152 days. For Flash patching started earlier, 3 days after disclosure, but the patching rate soon dropped (a second patching wave, suggested by the inflection in the curve after 43 days, eventually subsided as well). Perhaps for this reason, CVE-2011-0611 was frequently targeted by exploits in 2011, using both the .swf and PDF vectors.

My comments - it is good to see the meme spreading. I first started talking about how updates are an essential toolkit back in the mid 2000s, as a consequence of my 7 scrappy hypotheses. I've recently spotted the Security folk in IETF starting to talk about it, and the Bitcoin hardfork debate has thrown upgradeability into stark relief. Also, the clear capabilities from Apple to push out updates, the less clear but not awful work by Microsoft in patching, and the disaster that is Android have made it clear:

The future of security includes a requirement to do dynamic updating.

Saying it is harder than doing it, but that's why we're in the security biz.

October 25, 2015

When the security community eats its own...

If you've ever wondered what that Market for Silver Bullets paper was about, here's Pete Herzog with the easy version:

When the Security Community Eats Its Own

BY PETE HERZOG

http://isecom.org

The CEO of a Major Corp. asks the CISO if the new exploit discovered in the wild, Shizzam, could affect their production systems. He said he didn't think so, but just to be sure he said they will analyze all the systems for the vulnerability.

So his staff is told to drop everything, learn all they can about this new exploit and analyze all systems for vulnerabilities. They go through logs, run scans with FOSS tools, and even buy a Shizzam plugin from their vendor for their AV scanner. They find nothing.

A day later the CEO comes and tells him that the news says Shizzam likely is affecting their systems. So the CISO goes back to his staff to have them analyze it all over again. And again they tell him they don’t find anything.

Again the CEO calls him and says he’s seeing now in the news that his company certainly has some kind of cybersecurity problem.

So, now the CISO panics and brings on a whole incident response team from a major security consultancy to go through each and every system with great care. But after hundreds of man hours spent doing the same things they themselves did, they find nothing.

He contacts the CEO and tells him the good news. But the CEO tells him that he just got a call from a journalist looking to confirm that they’ve been hacked. The CISO starts freaking out.

The CISO tells his security guys to prepare for a full security upgrade. He pushes the CIO to authorize an emergency budget to buy more firewalls and secondary intrusion detection systems. The CEO pushes the budget to the board who approves the budget in record time. And almost immediately the equipment starts arriving. The team works through the nights to get it all in place.

The CEO calls the CISO on his mobile – rarely a good sign. He tells the CISO that the NY Times just published that their company allegedly is getting hacked Sony-style.

They point to the newly discovered exploit as the likely cause. They point to blogs discussing the horrors the new exploit could cause, and what it means for the rest of the smaller companies out there who can’t defend themselves with the same financial alacrity as Major Corp.

The CEO tells the CISO that it's time they bring in the FBI. So he needs him to come explain himself and the situation to the board that evening.

The CISO feels sick to his stomach. He goes through the weeks of reports, findings, and security upgrades. Hundreds of thousands spent and - nothing! There's NOTHING to indicate a hack or even a problem from this exploit.

So wondering if he’s misunderstood Shizzam and how it could have caused this, he decides to reach out to the security community. He makes a new Twitter account so people don’t know who he is. He jumps into the trending #MajorCorpFail stream and tweets, "How bad is the Major Corp hack anyway?"

A few seconds later a penetration tester replies, "Nobody knows xactly but it’s really bad b/c vendors and consultants say that Major Corp has been throwing money at it for weeks."

Read on for the deeper analysis.

April 03, 2015

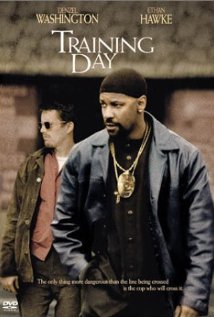

Training Day 2: starring Bridges & Force

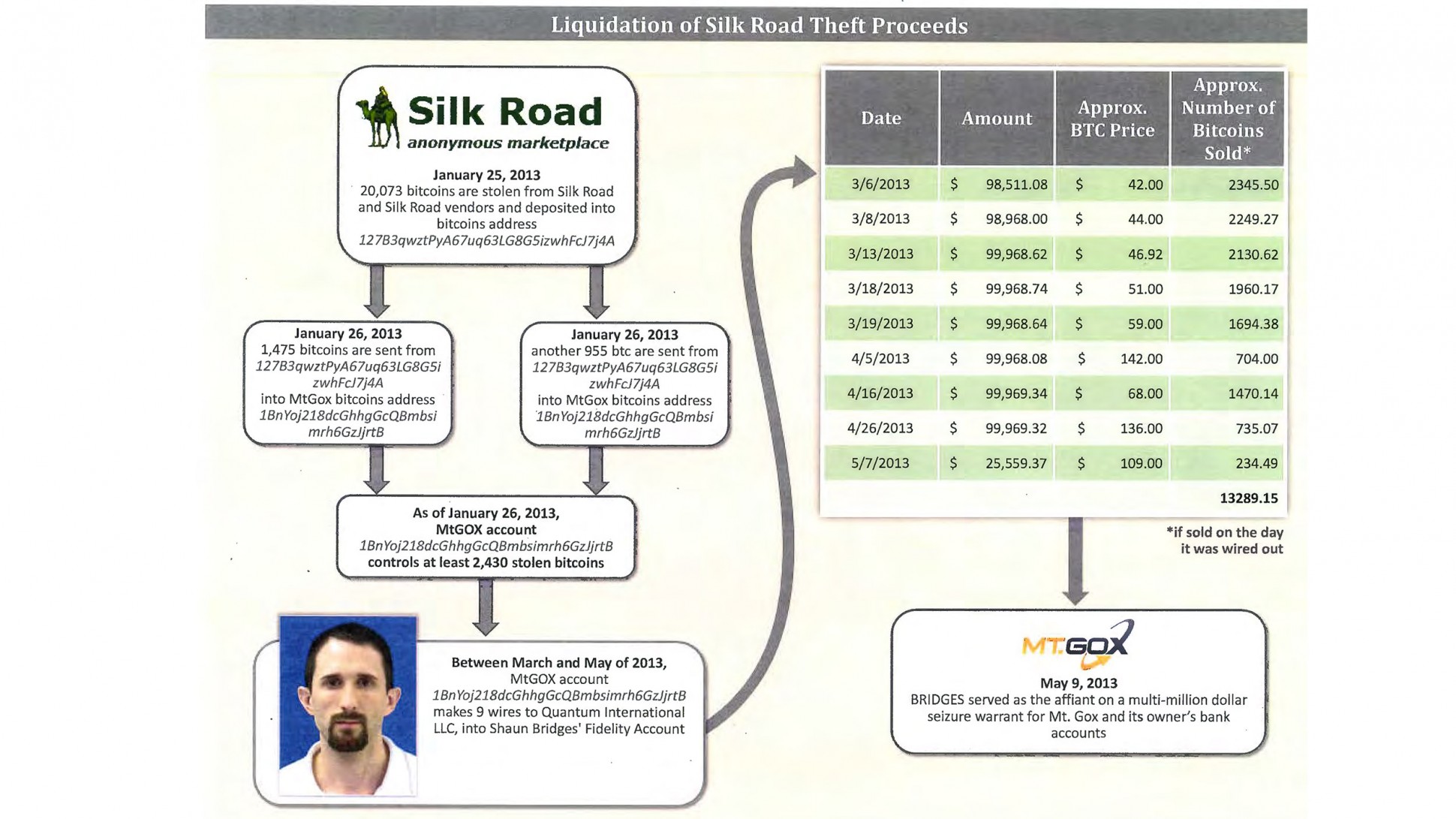

Readers have probably been watching the amazing story of the Bridges & Force arrests in the USA. It's starting to look much like a film, and the one I have in mind is this: Training Day.

In short: two agents were sent in to bring down the Silk Road website for selling anything (guns, drugs, etc). In the process, the agents stole a lot of the money. And in the process, went on a rampage through the Bitcoin economy robbing, extorting, and manipulating their way to riches.

You can't make this up. Worse, we don't need to. The problem is deep, underlying and demented within our society. We're going to see much more of it, and the reason we know this is that we have decades of experience in other countries outside the OECD purview.

This is our own actions coming back to destroy us. In a nutshell here it is, here is the short story that gets me on the FATF's blacklist and you too if you spread it:

In the 1980s, certain European governments got upset about certain forms of arbitrage across nations by multinationals and rich folk. These people found a ready consensus with others in policing work who said that "follow the money" was how you catch the really bad people, a.k.a. criminals. Between these two groups of public servants they felt they could crack open the bank secrecy that was protecting criminals and rich people alike.

So the Anti Money Laundering or AML project was born, under the aegis of FATF or financial action task force, an office created in Paris under OECD. Their concept was that they put together rules about how to stop bad money moving through the system. In short: know your customer, and make sure their funds were good. Add in risk management and suspicious activity reporting and you're golden.

On passing these laws, every politician faithfully promised it was only for the big stuff, drugs and terrorism, and would never be used against honest or wealthy or innocent people. Honest Injun!

If only so simple. Anyone who knows anything about crime or wealth realises within seconds that this is not going to achieve anything against the criminals or the wealthy. Indeed, it may even make matters worse, because (a) the system is too imperfect to be anything but noise, (b) criminals and wealthy can bypass the system, and (c) criminals can pay for access. Hold onto that thought.

So, if the FATF had stopped there, then AML would have just been a massive cost on society. Westerners would have paid basis points for nothing, and it would have just been a tool that shut the poor out of the financial system; something some call the problem of the 'unbanked' but that's a subject for another day (and don't use that term in my presence, thanks!). Criminals would have figured out other methods, etc.

If only. Just. But they went further.

In imposing the FATF 40 recommendations (yes, it got a lot more complicated and detailed, of course) everyone, everywhere, every time also stumbled on an ancient truth of bureaucracy without control: we could do more if we had more money! Because of course the social cost of following AML was also hitting the police: implementing this wonderful notion of "follow the money" cost a lot of money.

Until someone had the bright idea: if the money is bad, why can't we seize the bad money and use it to find more bad money?

And so, it came to pass. The centuries-honoured principle of 'consolidated revenue' was destroyed and nobody noticed because "we're stopping bad people." Laws and regs were finagled through to allow money seized from AML operations to be then "shared" across the interested good parties. Typically some goes to the local police, and some to the federal justice. You can imagine the heated discussions about percentage sharing.

What could possibly go wrong?

Now the police were empowered not only to seize vast troves of money, but also keep part of it. In the twinkling of an eye, your local police force was now incentivised to look at the cash pile of everyone in their local society and 'find' a reason to bust. And, as time went on, they built their system to be robust to errors: even if they were wrong, the chances of any comeback were infinitesimal and the take might just be reduced.

AML became a profit center. Why did we let this happen? Several reasons:

1. It's in part because "bad guys have bad money" is such a compelling story that none dare question those who take "bad money from bad guys."

Indeed, money laundering is such a common criminal indictment in USA simply because people assume it's true on the face of it. The crime itself is almost as simple as moving a large pot of money around, which if you understand criminal proceedings, makes no sense at all. How can moving a large pot of money around be proven as ML before you've proven a predicate crime? But so it is.

2. How could we as society be so stupid? It's because the principle of 'consolidated revenue' has been lost in time. The basic principle is simple: *all* monies coming into the state must go to the revenue office. From there they are spent according to the annual budget. This principle is there not only for accountability but to stop the local authorities becoming the bandits. The concept goes back all the way to the Magna Carta which was literally and principally about the barons securing the rights to a trial /over arbitrary seizure of their wealth/.

We dropped the ball on AML because we forgot history.

So what's all this to do with Bridges & Force? Well, recall that thought: the serious criminals can buy access. Which of course they've been doing since the beginning; the AML authorities themselves are victims of corruption.

As the various insiders in AML are corrupted, it becomes a corrosive force. Some insiders see people taking bribes and can't prove anything. Of course, these people aren't stupid, these are highly trained agents. Eventually they work out that they can't change anything and that the crooks will never be ousted from inside the AML authorities. And they start with a little on the side. A little becomes a lot.

Every agent in these fields is exposed to massive corruption right from the start. It's not as if agents sent into these fields are bad. Quite the reverse, they are good and are made bad. The way AML is constructed it seems impossible that there could be any other result - Quis custodiet ipsos custodes? or Who watches the watchers?

Remember the film Training Day? Bridges and Force are a remake, a sequel, this time moved a bit further north and with the added sex appeal of a cryptocurrency.

But the important thing to realise is that this isn't unusual, it's embedded. AML is fatally corrupted because (a) it can't work anyway, (b) the authorities breached the principle of consolidated revenue, (c) turned themselves into victims, and then (d) into the bad guys.

Until AML itself is unwound, we can't ourselves - society, police, authorities, bitcoiners - get back to the business of fighting the real bad guys. I'd love to talk to anyone about that, but unfortunately the agenda is set. We're screwed as society until we unwind AML.

February 16, 2015

Google's bebapay to close down, Safaricom shows them how to do it

In news today, BebaPay, the Google transit payment system in Nairobi, is shutting down. As predicted in this blog, the payment system was a disaster from the start, primarily because it did not understand the governance (aka corruption) flow of funds in the industry. This resulted in the erstwhile operators of the system conspiring to make sure it would not work.

How do I know this? I was in Nairobi when it first started up, and we were analysing a lot of market sectors for payments technology at the time. It was obvious to anyone who had actually taken a ride on a Matatu (the little buses that move millions of Kenyans to work) that automating their fares was a really tough sell. And, once we figured out how the flow of funds for the Matatu business worked, from inside sources, we knew a digital payments scheme was dead on arrival.

As an aside there is a play that could have been done there, in a nearby sector, which is the tuk-tuks or motorbike operators that are clustered at every corner. But that's a case-study for another day. The real point to take away here is that you have to understand the real flows of money, and when in Africa, understand that what we westerners call corruption means that our models are basically worthless.

Or in shorter terms, take a ride on the bus before you decide to improve it.

Meanwhile, in other news, Safaricom are now making a big push into the retail POS world. This was also in the wings at the time, and when I was there, we got the inside look into this field due to a friend who was running a plucky little mPesa facilitation business for retailers. He was doing great stuff, but the elephant in the room was always Safaricom, and it was no polite toilet-trained beast. Its reputation for stealing other companies' business ideas was a legend; in the payment systems world, you're better off modelling Safaricom as a bank.

Ah, that makes more sense... You'll note that Safaricom didn't press over-hard to enter the transit world.

The other great takeaway here is that westerners should not enter into the business of Africa lightly if at all. Westerners' biggest problem is that they don't understand the conditions there, and consequently they will be trapped in a self-fulfilling cycle of western pseudo-economic drivel. Perhaps even more surprising, they also can't turn to their reliable local NGOs or government partners or consultancies because these people are trained & paid by the westerners to feed back the same academic models.

How to break out of that trap economically is a problem I've yet to figure out. I've now spent a year outside the place, and I can report that I have met maybe 4 or 5 people amongst say 100 who actually understand the difference? Not a one of these is employed by an NGO, aid department, consultant, etc. And, these impressive organisations around the world that specialise in Africa are in this situation -- totally misinformed and often dangerously wrong.

I feel very badly for the poor of the world, they are being given the worst possible help, with the biggest smile and a wad of cash to help it along its way to failure.

Which leads me to a pretty big economic problem - solving this requires teaching what I learnt in a few years over a single coffee - can't be done. I suspect you have to go there, but even that isn't saying what's what.

Luckily however the developing world -- at least the parts I saw in Nairobi -- is now emerging with its own digital skills to address their own issues. Startup labs abound! And, from what I've seen, they are doing a much better job at it than the outsiders.

So, maybe this is a problem that will solve itself? Growth doesn't happen at more than 10% pa, so patience is perhaps the answer, not anger. We can live and hope, and if an NGO does want to take a shot at the title, I'm in for the 101st coffee.

December 03, 2014

MITM watch - patching binaries at Tor exit nodes

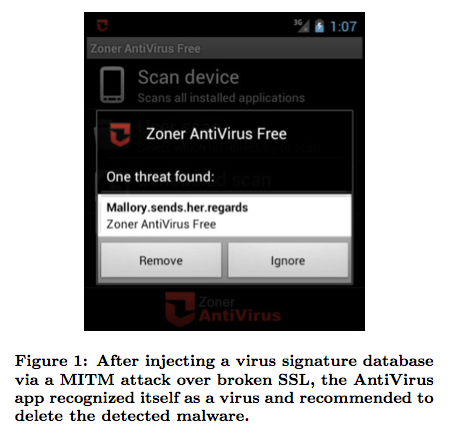

The real MITMs are so rare that protocols that are designed around them fall to the Bayesian impossibility syndrome (*). In short, false negatives cause the system to be ignored, and when the real negative indicator turns up it is treated as a false. Ignored. Fail.

Here's some evidence of that with Tor:

... I tested BDFProxy against a number of binaries and update processes, including Microsoft Windows Automatic updates. The good news is that if an entity is actively patching Windows PE files for Windows Update, the update verification process detects it, and you will receive error code 0x80200053..... If you Google the error code, the official Microsoft response is troublesome.

If you follow the three steps from the official MS answer, two of those steps result in downloading and executing a MS 'Fixit' solution executable. ... If an adversary is currently patching binaries as you download them, these 'Fixit' executables will also be patched. Since the user, not the automatic update process, is initiating these downloads, these files are not automatically verified before execution as with Windows Update. In addition, these files need administrative privileges to execute, and they will execute the payload that was patched into the binary during download with those elevated privileges.

(*) I'd love to hear a better name than Bayesian impossibility syndrome, which I just made up. It's pretty important, it explains why the current SSL/PKI/CA MITM protection can never work, relying on Bayesian statistics to explain why infrequent real attacks cannot be defended against when overshadowed by frequent false negatives.
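
The base-rate effect behind this can be worked through with Bayes' theorem. The sketch below is a minimal illustration in Python, and every number in it is hypothetical: it assumes real MITMs occur on one connection in a million, that the warning always fires on a real attack, and that 1% of honest connections trigger the same warning for innocent reasons (the frequent false indicators described above).

```python
def posterior_attack(prior: float, hit_rate: float, false_alarm_rate: float) -> float:
    """P(real attack | warning shown), by Bayes' theorem."""
    p_warning = hit_rate * prior + false_alarm_rate * (1.0 - prior)
    return hit_rate * prior / p_warning


# Hypothetical rates, chosen only to show the shape of the problem.
prior = 1e-6             # fraction of connections that are real MITMs
hit_rate = 1.0           # warning always fires on a real attack
false_alarm_rate = 0.01  # honest connections that still trigger the warning

p = posterior_attack(prior, hit_rate, false_alarm_rate)
print(f"P(attack | warning) = {p:.6f}")
```

Under these assumptions only about one warning in ten thousand marks a real attack, so a user who ignores every warning is right almost every time, which is exactly the habit that lets the one real attack through.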

September 22, 2014

More on Useful Proof of Work

Since posting that thought balloon on proof of work and its unfortunate social costs, I've been informed in private conversation that there are two considerations that I missed:

1. if the cost of the proof of work is brought down to zero by means of some re-use of the hashing, whatever that is, then we bring into question the security of the network. The network is secured literally because it costs to vote. If the vote is free, in some sense or other, then those with a free vote can seek to dominate.

2. the proof of work suffers a security weakness in that it must be verifiable at much lower cost than doing the work, as otherwise an attacker can provide junk proofs.

On the first, it is somewhat clear that this is the case in grand principle, but examining the edge cases reveals some exceptions. Take for example a 1 kilowatt hashing unit that could be used to simply provide building heat. If I use the waste output to heat my house, this is considered to result in a free vote. But this is not an adequate attack at the 51% level; my house isn't that big.

In apparent paradox, PoW as heating leads to more security because if I can heat my house and vote, getting both for the price of one, it is still an effect that is capped at very low shares of the overall hashset. So, the result is more distribution in hashing and mining, rather than less, and this is a defence against today's more worrying trend: the concentration of mining in a few people's hands.

More distribution directly attacks one of the current flaws of the current system. So how about this: instead of selling the latest hardware in Megawatt sizes, sell some smaller units in kilowatt sizes. I'd happily run a kilowatt grade heater instead of a bar heater, especially if it had a little panel on it showing its progress on voting, and it occasionally purred as it earned some proportion of heating costs back in shares in a hashpool.

On the second: cheaply verifiable problems are rare but they are not non-existent. Specifically, verification of some public key signatures is one. So why can't the PoW be refactored to make public key signatures?

For example, big SSL servers often outsource the hard crypto to accelerators and so forth; if the hard iron being built now could also be used for that purpose, two things become possible. One is that the research effort being expended could be shared across the two requirements. Right now, all this ASIC building is resulting in some usefully fast petahashing but that has only limited spin-off potential beyond bitcoin mining.

The second possibility: when a bitcoin mining rig passes its 6-month viable window and becomes just more mining excrement, it can then be re-purposed to some other task. I would happily pay $1000 for something that hypothetically sped up my signature signing or verification by a factor of 1000. The fact that this device would have fetched 100 times as much 6 months back and scored me 25 BTC every week isn't a problem, but an opportunity: for both myself and the old owner.

How would this be done in practice? Well, I want to load up my key in the device and run sigs through it. So we would need a design where each block's PoW were dependent on the full operation of a private/public key and hash signing process. E.g., take the last block, use the entropy within it to feed a known DRBG, create a deterministic key pair, and load that up. Keep feeding the PoW machine new signature inputs ...
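
The first step of that idea, turning the previous block into a deterministic private key, can be sketched with the Python standard library. This is a rough illustration under loud assumptions: HMAC-SHA256 in counter mode stands in for the "known" generator and is not a standards-compliant DRBG, the secp256k1 group order is an arbitrary choice since no curve is named here, and `derive_private_scalar` is a hypothetical helper. The signing step itself is left out.

```python
import hashlib
import hmac

# Group order of secp256k1. The choice of curve is an assumption for illustration.
SECP256K1_ORDER = 0xFFFFFFFF_FFFFFFFF_FFFFFFFF_FFFFFFFE_BAAEDCE6_AF48A03B_BFD25E8C_D0364141


def derive_private_scalar(last_block: bytes, order: int = SECP256K1_ORDER) -> int:
    """Deterministically map a block's bytes to a private scalar in [1, order).

    HMAC-SHA256 in counter mode is a simplified stand-in for a real DRBG.
    """
    seed = hashlib.sha256(last_block).digest()
    counter = 0
    while True:
        output = hmac.new(seed, counter.to_bytes(4, "big"), hashlib.sha256).digest()
        candidate = int.from_bytes(output, "big")
        if 1 <= candidate < order:
            return candidate
        counter += 1  # out-of-range draw (very rare): try the next counter value


# Every miner starting from the same block derives the same key.
key_a = derive_private_scalar(b"hypothetical previous block")
key_b = derive_private_scalar(b"hypothetical previous block")
print(key_a == key_b)
```

Because the derivation is deterministic, any verifier can recompute the key from the same block and check the signatures a miner claims to have produced.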

But, these ruminations aside, it appears that the socially unbeneficial criticism of PoW is a bent arrow. The purpose of PoW is much more subtle than might seem from first appearances. I think it is important to find a more socially aligned method, but it seems equally likely that the existing PoW of petahashing will be very hard to dislodge.

September 03, 2014

Proof of Work made useful -- auctioning off the calculation capacity is just another smart contract

Just got tipped to Andrew Poelstra's faq on ASICs, where he says of Adam Back's Proof of Work system in Bitcoin:

In places where the waste heat is directly useful, the cost of mining is merely the difference between electric heat production and ordinary heat production (here in BC, this would be natural gas). Then electricity is effectively cheap even if not actually cheap.

Which is an interesting remark. If true -- assume we're in Iceland where there is a need for lots of heat -- then Bitcoin mining can be free at the margin. Capital costs remain, but we shouldn't look a gift horse in the mouth?
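
The arithmetic behind that remark is short enough to sketch in Python. The prices below are hypothetical, and the function name `net_mining_cost_per_kwh` is made up for illustration; the sketch also assumes that every kWh of electricity fed to the miner ends up as one kWh of useful heat.

```python
def net_mining_cost_per_kwh(electricity_price: float, alt_heat_price: float) -> float:
    """Marginal energy cost of mining when its waste heat replaces other heating.

    Assumption: one kWh of electricity into the miner displaces one kWh of
    heat that would otherwise be bought from the alternative source.
    """
    return electricity_price - alt_heat_price


# Hypothetical prices per kWh of delivered heat, in any one currency.
gas_region = net_mining_cost_per_kwh(0.10, 0.04)
electric_heat_region = net_mining_cost_per_kwh(0.10, 0.10)
print(f"heat would otherwise come from gas:      {gas_region:.2f} per kWh")
print(f"heat would otherwise come from electric: {electric_heat_region:.2f} per kWh")
```

Where the heat would otherwise come from an electric heater at the same tariff, the energy cost of mining at the margin falls to zero, and only the capital cost remains.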

My view remains, and was from the beginning of BTC when Satoshi proposed his design, that mining is a dead-weight loss to the economy because it turns good electricity into bad waste, heat. And, the capital race adds to that, in that SHA2 mining gear is solely useful for ... Bitcoin mining. Such a design cannot survive in the long run, which is a reflection of Gresham's law, sometimes expressed as the simplistic aphorism of "bad money drives out good."

Now, the good thing about predicting collapse in the long run is that we are never proven wrong, we just have to wait another day ... but as Ben Laurie pointed out somewhere or other, the current incentives encourage the blockchain mining to consume the planet, and that's not another day we want to wait for.

Not a good thing. But if we switch production to some more socially aligned pattern /such as heating/, then likely we could at least shift some of the mining to a cost-neutrality.

Why can't we go further? Why can't we make the information calculated socially useful, and benefit twice? E.g., we can search for SETI, fold some DNA, crack some RSA keys. Andrew has commented on that too, so this is no new idea:

7. What about "useful" proofs-of-work?

These are typically bad ideas for all the same reasons that Primecoin is, and also bad for a new reason: from the network's perspective, the purpose of mining is to secure the currency, but from the miner's perspective, the purpose of mining is to gain the block reward. These two motivations complement each other, since a block reward is worth more in a secure currency than in a sham one, so the miner is incentivized to secure the network rather than attacking it.

However, if the miner is motivated not by the block reward, but by some social or scientific purpose related to the proof-of-work evaluation, then these incentives are no longer aligned (and may in fact be opposed, if the miner wants to discourage others from encroaching on his work), weakening the security of the network.

I buy the general gist of the alignments of incentives, but I'm not sure that we've necessarily unaligned things just by specifying some other purpose than calculating a SHA2 to get an answer close to what we already know.

Let's postulate a program that calculates some desirable property. Because that property is of individual benefit only, then some individual can pay for it. Then, the missing link would be to create a program that takes in a certain amount of money, and distributes that to nodes that run it according to some fair algorithm.

What's a program that takes in and holds money, gets calculated by many nodes, and distributes it according to an algorithm? It's Nick Szabo's smart contract distributed over the blockchain. We already know how to do that, in principle, and in practice there are many efforts out there to improve the art. Especially, see Ethereum.
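As a rough illustration of "takes in money and distributes it according to an algorithm", here is a minimal Python sketch. It is not written for any real contract platform: the function name `distribute_bounty`, the idea of counting "work units" per node, and the proportional rule with its remainder handling are all assumptions standing in for "some fair algorithm".

```python
from __future__ import annotations


def distribute_bounty(bounty: int, work_done: dict[str, int]) -> dict[str, int]:
    """Split an integer bounty among nodes in proportion to work units.

    Hypothetical rule: each node gets its proportional share rounded down;
    the rounding remainder is handed out one unit at a time, most work first.
    """
    total_work = sum(work_done.values())
    if total_work == 0:
        return {}  # nobody ran the program, so nothing is paid out

    payouts = {
        node: bounty * units // total_work for node, units in work_done.items()
    }
    remainder = bounty - sum(payouts.values())

    # Deterministic order (most work first, then by name) so every node
    # computing this split arrives at the same answer.
    ranked = sorted(work_done, key=lambda node: (-work_done[node], node))
    for node in ranked[:remainder]:
        payouts[node] += 1
    return payouts


shares = distribute_bounty(10_000, {"node_a": 3, "node_b": 2, "node_c": 1})
print(shares)
```

Integer arithmetic is used throughout so that no value is lost to rounding: the payouts always sum to the bounty paid in.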

So let's assume a smart contract. Then, the question arises how to get your smart contract accepted as the block calculation for 17:20 on this coming Friday evening? That's a consensus problem. Again, we already know how to do consensus problems. But let's postulate one method: hold a donation auction and simply order these things according to the amount donated. Close the block a day in advance and leave that entire day to work out which is the consensus pick on what happens at 17:20.
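The donation auction can be sketched in a few lines of Python. The `Bid` record, its field names, and the tie-break on contract id are assumptions added for illustration; the only rule taken from the text is "order these things according to the amount donated".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    contract_id: str  # hypothetical identifier for a candidate smart contract
    donation: int     # amount donated toward winning the block slot


def rank_bids(bids):
    """Order candidates by donation, largest first.

    Ties are broken by contract id (an assumption) so that every node
    sorting the same closed set of bids gets the same ranking.
    """
    return sorted(bids, key=lambda bid: (-bid.donation, bid.contract_id))


def pick_for_slot(bids):
    """Return the winning bid for the slot, or None if there were no bids."""
    ranked = rank_bids(bids)
    return ranked[0] if ranked else None


bids = [Bid("seti-search", 500), Bid("protein-fold", 900), Bid("rsa-crack", 900)]
print(pick_for_slot(bids).contract_id)  # protein-fold
```

Closing the bidding a day in advance is what makes this workable: once the set of bids is fixed, the ranking is a pure function of it, and the remaining day is spent agreeing on the set rather than on the winner.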

Didn't get a hit? If your smart contract doesn't participate, then at 17:30 it expires and sends back the money. Try again, put in more money? Or we can imagine a variation where it has a climbing ramp of value. It starts at 10,000 at 17:20 and then adds 100 for each of the next 100 blocks then expires. This then allows an auction crossing, which can be efficient.
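The climbing ramp is simple enough to write down. A minimal Python sketch, using the numbers from the example (start at 10,000, add 100 per block for 100 blocks); the function name `ramp_bid` and the reading that the offer is still live on the 100th block and gone on the 101st are assumptions.

```python
def ramp_bid(blocks_since_start, start_value=10_000, step=100, ramp_blocks=100):
    """Value offered by the contract at a given block offset.

    Returns None when the offer is not live: before the start block, or
    after the ramp has run its course and the contract has expired.
    """
    if blocks_since_start < 0 or blocks_since_start > ramp_blocks:
        return None
    return start_value + step * blocks_since_start


print(ramp_bid(0))    # 10000
print(ramp_bid(50))   # 15000
print(ramp_bid(100))  # 20000
print(ramp_bid(101))  # None
```

A miner watching this ramp accepts as soon as the offer crosses its own cost of running the calculation, which is where the auction crossing happens.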

An interesting attack here might be that I could code up a smartcontract-block-PoW that has a backdoor, similar to the infamous DUAL_EC random number generator from NIST. But, even if I succeed in coding it up without my obfuscated clause being spotted, the best I can do is pay for it to reach the top of the rankings, then win my own payment back as it runs at 17:20.

With such an attack, I get my cake calculated and I get to eat it too. As far as incentives go to the miner, I'd be better off going to the pub. The result is still at least as good as Andrew's comment, "from the network's perspective, the purpose of mining is to secure the currency."

What about the 'difficulty' factor? Well, this is easy enough to specify, it can be part of the program. The Ethereum people are working on the basis of setting enough 'gas' to pay for the program, so the notion of 'difficulty' is already on the table.

I'm sure there is something I haven't thought of as yet. But it does seem that there is more of a benefit to wring from the mining idea. We have electricity, we have capital, and we have information. Each of those is a potential for a bounty, so as to claw some sense of value back instead of just heating the planet to keep a bunch of libertarians with coins in their pockets. Comments?

August 14, 2014

Heartbleed v Ethereum v Tezos: has the Open Source model utterly failed to secure the world's infrastructure? Or is there a missing trick here?

L.M. Goodman stated in a recent paper on Tezos:

"The heartbleed bug caused millions of dollars in damages."

To which I asked what the cites were. His immediate response (thanks!) was "Nothing very academic" but the links were very interesting in and of themselves.

First up, a number of the cost of Heartbleed:

....To put an actual number on it, given some historical precedence, I think $500 million is a good starting point [to the cost of Heartbleed].

So, read the entire article for your view, but I'll take the $500m as given for this post. It's a number, right? Then:

Big tech companies offer millions after Heartbleed crisis Thu, Apr 24 12:00 PM EDT By Jim Finkle BOSTON (Reuters) - The world's biggest technology companies are donating millions of dollars to fund improvements in open source programs like OpenSSL, the software whose "Heartbleed" bug has sent the computer industry into turmoil.

Amazon.com Inc, Cisco Systems Inc, Facebook Inc, Google Inc, IBM, Intel Corp and Microsoft Corp are among a dozen companies that have agreed to be founding members of a group known as Core Infrastructure Initiative. Each will donate $300,000 to the venture, which is recruiting more backers among technology companies as well as the financial services sector.

Other early supporters are Dell, Fujitsu Ltd, NetApp Inc, Rackspace Hosting Inc and VMware Inc.

The industry is stepping up after the group of developers who volunteer to maintain OpenSSL revealed that they received donations averaging about $2,000 a year to support the project, whose code is used to secure two-thirds of the world's websites and is incorporated into products from many of the world's most profitable technology companies.

What is truly very outstanding is that last number: $2000 a year supports an infrastructure which the world's websites reside on.

Which infrastructure was hit by a minor glitch which caused $500m of costs.

This is a wtf moment! What can we conclude from this 250,000 to 1 ratio? Try these thoughts on for size:

- Open source drives the SSL business because Apache, Chrome and Mozilla control the lion's share of activity in SSL. Has the model of open source failed to keep ecommerce reasonably secured? What appears clearer is that the open source model adds nothing to the accounting for the value to society of this infrastructure. We could argue that accounting isn't its job, but actually some proponents argue vociferously that source code should not be charged for, which is an accounting statement. So I'd say this is a germane point, because the marketing of the open source community may be making us less secure if OpenSSL developers find it hard to charge for their work.

- The "many eyeballs" theory is open source's main claim to security. Is this a sick joke which just cost society $500m or is this an outlier never to be repeated? Or proof that it's working?

- This all isn't to say that the paid model is better, the paid alternative includes its disasters. But the paid model does typically carry liability and allocate maintenance out of the revenues. Open source doesn't seem to do that.

- Echoes of Y2K -- even though the combined spend was $500m, we still see no damages. No bad guys slipped in and stole any money, that we know of. Yes, there was one attack on CRA which cost a few hundred data sets, but again because the damage was caught before, we simply don't know whether spending $500m saved us anything.

The direct cause of costs here is one of upgrade. A sysadm wants to hit the button, and upgrade from BAD OpenSSL to GOOD. Why is that so hard? How do you upgrade SSL? Fixing bugs works in slow time because of burdensome commit privileges and the long supply chain; putting through protocol changes works in even slower time. At the protocol level, the IETF working group process is good at adding in algorithms (around 350 available, yoohoo!) but has no answer for taking things away; the combined effect of these 'essential processes' leads to an OODA cycle of 3.5 years to 80% rollout, as measured over the renegotiation bug.

This is not an attack on the people; the ones I've met are not bad people, and they are diligently doing their part. This is an attack on the change process, which sucks, today at a power of 250,000 to one.

$500,000,000 ⇒ $5,000,000 → $2,000
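The ratios behind that chain are quick to check. A small Python sketch using only figures quoted in this post; the variable names are mine, and treating "a dozen companies" as exactly 12 is an assumption.

```python
heartbleed_cost = 500_000_000  # the $500m estimate taken as given above
response_figure = 5_000_000    # the middle figure in the chain above
openssl_donations = 2_000      # per year, from the Reuters report

print(heartbleed_cost // openssl_donations)  # 250000
print(heartbleed_cost // response_figure)    # 100
print(response_figure // openssl_donations)  # 2500

# Founding pledges as reported: "a dozen" members at $300,000 each.
founding_pledges = 12 * 300_000
print(founding_pledges)  # 3600000
```

So the cost of the bug is 100 times the figure in the middle of the chain, which in turn is 2,500 times what the project had been receiving each year.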

This is a widespread, burning issue, so let's look at two positive lessons from the Bitcoin world.

Bitcoin faces the same developer shortage. As Bitcoin developers get snapped up by well-heeled startup ventures with millions in VC money, and as the altCoins and side-chains and ripples and ethereums and now Tezos snap at heels with alternatives, the need for change goes up while the developer availability goes down. L.M. Goodman makes the same point, that upgrade is the Achilles heel of all successful software systems:

Abstract: The popularization of Bitcoin, a decentralized crypto-currency has inspired the production of several alternative, or "alt", currencies. Ethereum, CryptoNote, and Zerocash all represent unique contributions to the crypto-currency space. Although most alt currencies harbor their own source of innovation, they have no means of adopting the innovations of other currencies which may succeed them.

Is this the same thing that happened to OpenSSL?

As an emerging model, new startups such as Ripple and Ethereum have done pre-mines: massive creation of paper value before letting loose the system in the wild. These paper values are then hoarded in foundations in order to pay for developers. As the system becomes popular, the value rises and more developers can be paid for.

Now, leaving aside the obvious problems of self-enrichment and bubble-blowing, it is at least a way to address the problems highlighted by the Heartbleed response above. For example, last Friday, Gavin Wood stated that Ethereum had raised $15m or so in BTC before they'd even shipped a real money client, which puts them several times ahead of OpenSSL. Not shabby, especially compared to the combined efforts of the world's powerful tech cabal.

And, stupidly, thousands of times ahead of OpenSSL's pittance of contributions at $2000 per year.

Of course, this situation only applies to a very cool segment of the market: those cryptocurrencies which manage to garner mass attention. But it does raise a theoretical possibility at least: imagine if every open source project were also to issue their own currency?

And do their pre-mine, with say 50% reserved for developers? Obviously, it's valueless stuff at the start ... until the project booms in popularity, and the currency rises in value. Which is the alignment we want -- cash for programmers as the software starts to prove itself.
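To make the alignment concrete, here is a toy Python calculation. The supply and prices are invented for illustration, and `developer_fund_value` is a hypothetical helper; the only number from the text is the 50% reserved for developers.

```python
def developer_fund_value(total_supply, developer_share, unit_price):
    """Market value of the pre-mined units reserved for developers."""
    reserved_units = total_supply * developer_share
    return reserved_units * unit_price


supply = 1_000_000  # hypothetical pre-mine size
share = 0.5         # "say 50% reserved for developers"

print(developer_fund_value(supply, share, 0.0))  # 0.0
print(developer_fund_value(supply, share, 2.0))  # 1000000.0
```

The fund is worth nothing until the market puts a price on the project's currency, and grows only as that price does, so the developers get paid when the software has proved itself to someone.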

Think about a new model of open source + foundation + pre-mine -- if OpenSSL or Eclipse or Firefox were their own money, they'd also solve the problem of paying for developers. (The obvious problem of "Eclipse is not a currency" is just a gap in your experience; contact any experienced financial cryptographer for how to solve that.)

Then, once you've got the money, how does it get spent? Upgrade is also a huge problem for the Bitcoin world. Adam Back has proposed two-way pegging to address the need to set up side chains for development purposes and also altCoin purposes. I've heard other ideas too, and for once, Microsoft and Apple are on the right side here with their patch Tuesdays and App Store processes.

Close with Goodman again:

We aim to remedy the potential for atrophied evolution in the crypto-currency space by presenting Tezos, a generic and self-amending crypto-ledger. Tezos can instanciate any blockchain based protocol. Its seed protocol specifies a procedure for stakeholders to approve amendments to the protocol, including amendments to the amendment procedure itself. Upgrades to Tezos are staged through a testing environment to allow stakeholders to recall potentially problematic amendments.

Maybe the new model is open source + foundation + pre-mine + dynamic upgrade?

July 23, 2014

on trust, Trust, trusted, trustworthy and other words of power

What follows is the clearest exposition of the doublethink surrounding the word 'trust' that I've seen so far. This post by Jerry Leichter on Crypto list doesn't actually solve the definitional issue, but it does map out the minefield nicely. Trustworthy?

On Jul 20, 2014, at 1:16 PM, Miles Fidelman <...> wrote:

>> On 19/07/2014 20:26 pm, Dave Horsfall wrote:

>>>

>>> A trustworthy system is one that you *can* trust;

>>> a trusted system is one that you *have* to trust.

>>

> Well, if we change the words a little, the government

> world has always made the distinction between:

> - certification (tested), and,

> - accreditation (formally approved)

The words really are the problem. While "trustworthy" is pretty unambiguous, "trusted" is widely used to mean two different things: We've placed trust in it in the past (and continue to do so), for whatever reasons; or as a synonym for trustworthy. The ambiguity is present even in English, and grows from the inherent difficulty of knowing whether trust is properly placed: "He's a trusted friend" (i.e., he's trustworthy); "I was devastated when my trusted friend cheated me" (I guess he was never trustworthy to begin with).

In security lingo, we use "trusted system" as a noun phrase - one that was unlikely to arise in earlier discourse - with the *meaning* that the system is trustworthy.